import sys

sys.path.insert(0, './userModel')

import my_resnet

import my_hyper_dict

59 Hyperparameter Tuning with spotpython and PyTorch Lightning for the Diabetes Data Set Using a User Specified ResNet Model

After importing the necessary libraries, the fun_control dictionary is set up via the fun_control_init function. The fun_control dictionary contains:

- PREFIX: a unique identifier for the experiment
- fun_evals: the number of function evaluations
- max_time: the maximum run time in minutes
- data_set: the data set. Here we use the Diabetes data set that is provided by spotpython.
- core_model_name: the class name of the neural network model. This neural network model is provided by spotpython.
- hyperdict: the hyperparameter dictionary. This dictionary is used to define the hyperparameters of the neural network model. It is also provided by spotpython.
- _L_in: the number of input features. Since the Diabetes data set has 10 features, _L_in is set to 10.
- _L_out: the number of output features. Since we want to predict a single value, _L_out is set to 1.

The HyperLight class is used to define the objective function fun. It connects the PyTorch and the spotpython methods and is provided by spotpython.

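To access the user specified ResNet model, the path to the user model must be added to the Python path. This is what the sys.path.insert(0, './userModel') call at the top of this section does, before my_resnet and my_hyper_dict are imported.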
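The mechanism can be tried in isolation. The following is a minimal sketch that uses a temporary directory and a hypothetical module named `my_toy_model` in place of the real `./userModel` files; it only illustrates how prepending a directory to `sys.path` makes a module in that directory importable.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Create a throwaway directory that plays the role of './userModel'.
user_dir = Path(tempfile.mkdtemp())

# Write a tiny stand-in module; 'my_toy_model' is a hypothetical name.
(user_dir / "my_toy_model.py").write_text("MODEL_NAME = 'toy'\n")

# Prepend the directory so that it is searched before all other entries.
sys.path.insert(0, str(user_dir))
importlib.invalidate_caches()

# The module can now be imported like any installed package.
my_toy_model = importlib.import_module("my_toy_model")
print(my_toy_model.MODEL_NAME)
```

Inserting at position 0 gives the user directory priority over other locations, so a module name that also exists elsewhere would resolve to the user's file.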

In the following code, we do not specify the ResNet model in the fun_control dictionary. It will be added in a second step as the user specified model.

Note, the divergence_threshold is set to 5,000, which is based on some pre-experiments with the Diabetes data set.
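As a rough illustration of what such a threshold does, the sketch below reports the first epoch at which a monitored loss exceeds the threshold. It assumes the setting behaves like the `divergence_threshold` argument of Lightning's `EarlyStopping` callback, which stops training once the monitored quantity becomes worse than the threshold. The function name `first_divergent_epoch` and the loss values are made up for illustration and are not spotpython's implementation.

```python
from typing import Optional, Sequence


def first_divergent_epoch(
    val_losses: Sequence[float], divergence_threshold: float
) -> Optional[int]:
    """Return the index of the first epoch whose loss exceeds the threshold.

    Returns None if the loss never exceeds the threshold.
    """
    for epoch, loss in enumerate(val_losses):
        if loss > divergence_threshold:
            return epoch
    return None


# Illustrative loss curves (not taken from the experiment).
healthy = [4800.0, 4100.0, 3600.0]
diverging = [4800.0, 5200.0, 9000.0]

healthy_stop = first_divergent_epoch(healthy, 5_000)
diverging_stop = first_divergent_epoch(diverging, 5_000)
print(healthy_stop, diverging_stop)
```

A run that trips the threshold is cut short, which saves tuning time on configurations that are clearly not converging.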

from math import inf

from spotpython.data.diabetes import Diabetes

from spotpython.hyperdict.light_hyper_dict import LightHyperDict

from spotpython.fun.hyperlight import HyperLight

from spotpython.utils.init import (fun_control_init, surrogate_control_init, design_control_init)

from spotpython.utils.eda import print_exp_table

from spotpython.spot import Spot

from spotpython.utils.file import get_experiment_filename

PREFIX="606-user-resnet"

data_set = Diabetes()

fun_control = fun_control_init(

PREFIX=PREFIX,

fun_evals=inf,

max_time=1,

data_set = data_set,

divergence_threshold=5_000,

_L_in=10,

_L_out=1)

fun = HyperLight().fun

In a second step, we can add the user specified ResNet model to the fun_control dictionary:

from spotpython.hyperparameters.values import add_core_model_to_fun_control

add_core_model_to_fun_control(fun_control=fun_control,

core_model=my_resnet.MyResNet,

hyper_dict=my_hyper_dict.MyHyperDict)

The method set_hyperparameter allows the user to modify default hyperparameter settings. Here we modify some hyperparameters to keep the model small and to decrease the tuning time.

from spotpython.hyperparameters.values import set_hyperparameter

set_hyperparameter(fun_control, "optimizer", [ "Adadelta", "Adam", "Adamax"])

set_hyperparameter(fun_control, "l1", [3,4])

set_hyperparameter(fun_control, "epochs", [3,7])

set_hyperparameter(fun_control, "batch_size", [4,11])

set_hyperparameter(fun_control, "dropout_prob", [0.0, 0.025])

set_hyperparameter(fun_control, "patience", [2,3])

set_hyperparameter(fun_control, "lr_mult", [0.1, 20.0])

design_control = design_control_init(init_size=10)

print_exp_table(fun_control)

| name           | type   | default   |   lower |   upper | transform             |
|----------------|--------|-----------|---------|---------|-----------------------|
| l1             | int    | 3         |     3   |   4     | transform_power_2_int |
| epochs         | int    | 4         |     3   |   7     | transform_power_2_int |
| batch_size     | int    | 4         |     4   |  11     | transform_power_2_int |
| act_fn         | factor | ReLU      |     0   |   5     | None                  |
| optimizer      | factor | SGD       |     0   |   2     | None                  |
| dropout_prob   | float  | 0.01      |     0   |   0.025 | None                  |
| lr_mult        | float  | 1.0       |     0.1 |  20     | None                  |
| patience       | int    | 2         |     2   |   3     | transform_power_2_int |
| initialization | factor | Default   |     0   |   4     | None                  |

Finally, a Spot object is created. Calling the method run() starts the hyperparameter tuning process.
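Before looking at the run, it may help to spell out what the integer bounds in the experiment table mean. The sketch below assumes that transform_power_2_int maps an integer x to 2**x, as its name suggests; the helper `power_2_int` is a local stand-in written for this illustration, not the spotpython function itself.

```python
def power_2_int(exponent: int) -> int:
    """Map an integer exponent to 2**exponent (stand-in for the transform)."""
    return 2 ** exponent


# (lower, upper) bounds copied from the experiment table.
bounds = {
    "l1": (3, 4),
    "epochs": (3, 7),
    "batch_size": (4, 11),
    "patience": (2, 3),
}

# Apply the transform to both ends of every range.
effective = {
    name: (power_2_int(lower), power_2_int(upper))
    for name, (lower, upper) in bounds.items()
}

for name, (lower, upper) in effective.items():
    print(f"{name}: {lower} to {upper}")
```

Under this reading, batch_size ranges from 16 to 2048, which agrees with batch dimensions such as [16, 10] and [2048, 10] in the "In sizes" column of the model summaries printed during the run.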

spot_tuner = Spot(fun=fun,fun_control=fun_control, design_control=design_control)

res = spot_tuner.run()

Milestones: [16, 32, 48]
┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓
┃   ┃ Name   ┃ Type       ┃ Params ┃ Mode  ┃ FLOPs  ┃ In sizes  ┃ Out sizes ┃
┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩
│ 0 │ layers │ Sequential │ 637    │ train │ 95.7 K │ [128, 10] │ [128, 1]  │
└───┴────────┴────────────┴────────┴───────┴────────┴───────────┴───────────┘

Trainable params: 637
Non-trainable params: 0
Total params: 637
Total estimated model params size (MB): 0
Modules in train mode: 63
Modules in eval mode: 0
Total FLOPs: 95.7 K

train_model result: {'val_loss': 24029.263671875, 'hp_metric': 24029.263671875}

Milestones: [2, 4, 6]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 310 K │ [128, 10] │ [128, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 310 K

train_model result: {'val_loss': 23935.533203125, 'hp_metric': 23935.533203125}

Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 23620.296875, 'hp_metric': 23620.296875}

Milestones: [2, 4, 6]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 38.8 K │ [16, 10] │ [16, 1] │ └───┴────────┴────────────┴────────┴───────┴────────┴──────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 38.8 K

train_model result: {'val_loss': 23686.23828125, 'hp_metric': 23686.23828125}

Milestones: [32, 64, 96]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 2.5 M │ [1024, 10] │ [1024, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴────────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 2.5 M

train_model result: {'val_loss': 23590.0078125, 'hp_metric': 23590.0078125}

Milestones: [32, 64, 96]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 1.5 M │ [2048, 10] │ [2048, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴────────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 1.5 M

train_model result: {'val_loss': 24738.689453125, 'hp_metric': 24738.689453125}

Milestones: [4, 8, 12]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 191 K │ [256, 10] │ [256, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 191 K

train_model result: {'val_loss': 24000.283203125, 'hp_metric': 24000.283203125}

Milestones: [4, 8, 12]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 382 K │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 382 K

train_model result: {'val_loss': 24577.533203125, 'hp_metric': 24577.533203125}

Milestones: [8, 16, 24]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 23.9 K │ [32, 10] │ [32, 1] │ └───┴────────┴────────────┴────────┴───────┴────────┴──────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 23.9 K

train_model result: {'val_loss': 24449.7421875, 'hp_metric': 24449.7421875}

Milestones: [8, 16, 24]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 155 K │ [64, 10] │ [64, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴──────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 155 K

train_model result: {'val_loss': 24279.90625, 'hp_metric': 24279.90625}

Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 2.5 M │ [1024, 10] │ [1024, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴────────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 2.5 M

train_model result: {'val_loss': 24030.890625, 'hp_metric': 24030.890625}

spotpython tuning: 23590.0078125 [----------] 0.82%. Success rate: 0.00% Milestones: [2, 4, 6]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 38.8 K │ [16, 10] │ [16, 1] │ └───┴────────┴────────────┴────────┴───────┴────────┴──────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 38.8 K

train_model result: {'val_loss': 24261.85546875, 'hp_metric': 24261.85546875}

spotpython tuning: 23590.0078125 [----------] 1.89%. Success rate: 0.00% Milestones: [32, 64, 96]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 5.0 M │ [2048, 10] │ [2048, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴────────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 5.0 M

train_model result: {'val_loss': 24037.138671875, 'hp_metric': 24037.138671875}

spotpython tuning: 23590.0078125 [----------] 2.83%. Success rate: 0.00% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20727.541015625, 'hp_metric': 20727.541015625}

spotpython tuning: 20727.541015625 [----------] 3.56%. Success rate: 25.00% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 18110.35546875, 'hp_metric': 18110.35546875}

spotpython tuning: 18110.35546875 [----------] 4.64%. Success rate: 40.00% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 19513.134765625, 'hp_metric': 19513.134765625}

spotpython tuning: 18110.35546875 [#---------] 5.87%. Success rate: 33.33% Milestones: [32, 64, 96]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 5.0 M │ [2048, 10] │ [2048, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴────────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 5.0 M

train_model result: {'val_loss': 24598.349609375, 'hp_metric': 24598.349609375}

spotpython tuning: 18110.35546875 [#---------] 7.37%. Success rate: 28.57% Milestones: [32, 64, 96]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20484.203125, 'hp_metric': 20484.203125}

spotpython tuning: 18110.35546875 [#---------] 8.38%. Success rate: 25.00% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 23700.240234375, 'hp_metric': 23700.240234375}

spotpython tuning: 18110.35546875 [#---------] 9.75%. Success rate: 22.22% Using spacefilling design as fallback.

Milestones: [32, 64, 96]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 155 K │ [64, 10] │ [64, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴──────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 155 K

train_model result: {'val_loss': 24304.251953125, 'hp_metric': 24304.251953125}

spotpython tuning: 18110.35546875 [#---------] 11.26%. Success rate: 20.00% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 2.5 M │ [1024, 10] │ [1024, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴────────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 2.5 M

train_model result: {'val_loss': 22364.47265625, 'hp_metric': 22364.47265625}

spotpython tuning: 18110.35546875 [#---------] 12.52%. Success rate: 18.18% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 23425.896484375, 'hp_metric': 23425.896484375}

spotpython tuning: 18110.35546875 [#---------] 13.88%. Success rate: 16.67% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 621 K │ [256, 10] │ [256, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 621 K

train_model result: {'val_loss': 21259.501953125, 'hp_metric': 21259.501953125}

spotpython tuning: 18110.35546875 [##--------] 15.07%. Success rate: 15.38% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20576.791015625, 'hp_metric': 20576.791015625}

spotpython tuning: 18110.35546875 [##--------] 16.48%. Success rate: 14.29% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 22856.45703125, 'hp_metric': 22856.45703125}

spotpython tuning: 18110.35546875 [##--------] 17.80%. Success rate: 13.33% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 21497.861328125, 'hp_metric': 21497.861328125}

spotpython tuning: 18110.35546875 [##--------] 19.14%. Success rate: 12.50% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 22453.681640625, 'hp_metric': 22453.681640625}

spotpython tuning: 18110.35546875 [##--------] 20.37%. Success rate: 11.76% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 382 K │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 382 K

train_model result: {'val_loss': 24528.6328125, 'hp_metric': 24528.6328125}

spotpython tuning: 18110.35546875 [##--------] 21.55%. Success rate: 11.11% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 23348.65625, 'hp_metric': 23348.65625}

spotpython tuning: 18110.35546875 [##--------] 22.88%. Success rate: 10.53% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 21292.18359375, 'hp_metric': 21292.18359375}

spotpython tuning: 18110.35546875 [##--------] 24.45%. Success rate: 10.00% Using spacefilling design as fallback.

Milestones: [8, 16, 24]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 765 K │ [1024, 10] │ [1024, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴────────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 765 K

train_model result: {'val_loss': 24059.1328125, 'hp_metric': 24059.1328125}

spotpython tuning: 18110.35546875 [###-------] 25.93%. Success rate: 9.52% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20854.2578125, 'hp_metric': 20854.2578125}

spotpython tuning: 18110.35546875 [###-------] 27.51%. Success rate: 9.09% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 23338.45703125, 'hp_metric': 23338.45703125}

spotpython tuning: 18110.35546875 [###-------] 29.09%. Success rate: 8.70% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20862.421875, 'hp_metric': 20862.421875}

spotpython tuning: 18110.35546875 [###-------] 30.45%. Success rate: 8.33% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 22772.486328125, 'hp_metric': 22772.486328125}

spotpython tuning: 18110.35546875 [###-------] 31.72%. Success rate: 8.00% Using spacefilling design as fallback.

Milestones: [4, 8, 12]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 95.7 K │ [128, 10] │ [128, 1] │ └───┴────────┴────────────┴────────┴───────┴────────┴───────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 95.7 K

train_model result: {'val_loss': 24437.765625, 'hp_metric': 24437.765625}

spotpython tuning: 18110.35546875 [###-------] 33.01%. Success rate: 7.69% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 21311.6953125, 'hp_metric': 21311.6953125}

spotpython tuning: 18110.35546875 [###-------] 34.60%. Success rate: 7.41% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 18749.962890625, 'hp_metric': 18749.962890625}

spotpython tuning: 18110.35546875 [####------] 36.14%. Success rate: 7.14% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20281.53515625, 'hp_metric': 20281.53515625}

spotpython tuning: 18110.35546875 [####------] 37.69%. Success rate: 6.90% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20327.638671875, 'hp_metric': 20327.638671875}

spotpython tuning: 18110.35546875 [####------] 39.24%. Success rate: 6.67% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 21904.189453125, 'hp_metric': 21904.189453125}

spotpython tuning: 18110.35546875 [####------] 40.79%. Success rate: 6.45% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20986.640625, 'hp_metric': 20986.640625}

spotpython tuning: 18110.35546875 [####------] 42.35%. Success rate: 6.25% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 23056.330078125, 'hp_metric': 23056.330078125}

spotpython tuning: 18110.35546875 [####------] 43.90%. Success rate: 6.06% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 18906.537109375, 'hp_metric': 18906.537109375}

spotpython tuning: 18110.35546875 [#####-----] 45.47%. Success rate: 5.88% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 17919.68359375, 'hp_metric': 17919.68359375}

spotpython tuning: 17919.68359375 [#####-----] 47.04%. Success rate: 8.57% Using spacefilling design as fallback.

Milestones: [32, 64, 96]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 77.7 K │ [32, 10] │ [32, 1] │ └───┴────────┴────────────┴────────┴───────┴────────┴──────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 77.7 K

train_model result: {'val_loss': 24016.23046875, 'hp_metric': 24016.23046875}

spotpython tuning: 17919.68359375 [#####-----] 48.65%. Success rate: 8.33% Milestones: [4, 8, 12]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 155 K │ [64, 10] │ [64, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴──────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 155 K

train_model result: {'val_loss': 23703.373046875, 'hp_metric': 23703.373046875}

spotpython tuning: 17919.68359375 [#####-----] 50.08%. Success rate: 8.11% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 21716.666015625, 'hp_metric': 21716.666015625}

spotpython tuning: 17919.68359375 [#####-----] 51.40%. Success rate: 7.89% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20698.615234375, 'hp_metric': 20698.615234375}

spotpython tuning: 17919.68359375 [#####-----] 52.71%. Success rate: 7.69% Using spacefilling design as fallback.

Milestones: [2, 4, 6]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 310 K │ [128, 10] │ [128, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 310 K

train_model result: {'val_loss': 24246.34375, 'hp_metric': 24246.34375}

spotpython tuning: 17919.68359375 [#####-----] 54.00%. Success rate: 7.50% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 24258.3203125, 'hp_metric': 24258.3203125}

spotpython tuning: 17919.68359375 [######----] 55.35%. Success rate: 7.32% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 24197.3515625, 'hp_metric': 24197.3515625}

spotpython tuning: 17919.68359375 [######----] 56.83%. Success rate: 7.14% Milestones: [4, 8, 12]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 310 K │ [128, 10] │ [128, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 310 K

train_model result: {'val_loss': 23864.40625, 'hp_metric': 23864.40625}

spotpython tuning: 17919.68359375 [######----] 58.27%. Success rate: 6.98% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20694.44140625, 'hp_metric': 20694.44140625}

spotpython tuning: 17919.68359375 [######----] 59.90%. Success rate: 6.82% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 22845.552734375, 'hp_metric': 22845.552734375}

spotpython tuning: 17919.68359375 [######----] 61.30%. Success rate: 6.67% Milestones: [32, 64, 96]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 22553.849609375, 'hp_metric': 22553.849609375}

spotpython tuning: 17919.68359375 [######----] 62.85%. Success rate: 6.52% Milestones: [8, 16, 24]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 21646.568359375, 'hp_metric': 21646.568359375}

spotpython tuning: 17919.68359375 [######----] 64.19%. Success rate: 6.38% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 155 K │ [64, 10] │ [64, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴──────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 155 K

train_model result: {'val_loss': 24010.759765625, 'hp_metric': 24010.759765625}

spotpython tuning: 17919.68359375 [#######---] 65.62%. Success rate: 6.25% Using spacefilling design as fallback.

Milestones: [8, 16, 24]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 191 K │ [256, 10] │ [256, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 191 K

train_model result: {'val_loss': 23867.04296875, 'hp_metric': 23867.04296875}

spotpython tuning: 17919.68359375 [#######---] 66.90%. Success rate: 6.12% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 21343.1796875, 'hp_metric': 21343.1796875}

spotpython tuning: 17919.68359375 [#######---] 68.38%. Success rate: 6.00% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 621 K │ [256, 10] │ [256, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 621 K

train_model result: {'val_loss': 21577.259765625, 'hp_metric': 21577.259765625}

spotpython tuning: 17919.68359375 [#######---] 69.86%. Success rate: 5.88% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20096.72265625, 'hp_metric': 20096.72265625}

spotpython tuning: 17919.68359375 [#######---] 71.30%. Success rate: 5.77% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 22248.748046875, 'hp_metric': 22248.748046875}

spotpython tuning: 17919.68359375 [#######---] 72.73%. Success rate: 5.66% Using spacefilling design as fallback.

Milestones: [4, 8, 12]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 23.9 K │ [32, 10] │ [32, 1] │ └───┴────────┴────────────┴────────┴───────┴────────┴──────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 23.9 K

train_model result: {'val_loss': 24082.240234375, 'hp_metric': 24082.240234375}

spotpython tuning: 17919.68359375 [#######---] 74.12%. Success rate: 5.56% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 19655.291015625, 'hp_metric': 19655.291015625}

spotpython tuning: 17919.68359375 [########--] 75.73%. Success rate: 5.45% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 19942.63671875, 'hp_metric': 19942.63671875}

spotpython tuning: 17919.68359375 [########--] 77.13%. Success rate: 5.36% Milestones: [32, 64, 96]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 24305.08984375, 'hp_metric': 24305.08984375}

spotpython tuning: 17919.68359375 [########--] 78.55%. Success rate: 5.26% Milestones: [8, 16, 24]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 621 K │ [256, 10] │ [256, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 621 K

train_model result: {'val_loss': 22457.099609375, 'hp_metric': 22457.099609375}

spotpython tuning: 17919.68359375 [########--] 80.17%. Success rate: 5.17% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20557.021484375, 'hp_metric': 20557.021484375}

spotpython tuning: 17919.68359375 [########--] 81.79%. Success rate: 5.08% Using spacefilling design as fallback.

Milestones: [8, 16, 24]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 1.5 M │ [2048, 10] │ [2048, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴────────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 1.5 M

train_model result: {'val_loss': 23803.66015625, 'hp_metric': 23803.66015625}

spotpython tuning: 17919.68359375 [########--] 83.05%. Success rate: 5.00% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 18272.107421875, 'hp_metric': 18272.107421875}

spotpython tuning: 17919.68359375 [########--] 84.66%. Success rate: 4.92% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 21429.08203125, 'hp_metric': 21429.08203125}

spotpython tuning: 17919.68359375 [#########-] 86.25%. Success rate: 4.84% Using spacefilling design as fallback.

Milestones: [32, 64, 96]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 155 K │ [64, 10] │ [64, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴──────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 155 K

train_model result: {'val_loss': 24519.021484375, 'hp_metric': 24519.021484375}

spotpython tuning: 17919.68359375 [#########-] 87.91%. Success rate: 4.76% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 20769.740234375, 'hp_metric': 20769.740234375}

spotpython tuning: 17919.68359375 [#########-] 89.48%. Success rate: 4.69% Using spacefilling design as fallback.

Milestones: [4, 8, 12]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 23.9 K │ [32, 10] │ [32, 1] │ └───┴────────┴────────────┴────────┴───────┴────────┴──────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 23.9 K

train_model result: {'val_loss': 25010.33203125, 'hp_metric': 25010.33203125}

spotpython tuning: 17919.68359375 [#########-] 90.83%. Success rate: 4.62% Milestones: [8, 16, 24]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 621 K │ [256, 10] │ [256, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 621 K

train_model result: {'val_loss': 21233.41796875, 'hp_metric': 21233.41796875}

spotpython tuning: 17919.68359375 [#########-] 92.14%. Success rate: 4.55% Using spacefilling design as fallback.

Milestones: [8, 16, 24]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 637 │ train │ 765 K │ [1024, 10] │ [1024, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴────────────┴───────────┘

Trainable params: 637 Non-trainable params: 0 Total params: 637 Total estimated model params size (MB): 0 Modules in train mode: 63 Modules in eval mode: 0 Total FLOPs: 765 K

train_model result: {'val_loss': 23673.33984375, 'hp_metric': 23673.33984375}

spotpython tuning: 17919.68359375 [##########] 96.49%. Success rate: 4.48% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 21687.8203125, 'hp_metric': 21687.8203125}

spotpython tuning: 17919.68359375 [##########] 98.04%. Success rate: 4.41% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 1.2 M │ [512, 10] │ [512, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴───────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 1.2 M

train_model result: {'val_loss': 23813.521484375, 'hp_metric': 23813.521484375}

spotpython tuning: 17919.68359375 [##########] 99.72%. Success rate: 4.35% Milestones: [16, 32, 48]┏━━━┳━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━━━━┓ ┃ ┃ Name ┃ Type ┃ Params ┃ Mode ┃ FLOPs ┃ In sizes ┃ Out sizes ┃ ┡━━━╇━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━━━━┩ │ 0 │ layers │ Sequential │ 1.8 K │ train │ 2.5 M │ [1024, 10] │ [1024, 1] │ └───┴────────┴────────────┴────────┴───────┴───────┴────────────┴───────────┘

Trainable params: 1.8 K Non-trainable params: 0 Total params: 1.8 K Total estimated model params size (MB): 0 Modules in train mode: 101 Modules in eval mode: 0 Total FLOPs: 2.5 M

train_model result: {'val_loss': 21133.958984375, 'hp_metric': 21133.958984375}

spotpython tuning: 17919.68359375 [##########] 100.00%. Success rate: 4.29% Done...

Experiment saved to 606-user-resnet_res.pkl

59.1 Looking at the Results

59.1.1 Tuning Progress

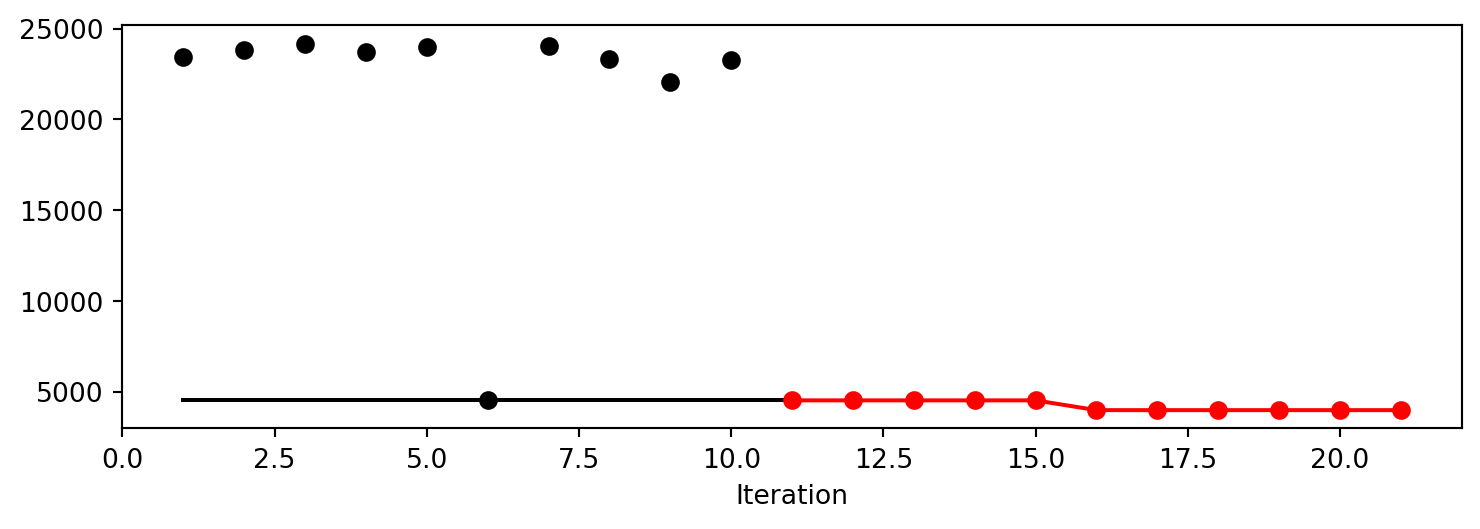

After the hyperparameter tuning run is finished, its progress can be visualized with spotpython’s plot_progress method. The black points represent the performance values (score or metric) of the hyperparameter configurations from the initial design, whereas the red points represent the hyperparameter configurations found by the surrogate-model-based optimization.

spot_tuner.plot_progress()
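The best value found so far is also the first number printed in each `spotpython tuning:` line of the log. The following minimal sketch does not use spotpython and is not its implementation. It recomputes that running minimum for the incumbent at the start of this excerpt and a few selected `val_loss` values from the log; the helper name `running_best` is hypothetical.

```python
from itertools import accumulate


def running_best(values):
    """Return the smallest value seen so far after each evaluation."""
    return list(accumulate(values, min))


# Incumbent reported in the log, followed by selected val_loss values from it.
val_losses = [
    18110.35546875,
    21311.6953125,
    18749.962890625,
    20281.53515625,
    17919.68359375,
]

best_so_far = running_best(val_losses)
print(best_so_far)
```

Only the last evaluation improves on the incumbent, so the running best stays at 18110.35546875 for the first four entries and then drops to 17919.68359375, matching the change in the log.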

59.1.2 Tuned Hyperparameters and Their Importance

Results can be printed in tabular form.

from spotpython.utils.eda import print_res_table

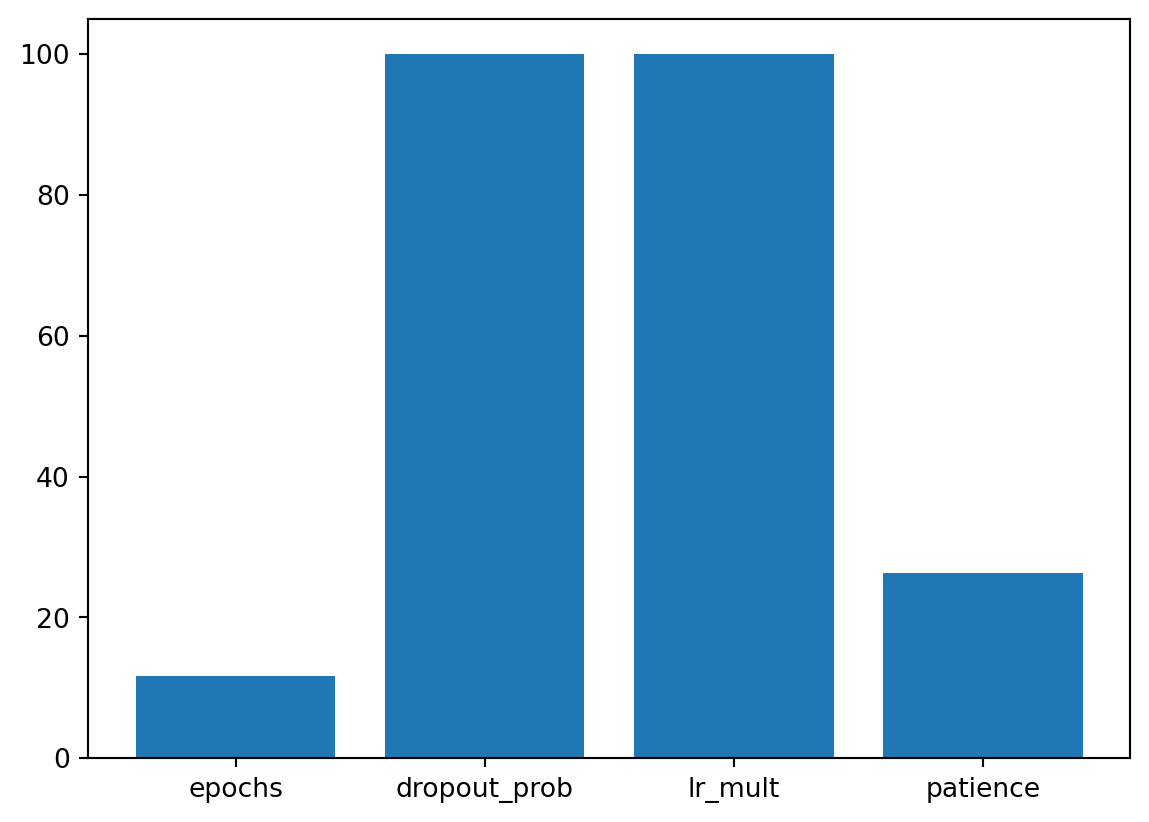

print_res_table(spot_tuner)

| name | type | default | lower | upper | tuned | transform | importance | stars |
|----------------|--------|-----------|---------|---------|--------------------|-----------------------|--------------|---------|
| l1 | int | 3 | 3.0 | 4.0 | 4.0 | transform_power_2_int | 42.24 | * |
| epochs | int | 4 | 3.0 | 7.0 | 6.0 | transform_power_2_int | 100.00 | *** |
| batch_size | int | 4 | 4.0 | 11.0 | 9.0 | transform_power_2_int | 0.00 | |
| act_fn | factor | ReLU | 0.0 | 5.0 | LeakyReLU | None | 7.15 | * |
| optimizer | factor | SGD | 0.0 | 2.0 | Adam | None | 1.50 | * |
| dropout_prob | float | 0.01 | 0.0 | 0.025 | 0.025 | None | 1.91 | * |
| lr_mult | float | 1.0 | 0.1 | 20.0 | 18.234657445315417 | None | 0.00 | |
| patience | int | 2 | 2.0 | 3.0 | 3.0 | transform_power_2_int | 0.00 | |
| initialization | factor | Default | 0.0 | 4.0 | Default | None | 3.36 | * |

A histogram can be used to visualize the most important hyperparameters.

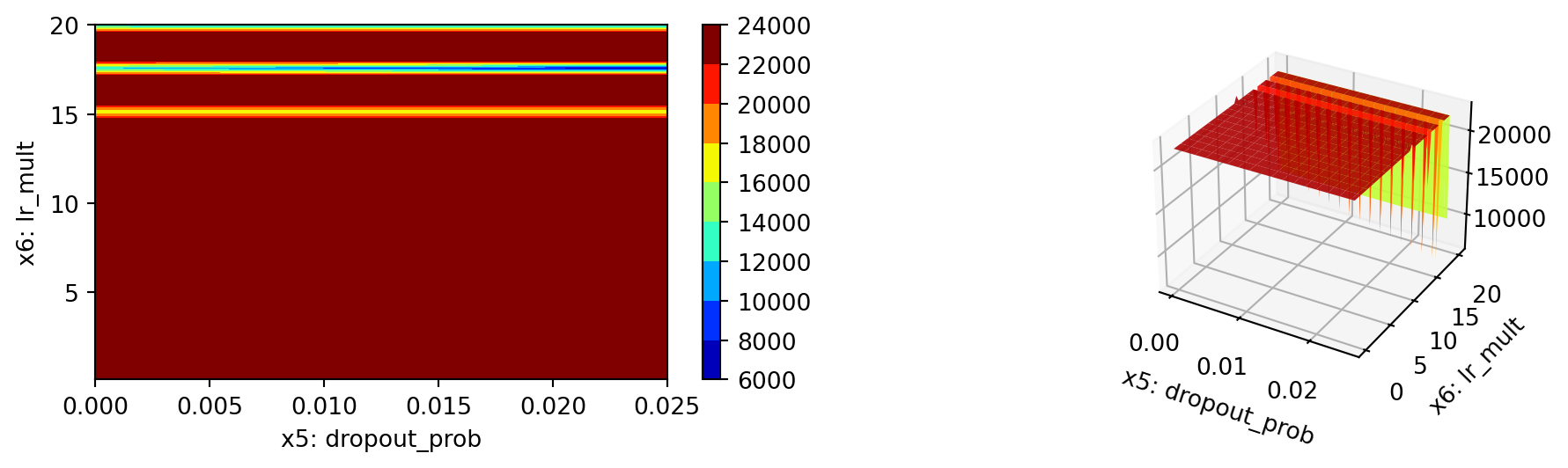

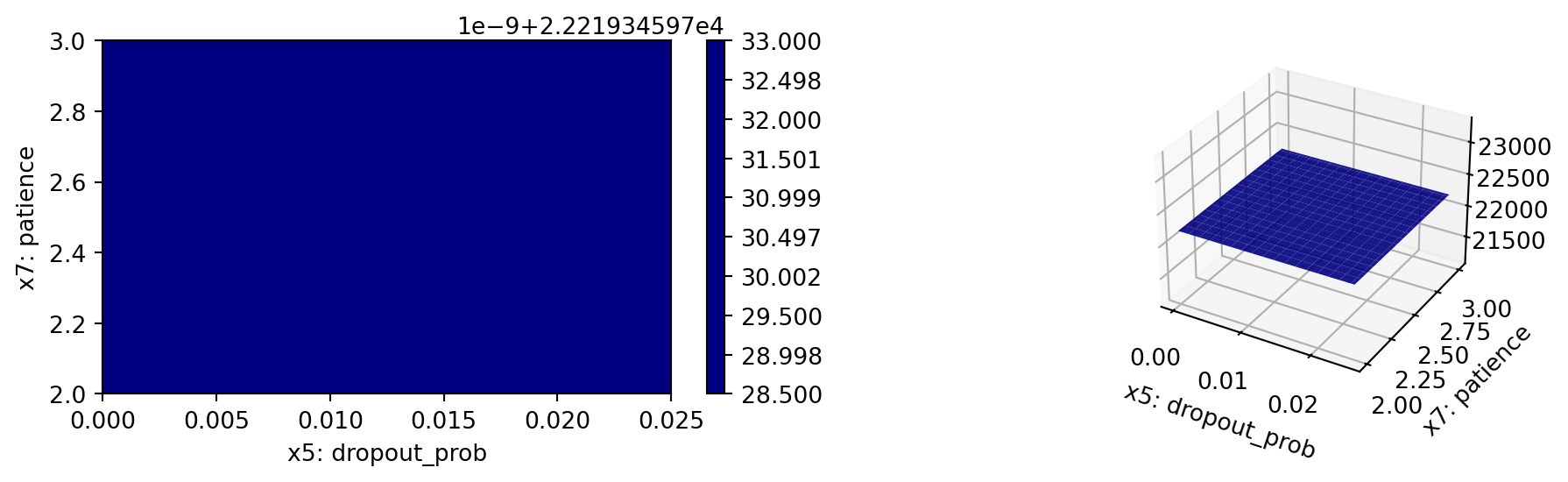

spot_tuner.plot_importance(threshold=1.0)

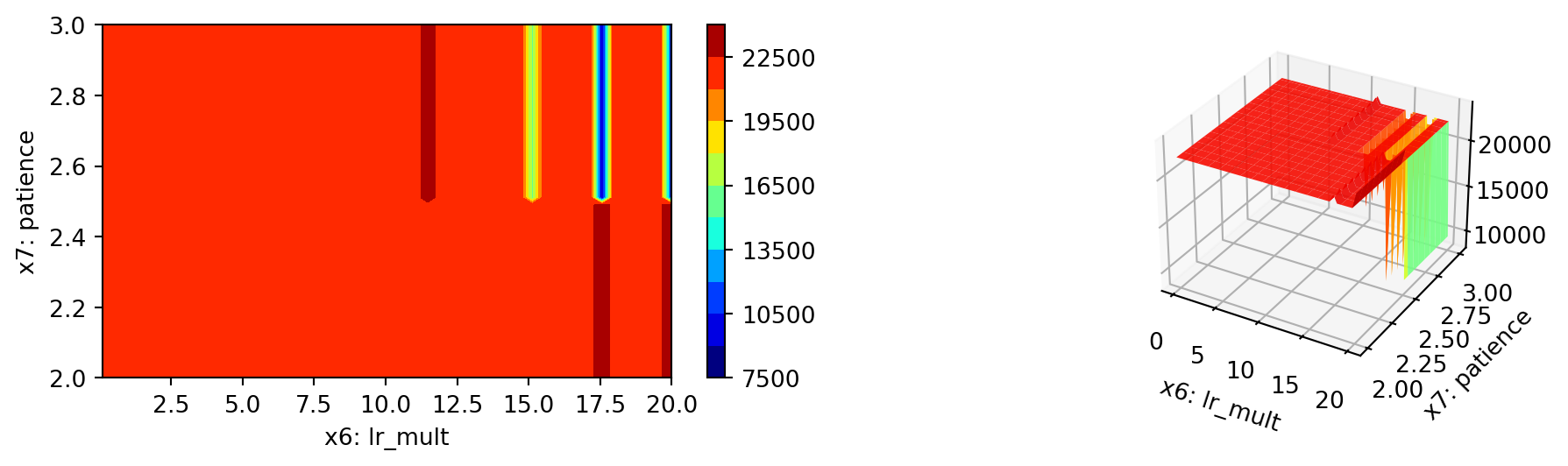

spot_tuner.plot_important_hyperparameter_contour(max_imp=3)

l1: 42.235528995106385

epochs: 99.99999999999999

batch_size: 0.002436486726267221

act_fn: 7.148977551281739

optimizer: 1.5005019982789352

dropout_prob: 1.906130030776425

lr_mult: 0.004957229189873098

patience: 0.002325990122205409

initialization: 3.3641496096464074
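The tuned column of the results table in this section is reported on the internal search scale. For the hyperparameters whose transform is `transform_power_2_int`, the values in the table and in the tuned architecture of Section 59.1.3 are consistent with raising 2 to the tuned value: `l1 = 4` corresponds to 16 and `batch_size = 9` to 512. The sketch below reproduces that mapping with a hypothetical stand-in function `power_2_int`. Its behaviour is an assumption inferred from these numbers, not spotpython's own code.

```python
def power_2_int(x):
    """Hypothetical stand-in: map an internal integer-valued x to 2**x."""
    return int(2 ** int(x))


# Tuned values on the internal scale, copied from the results table.
tuned_internal = {"l1": 4.0, "epochs": 6.0, "batch_size": 9.0, "patience": 3.0}

# Values on the natural scale, as they would be passed to the model.
tuned_natural = {name: power_2_int(v) for name, v in tuned_internal.items()}
print(tuned_natural)
# {'l1': 16, 'epochs': 64, 'batch_size': 512, 'patience': 8}
```

The same reading applies to the lower and upper columns: an `epochs` range of 3.0 to 7.0 corresponds to 8 to 128 epochs under this assumption.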

59.1.3 Get the Tuned Architecture

import pprint

from spotpython.hyperparameters.values import get_tuned_architecture

config = get_tuned_architecture(spot_tuner)

pprint.pprint(config)

{'act_fn': LeakyReLU(),
 'batch_size': 512,
 'dropout_prob': 0.025,
 'epochs': 64,
 'initialization': 'Default',
 'l1': 16,
 'lr_mult': 18.234657445315417,
 'optimizer': 'Adam',
 'patience': 8}

59.2 Details of the User-Specified ResNet Model

The specification of a user model requires three files:

- my_resnet.py: the Python file containing the user-specified ResNet model
- my_hyperdict.py: the Python file for loading the hyperparameter dictionary my_hyperdict.json for the user-specified ResNet model
- my_hyperdict.json: the JSON file containing the hyperparameter dictionary for the user-specified ResNet model

59.2.1 my_resnet.py
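Two pieces of arithmetic in the listing of this subsection are easy to miss: how the widths of the residual blocks are derived from `l1` and `_L_in`, and where the learning-rate milestones fall. The sketch below re-implements both as free functions so that they can be run without Lightning. The function names are chosen for illustration; the inputs use the tuned values `l1 = 16` and `epochs = 64` together with `_L_in = 10`.

```python
def generate_div2_list(n: int, n_min: int, max_repeats: int = 4) -> list:
    """Halve n until it drops below n_min; later widths are repeated more often."""
    result = []
    current = n
    repeats = 1
    while current >= n_min:
        result.extend([current] * min(repeats, max_repeats))
        current = current // 2
        repeats += 1
    return result


def hidden_sizes(l1: int, l_in: int) -> list:
    """Mirror of `_get_hidden_sizes`: output widths of the residual blocks."""
    n_low = max(2, int(l_in / 4))
    n_high = max(l1, 2 * n_low)
    return generate_div2_list(n_high, n_low)


def lr_milestones(epochs: int, num_milestones: int = 3) -> list:
    """Mirror of the milestone computation in `configure_optimizers`."""
    return [int(epochs / (num_milestones + 1) * (i + 1)) for i in range(num_milestones)]


sizes = hidden_sizes(l1=16, l_in=10)
milestones = lr_milestones(epochs=64)
print(sizes)       # [16, 8, 8, 4, 4, 4, 2, 2, 2, 2]
print(milestones)  # [16, 32, 48]
```

With these inputs the model stacks ten residual blocks (10 to 16, 16 to 8, and so on down to width 2) followed by a final linear layer to `_L_out`, and the `MultiStepLR` scheduler with `gamma=0.1` reduces the learning rate by a factor of ten at epochs 16, 32, and 48.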

import lightning as L
import torch
from torch import nn
from spotpython.hyperparameters.optimizer import optimizer_handler
import torchmetrics.functional.regression
import torch.optim as optim


class ResidualBlock(nn.Module):
    def __init__(self, input_dim, output_dim, act_fn, dropout_prob):
        super(ResidualBlock, self).__init__()
        self.fc1 = nn.Linear(input_dim, output_dim)
        self.bn1 = nn.BatchNorm1d(output_dim)
        self.ln1 = nn.LayerNorm(output_dim)
        self.fc2 = nn.Linear(output_dim, output_dim)
        self.bn2 = nn.BatchNorm1d(output_dim)
        self.ln2 = nn.LayerNorm(output_dim)
        self.act_fn = act_fn
        self.dropout = nn.Dropout(dropout_prob)
        self.shortcut = nn.Sequential()
        if input_dim != output_dim:
            self.shortcut = nn.Sequential(
                nn.Linear(input_dim, output_dim),
                nn.BatchNorm1d(output_dim)
            )

    def forward(self, x):
        identity = self.shortcut(x)
        out = self.fc1(x)
        out = self.bn1(out)
        out = self.ln1(out)
        out = self.act_fn(out)
        out = self.dropout(out)
        out = self.fc2(out)
        out = self.bn2(out)
        out = self.ln2(out)
        out += identity  # Residual connection
        out = self.act_fn(out)
        return out


class MyResNet(L.LightningModule):
    def __init__(
        self,
        l1: int,
        epochs: int,
        batch_size: int,
        initialization: str,
        act_fn: nn.Module,
        optimizer: str,
        dropout_prob: float,
        lr_mult: float,
        patience: int,
        _L_in: int,
        _L_out: int,
        _torchmetric: str,
    ):
        super().__init__()
        self._L_in = _L_in
        self._L_out = _L_out
        if _torchmetric is None:
            _torchmetric = "mean_squared_error"
        self._torchmetric = _torchmetric
        self.metric = getattr(torchmetrics.functional.regression, _torchmetric)
        self.save_hyperparameters(ignore=["_L_in", "_L_out", "_torchmetric"])
        self.example_input_array = torch.zeros((batch_size, self._L_in))
        if self.hparams.l1 < 4:
            raise ValueError("l1 must be at least 4")
        # Get hidden sizes
        hidden_sizes = self._get_hidden_sizes()
        layer_sizes = [self._L_in] + hidden_sizes
        # Construct the layers with Residual Blocks and Linear Layer at the end
        layers = []
        for i in range(len(layer_sizes) - 1):
            layers.append(
                ResidualBlock(
                    layer_sizes[i],
                    layer_sizes[i + 1],
                    self.hparams.act_fn,
                    self.hparams.dropout_prob
                )
            )
        layers.append(nn.Linear(layer_sizes[-1], self._L_out))
        self.layers = nn.Sequential(*layers)
        # Initialization (Xavier, Kaiming, or Default)
        self.apply(self._init_weights)

    def _init_weights(self, module):
        if isinstance(module, nn.Linear):
            if self.hparams.initialization == "xavier_uniform":
                nn.init.xavier_uniform_(module.weight)
            elif self.hparams.initialization == "xavier_normal":
                nn.init.xavier_normal_(module.weight)
            elif self.hparams.initialization == "kaiming_uniform":
                nn.init.kaiming_uniform_(module.weight)
            elif self.hparams.initialization == "kaiming_normal":
                nn.init.kaiming_normal_(module.weight)
            else:  # "Default"
                nn.init.uniform_(module.weight)
            if module.bias is not None:
                nn.init.zeros_(module.bias)

    def _generate_div2_list(self, n, n_min) -> list:
        result = []
        current = n
        repeats = 1
        max_repeats = 4
        while current >= n_min:
            result.extend([current] * min(repeats, max_repeats))
            current = current // 2
            repeats = repeats + 1
        return result

    def _get_hidden_sizes(self):
        n_low = max(2, int(self._L_in / 4))  # Ensure minimum reasonable size
        n_high = max(self.hparams.l1, 2 * n_low)
        hidden_sizes = self._generate_div2_list(n_high, n_low)
        return hidden_sizes

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.layers(x)
        return x

    def _calculate_loss(self, batch):
        x, y = batch
        y = y.view(len(y), 1)
        y_hat = self(x)
        loss = self.metric(y_hat, y)
        return loss

    def training_step(self, batch: tuple) -> torch.Tensor:
        val_loss = self._calculate_loss(batch)
        return val_loss

    def validation_step(self, batch: tuple, batch_idx: int, prog_bar: bool = False) -> torch.Tensor:
        val_loss = self._calculate_loss(batch)
        self.log("val_loss", val_loss, prog_bar=prog_bar)
        self.log("hp_metric", val_loss, prog_bar=prog_bar)
        return val_loss

    def test_step(self, batch: tuple, batch_idx: int, prog_bar: bool = False) -> torch.Tensor:
        val_loss = self._calculate_loss(batch)
        self.log("val_loss", val_loss, prog_bar=prog_bar)
        self.log("hp_metric", val_loss, prog_bar=prog_bar)
        return val_loss

    def predict_step(self, batch: tuple, batch_idx: int, prog_bar: bool = False) -> torch.Tensor:
        x, y = batch
        yhat = self(x)
        y = y.view(len(y), 1)
        yhat = yhat.view(len(yhat), 1)
        return (x, y, yhat)

    def configure_optimizers(self):
        optimizer = optimizer_handler(
            optimizer_name=self.hparams.optimizer,
            params=self.parameters(),
            lr_mult=self.hparams.lr_mult
        )
        # Dynamic creation of milestones based on the number of epochs.
        num_milestones = 3  # Number of milestones to divide the epochs
        milestones = [int(self.hparams.epochs / (num_milestones + 1) * (i + 1)) for i in range(num_milestones)]
        # Print milestones for debug purposes
        print(f"Milestones: {milestones}")
        # Create MultiStepLR scheduler with dynamic milestones and learning rate multiplier.
        scheduler = optim.lr_scheduler.MultiStepLR(
            optimizer,
            milestones=milestones,
            gamma=0.1  # Decay factor
        )
        # Learning rate scheduler configuration
        lr_scheduler_config = {
            "scheduler": scheduler,
            "interval": "epoch",  # Adjust learning rate per epoch
            "frequency": 1,  # Apply the scheduler at every epoch
        }
        return {"optimizer": optimizer, "lr_scheduler": lr_scheduler_config}

59.2.2 my_hyperdict.py
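The path resolution used by the loader in this subsection can be tried in isolation. The sketch below is a simplified stand-in that does not inherit from spotpython's `FileConfig`; the class name `JsonDictLoader`, the fallback to the current working directory, and the temporary directory are assumptions made for the example.

```python
import json
import pathlib
import tempfile


class JsonDictLoader:
    """Simplified stand-in for MyHyperDict (no spotpython base class)."""

    def __init__(self, filename: str = "my_hyper_dict.json", directory=None):
        self.filename = filename
        self.directory = directory
        self.hyper_dict = self.load()

    @property
    def path(self) -> pathlib.Path:
        # An explicit directory wins. Otherwise this sketch falls back to the
        # current working directory (the original uses the module's folder).
        if self.directory:
            return pathlib.Path(self.directory).joinpath(self.filename)
        return pathlib.Path.cwd().joinpath(self.filename)

    def load(self) -> dict:
        with open(self.path, "r") as f:
            return json.load(f)


# Write a tiny dictionary to a temporary directory and load it back.
with tempfile.TemporaryDirectory() as tmp:
    example = {"MyResNet": {"l1": {"type": "int", "lower": 3, "upper": 10}}}
    pathlib.Path(tmp, "my_hyper_dict.json").write_text(json.dumps(example))
    loader = JsonDictLoader(directory=tmp)
    loaded = loader.hyper_dict

print(loaded["MyResNet"]["l1"]["upper"])
```

Because the dictionary is loaded in the constructor, a missing or malformed JSON file surfaces as soon as the object is created rather than later during tuning.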

import json
from spotpython.data import base
import pathlib


class MyHyperDict(base.FileConfig):
    """User specified hyperparameter dictionary.

    This class extends the FileConfig class to provide a dictionary for storing hyperparameters.

    Attributes:
        filename (str):
            The name of the file where the hyperparameters are stored.
    """

    def __init__(
        self,
        filename: str = "my_hyper_dict.json",
        directory: None = None,
    ) -> None:
        super().__init__(filename=filename, directory=directory)
        self.filename = filename
        self.directory = directory
        self.hyper_dict = self.load()

    @property
    def path(self):
        if self.directory:
            return pathlib.Path(self.directory).joinpath(self.filename)
        return pathlib.Path(__file__).parent.joinpath(self.filename)

    def load(self) -> dict:
        """Load the hyperparameters from the file.

        Returns:
            dict: A dictionary containing the hyperparameters.

        Examples:
            # Assume the user specified file `my_hyper_dict.json` is in the `./hyperdict/` directory.
            >>> user_lhd = MyHyperDict(filename='my_hyper_dict.json', directory='./hyperdict/')
        """
        with open(self.path, "r") as f:
            d = json.load(f)
        return d

59.2.3 my_hyperdict.json
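Each entry of the JSON file in this subsection follows the same pattern: numeric parameters carry `lower` and `upper` bounds, and `factor` parameters additionally list their `levels`, with the bounds acting as indices into that list. The following sketch checks this convention on a shortened copy of the dictionary. The helper `check_entry` is hypothetical, and reading the bounds as zero-based list indices is an assumption inferred from the values shown in this chapter.

```python
import json

# Shortened copy of the dictionary from this subsection.
raw = """
{
  "MyResNet": {
    "l1": {"type": "int", "default": 3,
           "transform": "transform_power_2_int", "lower": 3, "upper": 10},
    "dropout_prob": {"type": "float", "default": 0.01,
                     "transform": "None", "lower": 0.0, "upper": 0.25},
    "initialization": {
      "levels": ["Default", "kaiming_uniform", "kaiming_normal",
                 "xavier_uniform", "xavier_normal"],
      "type": "factor", "default": "Default", "transform": "None",
      "core_model_parameter_type": "str", "lower": 0, "upper": 4
    }
  }
}
"""


def check_entry(name: str, entry: dict) -> str:
    """Hypothetical sanity check for one hyperparameter entry."""
    if entry["type"] == "factor":
        levels = entry["levels"]
        # Bounds are read as zero-based indices into `levels`.
        assert entry["lower"] == 0 and entry["upper"] == len(levels) - 1, name
        assert entry["default"] in levels, name
        return f"{name}: {len(levels)} levels"
    # Numeric entries: the default must lie within the bounds.
    assert entry["lower"] <= entry["default"] <= entry["upper"], name
    return f"{name}: [{entry['lower']}, {entry['upper']}]"


hyper_dict = json.loads(raw)["MyResNet"]
report = [check_entry(name, entry) for name, entry in hyper_dict.items()]
print("\n".join(report))
```

A check of this kind is a cheap way to catch an `upper` bound that no longer matches the number of `levels` after the list has been edited by hand.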

{
    "MyResNet": {
        "l1": {
            "type": "int",
            "default": 3,
            "transform": "transform_power_2_int",
            "lower": 3,
            "upper": 10
        },
        "epochs": {
            "type": "int",
            "default": 4,
            "transform": "transform_power_2_int",
            "lower": 4,
            "upper": 9
        },
        "batch_size": {
            "type": "int",
            "default": 4,
            "transform": "transform_power_2_int",
            "lower": 1,
            "upper": 6
        },
        "act_fn": {
            "levels": [
                "Sigmoid",
                "Tanh",
                "ReLU",
                "LeakyReLU",
                "ELU",
                "Swish"
            ],
            "type": "factor",
            "default": "ReLU",
            "transform": "None",
            "class_name": "spotpython.torch.activation",
            "core_model_parameter_type": "instance()",
            "lower": 0,
            "upper": 5
        },
        "optimizer": {
            "levels": [
                "Adadelta",
                "Adagrad",
                "Adam",
                "AdamW",
                "SparseAdam",
                "Adamax",
                "ASGD",
                "NAdam",
                "RAdam",
                "RMSprop",
                "Rprop",
                "SGD"
            ],
            "type": "factor",
            "default": "SGD",
            "transform": "None",
            "class_name": "torch.optim",
            "core_model_parameter_type": "str",
            "lower": 0,
            "upper": 11
        },
        "dropout_prob": {
            "type": "float",
            "default": 0.01,
            "transform": "None",
            "lower": 0.0,
            "upper": 0.25
        },
        "lr_mult": {
            "type": "float",
            "default": 1.0,
            "transform": "None",
            "lower": 0.1,
            "upper": 10.0
        },
        "patience": {
            "type": "int",
            "default": 2,
            "transform": "transform_power_2_int",
            "lower": 2,
            "upper": 6
        },
        "initialization": {
            "levels": [
                "Default",
                "kaiming_uniform",
                "kaiming_normal",
                "xavier_uniform",
                "xavier_normal"
            ],
            "type": "factor",
            "default": "Default",
            "transform": "None",
            "core_model_parameter_type": "str",
            "lower": 0,
            "upper": 4
        }
    }
}

59.3 Summary

This section introduced the basic setup of hyperparameter tuning with spotpython and PyTorch Lightning, using a user-specified ResNet model for the Diabetes data set.