import numpy as np

from math import inf

from spotpython.fun.objectivefunctions import Analytical

from spotpython.spot import Spot

from scipy.optimize import shgo

from scipy.optimize import direct

from scipy.optimize import differential_evolution

from scipy.optimize import dual_annealing

from scipy.optimize import basinhopping

from spotpython.utils.init import fun_control_init, design_control_init, optimizer_control_init

15 Sequential Parameter Optimization: Using scipy Optimizers

As a default optimizer, spotpython uses differential_evolution from the scipy.optimize package. Alternatively, any other optimizer from the scipy.optimize package can be used. This chapter describes how different optimizers from the scipy.optimize package can be used on the surrogate. The optimization algorithms are documented at https://docs.scipy.org/doc/scipy/reference/optimize.html

15.1 The Objective Function Branin

The spotpython package provides several classes of objective functions. We will use an analytical objective function, i.e., a function that can be described by a (closed) formula. Here we will use the Branin function. The two-dimensional Branin function is

\[
y = a (x_2 - b x_1^2 + c x_1 - r)^2 + s (1 - t) \cos(x_1) + s,
\]

where the values of \(a\), \(b\), \(c\), \(r\), \(s\) and \(t\) are: \(a = 1\), \(b = 5.1 / (4\pi^2)\), \(c = 5 / \pi\), \(r = 6\), \(s = 10\) and \(t = 1 / (8\pi)\).

It has three global minima: \(f(x) = 0.397887\) at \((-\pi, 12.275)\), \((\pi, 2.275)\), and \((9.42478, 2.475)\).

Input Domain: This function is usually evaluated on the square \((x_1, x_2) \in [-5, 10] \times [0, 15]\).
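The formula and the three minima stated above can be checked with a few lines of plain Python. This is a minimal sketch that is independent of spotpython: the function name `branin` and the constant `GLOBAL_MINIMA` are introduced here for illustration only and are not part of the package.

```python
import math


def branin(x1, x2):
    """Evaluate the 2-dim Branin function with the constants given above."""
    a = 1.0
    b = 5.1 / (4.0 * math.pi ** 2)
    c = 5.0 / math.pi
    r = 6.0
    s = 10.0
    t = 1.0 / (8.0 * math.pi)
    return a * (x2 - b * x1 ** 2 + c * x1 - r) ** 2 + s * (1.0 - t) * math.cos(x1) + s


# The three global minima listed in the text (illustrative constant).
GLOBAL_MINIMA = [(-math.pi, 12.275), (math.pi, 2.275), (9.42478, 2.475)]

for x1, x2 in GLOBAL_MINIMA:
    print(f"f({x1:.5f}, {x2:.3f}) = {branin(x1, x2):.6f}")
```

At each of the three points the squared term vanishes and \(\cos(x_1) = -1\), so the value reduces to \(s \cdot t = 10 / (8\pi)\), which is the minimum value quoted above.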

from spotpython.fun.objectivefunctions import Analytical

lower = np.array([-5, 0])

upper = np.array([10, 15])

fun = Analytical(seed=123).fun_branin

15.2 The Optimizer

Differential Evolution (DE) from the scipy.optimize package, see https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.differential_evolution.html#scipy.optimize.differential_evolution, is the default optimizer for the search on the surrogate. Other optimizers from scipy.optimize can also be used in spotpython, see https://docs.scipy.org/doc/scipy/reference/optimize.html#global-optimization:

- dual_annealing

- direct

- shgo

- basinhopping

These optimizers can be selected as follows:

from scipy.optimize import differential_evolution

optimizer = differential_evolution

As noted above, we will use differential_evolution. The optimizer can use up to 1000 iterations. This value is passed to the differential_evolution method as the argument maxiter (int), which defines the maximum number of generations over which the entire differential evolution population is evolved, see https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.differential_evolution.html#scipy.optimize.differential_evolution
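To see what maxiter controls, differential_evolution can be called directly. The sketch below is only an illustration: it applies the optimizer to the analytical Branin function itself, whereas spotpython applies it to the surrogate. The helper `branin`, the `bounds` list, and the seed value are assumptions made for this example.

```python
import numpy as np
from scipy.optimize import differential_evolution


def branin(x):
    """2-dim Branin function; x is a sequence (x1, x2). Illustrative helper."""
    x1, x2 = x
    b = 5.1 / (4.0 * np.pi ** 2)
    c = 5.0 / np.pi
    t = 1.0 / (8.0 * np.pi)
    return (x2 - b * x1 ** 2 + c * x1 - 6.0) ** 2 + 10.0 * (1.0 - t) * np.cos(x1) + 10.0


# Same box as lower/upper in the chapter, written as (min, max) pairs.
bounds = [(-5.0, 10.0), (0.0, 15.0)]

# maxiter limits the number of generations, not the number of function calls.
result = differential_evolution(branin, bounds, maxiter=1000, seed=123)

print("best x:     ", result.x)
print("best f(x):  ", result.fun)
print("generations:", result.nit)
print("evaluations:", result.nfev)
```

Because maxiter counts generations, the number of function evaluations (result.nfev) is usually much larger than the number of generations (result.nit). The run normally stops well before 1000 generations, once the convergence tolerance is met.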

Similar to the one-dimensional case, which is discussed in Section 12.25, we can use TensorBoard to monitor the progress of the optimization. We will use a similar code, only the prefix is different:

fun_control = fun_control_init(
    lower=lower,
    upper=upper,
    fun_evals=20,
    PREFIX="04_DE_"
)

spot_de = Spot(fun=fun,
               fun_control=fun_control)

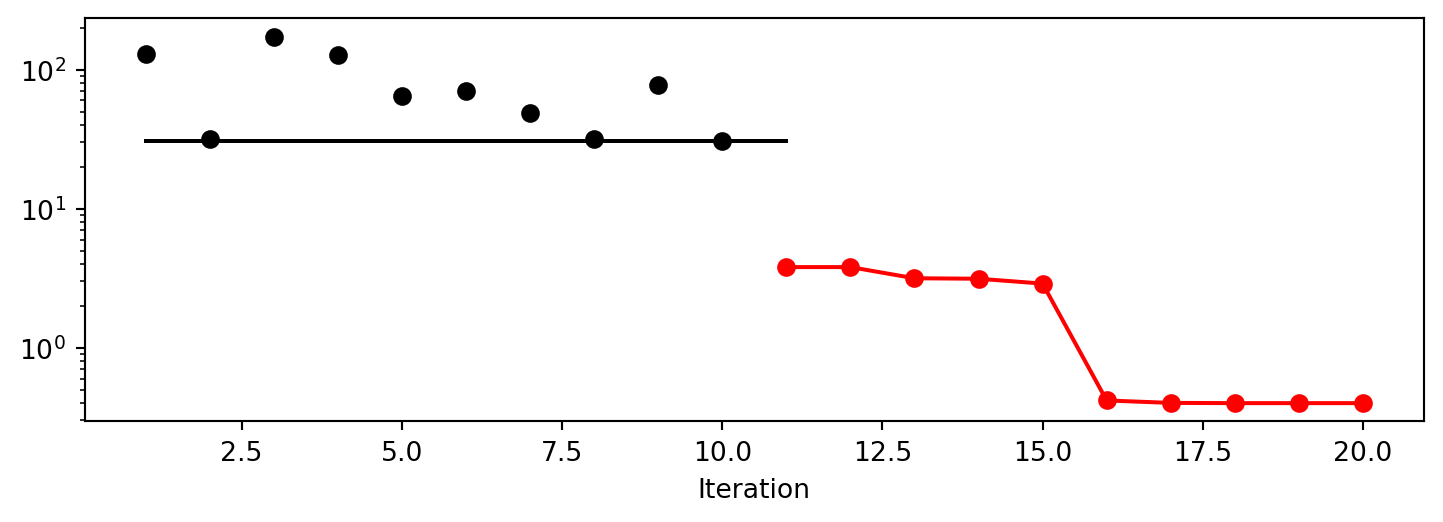

spot_de.run()

spotpython tuning: 3.8004525569254524 [######----] 55.00%. Success rate: 100.00%

spotpython tuning: 3.8004525569254524 [######----] 60.00%. Success rate: 50.00%

spotpython tuning: 3.1644860748767325 [######----] 65.00%. Success rate: 66.67%

spotpython tuning: 3.1405013986990866 [#######---] 70.00%. Success rate: 75.00%

spotpython tuning: 3.0703215884465367 [########--] 75.00%. Success rate: 80.00%

spotpython tuning: 2.934924209020851 [########--] 80.00%. Success rate: 83.33%

spotpython tuning: 0.4523710119285411 [########--] 85.00%. Success rate: 85.71%

spotpython tuning: 0.4335054313511062 [#########-] 90.00%. Success rate: 87.50%

spotpython tuning: 0.39841709590731966 [##########] 95.00%. Success rate: 88.89%

spotpython tuning: 0.39796888979727996 [##########] 100.00%. Success rate: 90.00% Done...

Experiment saved to 04_DE__res.pkl

<spotpython.spot.spot.Spot at 0x106e58050>

15.2.1 TensorBoard

If the PREFIX argument in fun_control_init() is not None (as above, where the prefix was set to 04_DE_), we can start TensorBoard in the background with the following command:

tensorboard --logdir="./runs"We can access the TensorBoard web server with the following URL:

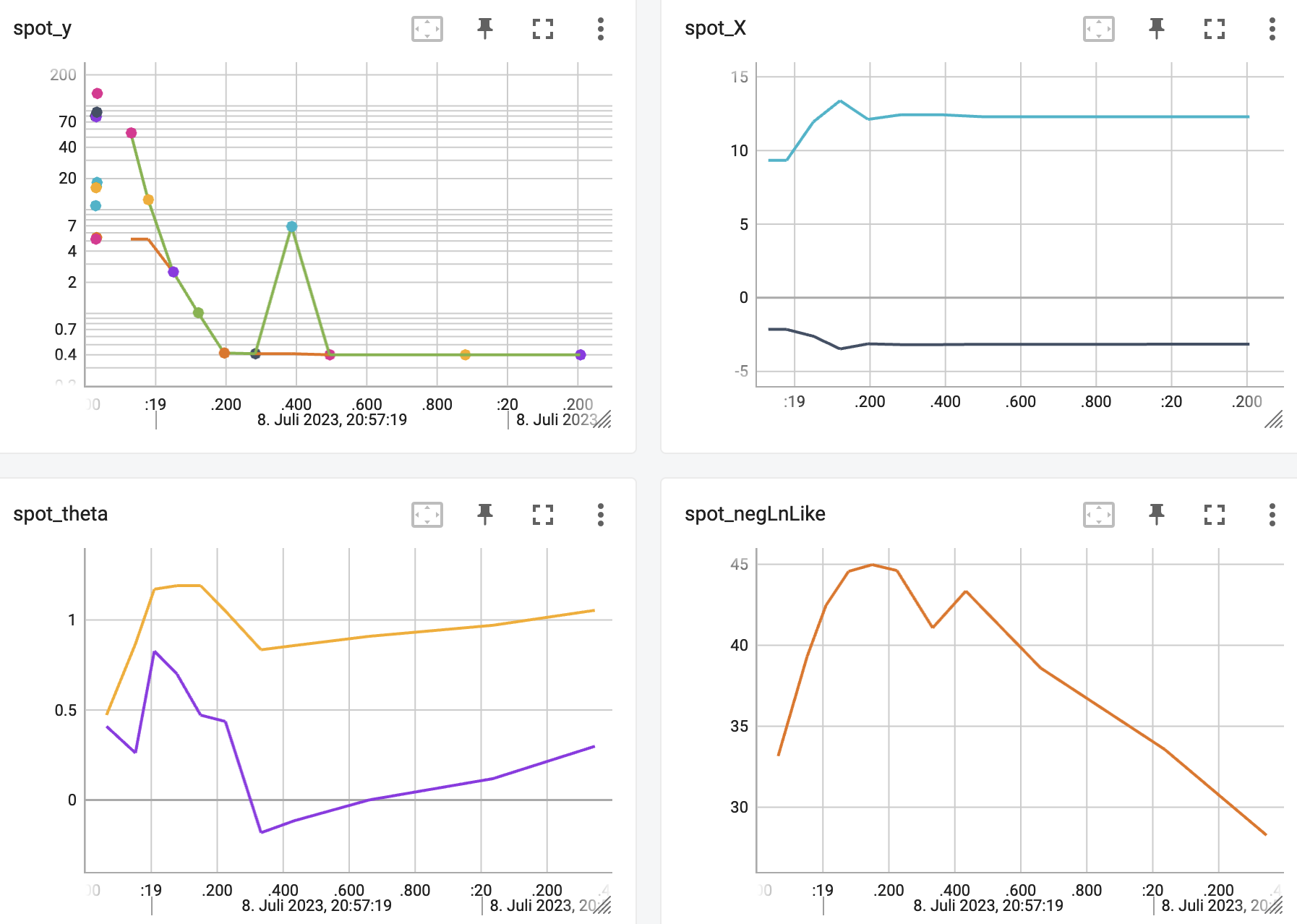

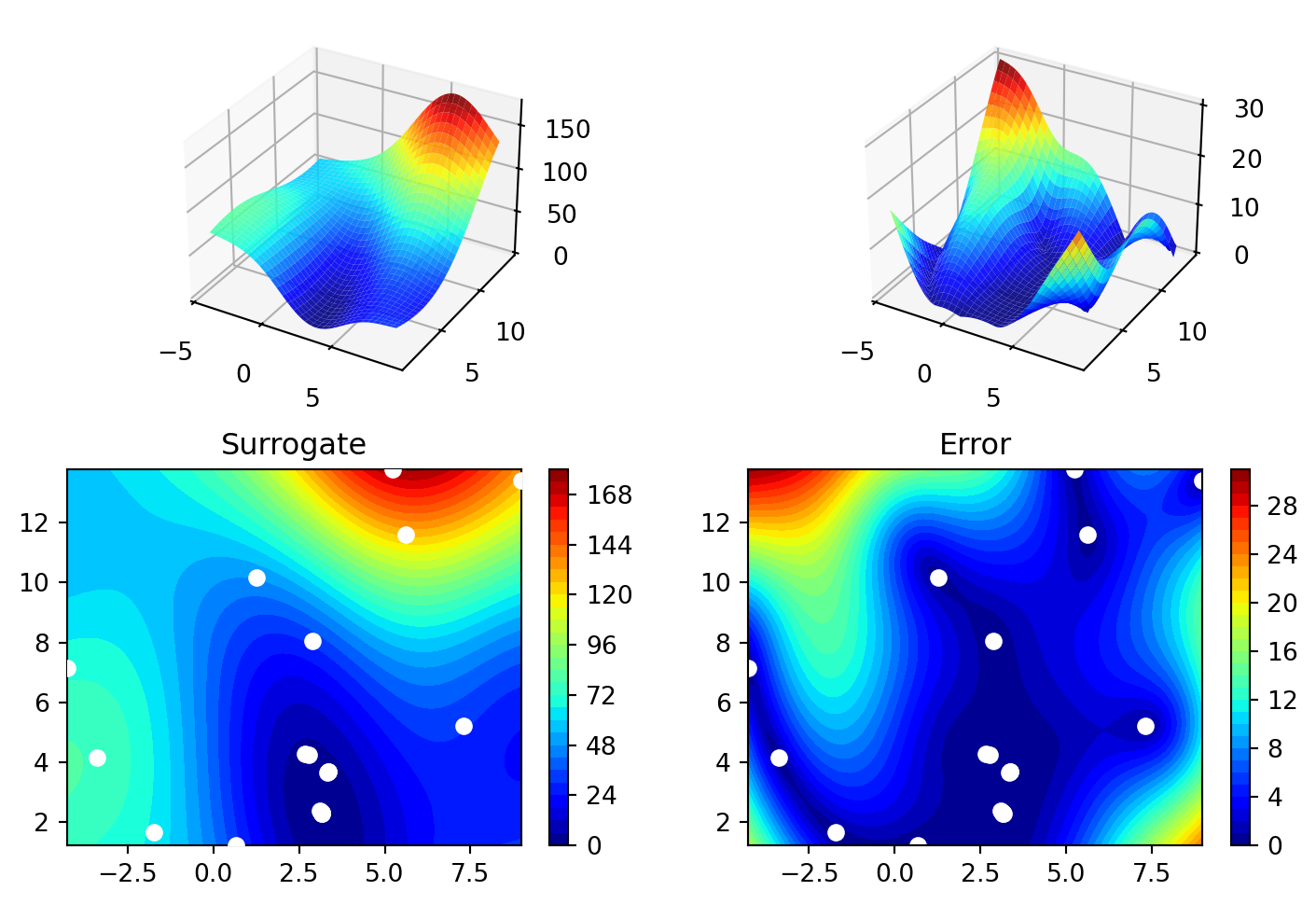

http://localhost:6006/The TensorBoard plot illustrates how spotpython can be used as a microscope for the internal mechanisms of the surrogate-based optimization process. Here, one important parameter, the learning rate \(\theta\) of the Kriging surrogate is plotted against the number of optimization steps.

15.3 Print the Results

spot_de.print_results()

min y: 0.39796888979727996

x0: 3.140132672900477

x1: 2.26769501627711

[['x0', np.float64(3.140132672900477)], ['x1', np.float64(2.26769501627711)]]

15.4 Show the Progress

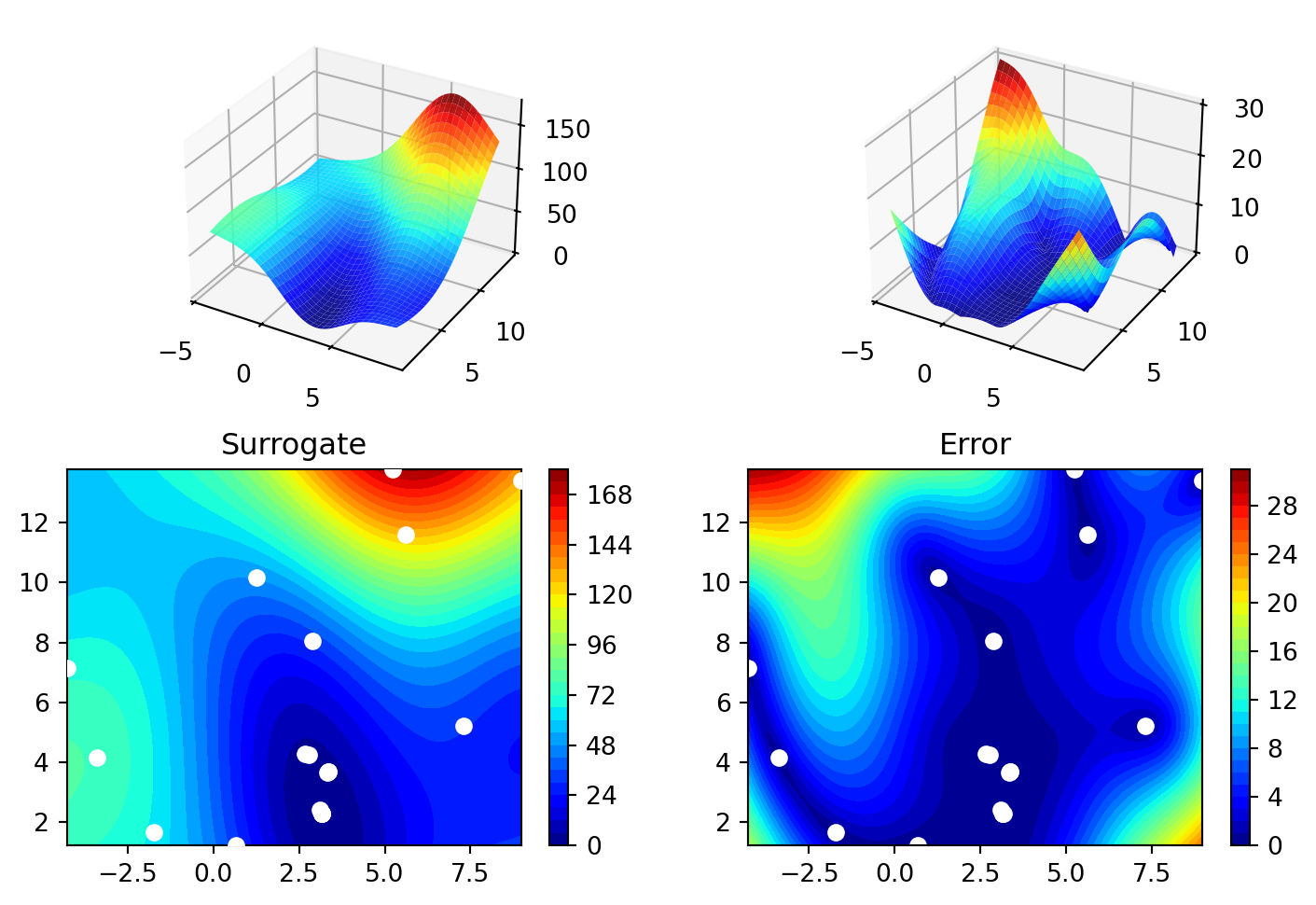

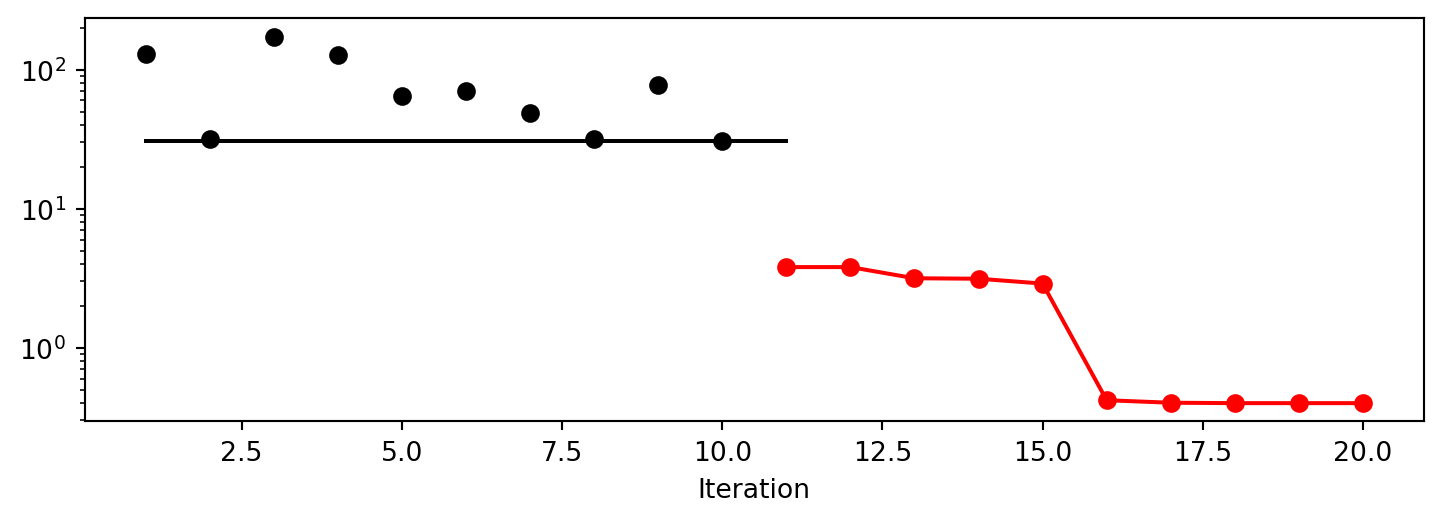

spot_de.plot_progress(log_y=True)

spot_de.surrogate.plot()

15.5 Exercises

15.5.1 dual_annealing

- Describe the optimization algorithm, see scipy.optimize.dual_annealing.

- Use the algorithm as an optimizer on the surrogate.

We can run spotpython with the dual_annealing optimizer as follows:

spot_da = Spot(fun=fun,
               fun_control=fun_control,
               optimizer=dual_annealing)

spot_da.run()

spot_da.print_results()

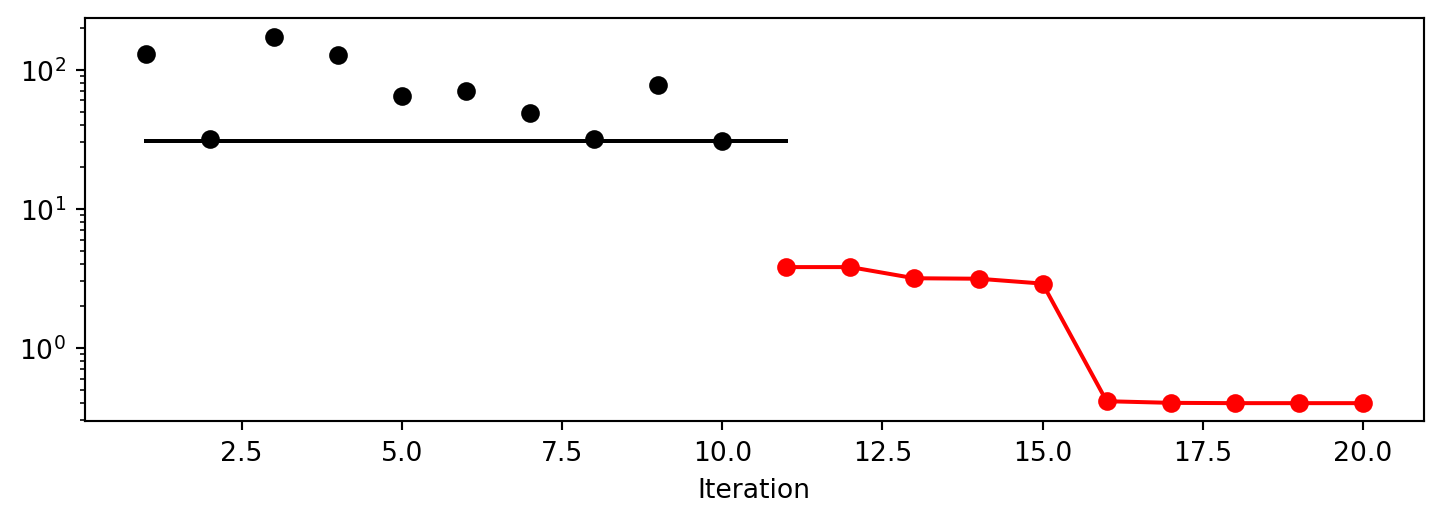

spot_da.plot_progress(log_y=True)

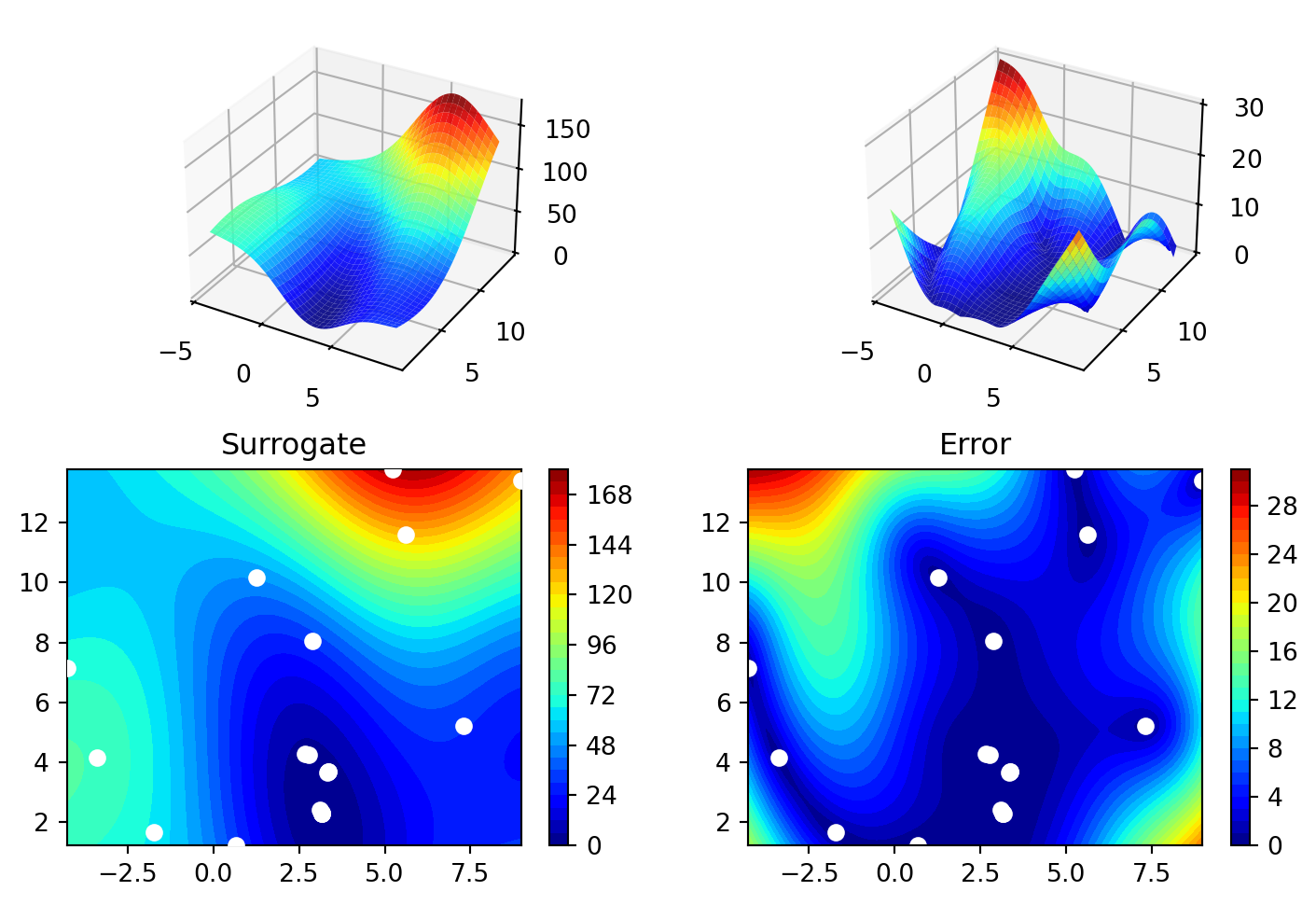

spot_da.surrogate.plot()

spotpython tuning: 3.800454182091854 [######----] 55.00%. Success rate: 100.00%

spotpython tuning: 3.800454182091854 [######----] 60.00%. Success rate: 50.00%

spotpython tuning: 3.159404256311033 [######----] 65.00%. Success rate: 66.67%

spotpython tuning: 3.1344112546586977 [#######---] 70.00%. Success rate: 75.00%

spotpython tuning: 3.0492833293320416 [########--] 75.00%. Success rate: 80.00%

spotpython tuning: 2.9070231530180237 [########--] 80.00%. Success rate: 83.33%

spotpython tuning: 0.44694718755548735 [########--] 85.00%. Success rate: 85.71%

spotpython tuning: 0.4275929669767766 [#########-] 90.00%. Success rate: 87.50%

spotpython tuning: 0.3999639038471443 [##########] 95.00%. Success rate: 88.89%

spotpython tuning: 0.3981150855246529 [##########] 100.00%. Success rate: 90.00% Done...

Experiment saved to 04_DE__res.pkl

min y: 0.3981150855246529

x0: 3.135596675425474

x1: 2.2722562497899212

15.5.2 direct

- Describe the optimization algorithm

- Use the algorithm as an optimizer on the surrogate

We can run spotpython with the direct optimizer as follows:

spot_di = Spot(fun=fun,
               fun_control=fun_control,
               optimizer=direct)

spot_di.run()

spot_di.print_results()

spot_di.plot_progress(log_y=True)

spot_di.surrogate.plot()

spotpython tuning: 3.808603529901438 [######----] 55.00%. Success rate: 100.00%

spotpython tuning: 3.808603529901438 [######----] 60.00%. Success rate: 50.00%

spotpython tuning: 3.19804562480188 [######----] 65.00%. Success rate: 66.67%

spotpython tuning: 3.17767194117126 [#######---] 70.00%. Success rate: 75.00%

spotpython tuning: 3.165751373773567 [########--] 75.00%. Success rate: 80.00%

spotpython tuning: 3.133265047041581 [########--] 80.00%. Success rate: 83.33%

spotpython tuning: 3.076588713032807 [########--] 85.00%. Success rate: 85.71%

spotpython tuning: 0.5028918360432506 [#########-] 90.00%. Success rate: 87.50%

spotpython tuning: 0.45130981703175976 [##########] 95.00%. Success rate: 88.89%

spotpython tuning: 0.3989943920885928 [##########] 100.00%. Success rate: 90.00% Done...

Experiment saved to 04_DE__res.pkl

min y: 0.3989943920885928

x0: 3.1264288980338364

x1: 2.28509373571102

15.5.3 shgo

- Describe the optimization algorithm

- Use the algorithm as an optimizer on the surrogate

We can run spotpython with the shgo optimizer as follows:

spot_sh = Spot(fun=fun,
               fun_control=fun_control,
               optimizer=shgo)

spot_sh.run()

spot_sh.print_results()

spot_sh.plot_progress(log_y=True)

spot_sh.surrogate.plot()

spotpython tuning: 3.8004550272025437 [######----] 55.00%. Success rate: 100.00%

spotpython tuning: 3.8004550272025437 [######----] 60.00%. Success rate: 50.00%

spotpython tuning: 3.1593634068529015 [######----] 65.00%. Success rate: 66.67%

spotpython tuning: 3.1343990956358345 [#######---] 70.00%. Success rate: 75.00%

spotpython tuning: 3.0512879835062634 [########--] 75.00%. Success rate: 80.00%

spotpython tuning: 2.904985437740331 [########--] 80.00%. Success rate: 83.33%

spotpython tuning: 0.4470562902385673 [########--] 85.00%. Success rate: 85.71%

spotpython tuning: 0.42244233288071875 [#########-] 90.00%. Success rate: 87.50%

spotpython tuning: 0.3999188404619609 [##########] 95.00%. Success rate: 88.89%

spotpython tuning: 0.3981002885577922 [##########] 100.00%. Success rate: 90.00% Done...

Experiment saved to 04_DE__res.pkl

min y: 0.3981002885577922

x0: 3.1357673421870387

x1: 2.272475458942683

15.5.4 basinhopping

- Describe the optimization algorithm

- Use the algorithm as an optimizer on the surrogate

We can run spotpython with the basinhopping optimizer as follows:

spot_bh = Spot(fun=fun,
               fun_control=fun_control,
               optimizer=basinhopping)

spot_bh.run()

spot_bh.print_results()

spot_bh.plot_progress(log_y=True)

spot_bh.surrogate.plot()

spotpython tuning: 3.8004406841429414 [######----] 55.00%. Success rate: 100.00%

spotpython tuning: 3.8004406841429414 [######----] 60.00%. Success rate: 50.00%

spotpython tuning: 3.1591903142692317 [######----] 65.00%. Success rate: 66.67%

spotpython tuning: 3.128117693244988 [#######---] 70.00%. Success rate: 75.00%

spotpython tuning: 3.0449391022276853 [########--] 75.00%. Success rate: 80.00%

spotpython tuning: 2.9085008044527525 [########--] 80.00%. Success rate: 83.33%

spotpython tuning: 0.44636813870454084 [########--] 85.00%. Success rate: 85.71%

spotpython tuning: 0.4203418629420792 [#########-] 90.00%. Success rate: 87.50%

spotpython tuning: 0.3999877661836013 [##########] 95.00%. Success rate: 88.89%

spotpython tuning: 0.39825689415986787 [##########] 100.00%. Success rate: 90.00% Done...

Experiment saved to 04_DE__res.pkl

min y: 0.39825689415986787

x0: 3.133951975326067

x1: 2.271518851806762

15.5.5 Performance Comparison

Compare the performance and run time of the 5 different optimizers:

- differential_evolution

- dual_annealing

- direct

- shgo

- basinhopping
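As a starting point for such a comparison, the five optimizers can be timed outside of spotpython. The sketch below is a rough baseline under several assumptions: the optimizers run on the analytical Branin function rather than on the surrogate, `branin`, `bounds`, and `x0` are illustrative names, the seeds are arbitrary, and `direct` requires a recent SciPy release. Note that basinhopping takes a start point instead of bounds, so it may leave the input domain.

```python
import time

import numpy as np
from scipy.optimize import (basinhopping, differential_evolution, direct,
                            dual_annealing, shgo)


def branin(x):
    """2-dim Branin function; x is a sequence (x1, x2). Illustrative helper."""
    x1, x2 = x
    b = 5.1 / (4.0 * np.pi ** 2)
    c = 5.0 / np.pi
    t = 1.0 / (8.0 * np.pi)
    return (x2 - b * x1 ** 2 + c * x1 - 6.0) ** 2 + 10.0 * (1.0 - t) * np.cos(x1) + 10.0


bounds = [(-5.0, 10.0), (0.0, 15.0)]
x0 = np.array([2.5, 7.5])  # centre of the domain, used by basinhopping only

# Each entry wraps one optimizer call with its own calling convention.
runs = {
    "differential_evolution": lambda: differential_evolution(branin, bounds, seed=123),
    "dual_annealing": lambda: dual_annealing(branin, bounds, seed=123),
    "direct": lambda: direct(branin, bounds),
    "shgo": lambda: shgo(branin, bounds),
    "basinhopping": lambda: basinhopping(branin, x0, seed=123),
}

results = {}
for name, run in runs.items():
    start = time.perf_counter()
    res = run()
    elapsed = time.perf_counter() - start
    results[name] = (res.x, res.fun, elapsed)
    print(f"{name:>22}: f = {res.fun:.6f} at {np.round(res.x, 4)} in {elapsed:.3f} s")
```

Timings obtained this way depend on the machine and the SciPy version, and they measure the optimizers on the cheap analytical function only. Inside spotpython, the cost of fitting and evaluating the surrogate is added on top.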

The Branin function has three global minima with \(f(x) = 0.397887\), located at \((-\pi, 12.275)\), \((\pi, 2.275)\), and \((9.42478, 2.475)\).

- Which optima are found by the optimizers?

- Does the seed argument in fun = Analytical(seed=123).fun_branin change this behavior?
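One simple way to decide which optimum a run has found is to compute the Euclidean distance from the reported best point to each of the three known minima. The following sketch uses the best points printed by print_results() in this chapter, rounded to six digits. The names `GLOBAL_MINIMA`, `reported`, and `nearest_minimum` are hypothetical helpers and not part of spotpython.

```python
import math

# Known global minima of the Branin function, keyed by a readable label.
GLOBAL_MINIMA = {
    "(-pi, 12.275)": (-math.pi, 12.275),
    "(pi, 2.275)": (math.pi, 2.275),
    "(9.42478, 2.475)": (9.42478, 2.475),
}

# Best points (x0, x1) from the print_results() output above, rounded.
reported = {
    "differential_evolution": (3.140133, 2.267695),
    "dual_annealing": (3.135597, 2.272256),
    "direct": (3.126429, 2.285094),
    "shgo": (3.135767, 2.272475),
    "basinhopping": (3.133952, 2.271519),
}


def nearest_minimum(point):
    """Return (label, location) of the known minimum closest to point."""
    return min(GLOBAL_MINIMA.items(), key=lambda item: math.dist(point, item[1]))


for name, point in reported.items():
    label, location = nearest_minimum(point)
    distance = math.dist(point, location)
    print(f"{name:>22}: nearest minimum {label}, distance {distance:.4f}")
```

The same helper can be applied to runs with a different seed to check whether another minimum is reached.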

15.6 Jupyter Notebook

- The Jupyter-Notebook of this chapter is available on GitHub in the Hyperparameter-Tuning-Cookbook Repository