import numpy as np

from math import inf

from spotpython.fun.objectivefunctions import Analytical

from spotpython.spot import Spot

from scipy.optimize import shgo

from scipy.optimize import direct

from scipy.optimize import differential_evolution

import matplotlib.pyplot as pltAppendix D — Documentation of the Sequential Parameter Optimization

This document describes the Spot features. The official spotpython documentation can be found here: https://sequential-parameter-optimization.github.io/spotpython/.

D.1 An Initial Example

The spotpython package provides several classes of objective functions. We will use an analytical objective function, i.e., a function that can be described by a (closed) formula: \[

f(x) = x^2.

\]

fun = Analytical().fun_spherex = np.linspace(-1,1,100).reshape(-1,1)

y = fun(x)

plt.figure()

plt.plot(x,y, "k")

plt.show()

from spotpython.utils.init import fun_control_init, design_control_init, surrogate_control_init, optimizer_control_init

spot_1 = Spot(fun=fun,

fun_control=fun_control_init(

lower = np.array([-10]),

upper = np.array([100]),

fun_evals = 7,

fun_repeats = 1,

max_time = inf,

noise = False,

tolerance_x = np.sqrt(np.spacing(1)),

var_type=["num"],

infill_criterion = "y",

n_points = 1,

seed=123,

log_level = 50),

design_control=design_control_init(

init_size=5,

repeats=1),

surrogate_control=surrogate_control_init(

method="interpolation",

min_theta=-4,

max_theta=3,

n_theta=1,

model_optimizer=differential_evolution,

model_fun_evals=10000))

spot_1.run()spotpython tuning: 51.152288363788145 [#########-] 85.71%

spotpython tuning: 21.640274267756638 [##########] 100.00% Done...

Experiment saved to 000_res.pkl<spotpython.spot.spot.Spot at 0x1097e4440>D.2 Organization

Spot organizes the surrogate based optimization process in four steps:

- Selection of the objective function:

fun. - Selection of the initial design:

design. - Selection of the optimization algorithm:

optimizer. - Selection of the surrogate model:

surrogate.

For each of these steps, the user can specify an object:

from spotpython.fun.objectivefunctions import Analytical

fun = Analytical().fun_sphere

from spotpython.design.spacefilling import SpaceFilling

design = SpaceFilling(2)

from scipy.optimize import differential_evolution

optimizer = differential_evolution

from spotpython.surrogate.kriging import Kriging

surrogate = Kriging()For each of these steps, the user can specify a dictionary of control parameters.

fun_controldesign_controloptimizer_controlsurrogate_control

Each of these dictionaries has an initialzaion method, e.g., fun_control_init(). The initialization methods set the default values for the control parameters.

- The specification of an lower bound in

fun_controlis mandatory.

from spotpython.utils.init import fun_control_init, design_control_init, optimizer_control_init, surrogate_control_init

fun_control=fun_control_init(lower=np.array([-1, -1]),

upper=np.array([1, 1]))

design_control=design_control_init()

optimizer_control=optimizer_control_init()

surrogate_control=surrogate_control_init()D.3 The Spot Object

Based on the definition of the fun, design, optimizer, and surrogate objects, and their corresponding control parameter dictionaries, fun_control, design_control, optimizer_control, and surrogate_control, the spot object can be build as follows:

from spotpython.spot import Spot

spot_tuner = Spot(fun=fun,

fun_control=fun_control,

design_control=design_control,

optimizer_control=optimizer_control,

surrogate_control=surrogate_control)D.4 Run

spot_tuner.run()spotpython tuning: 0.0013050614212698486 [#######---] 73.33%

spotpython tuning: 0.0003479187873901382 [########--] 80.00%

spotpython tuning: 0.00022767416623665655 [#########-] 86.67%

spotpython tuning: 0.00020787497784734184 [#########-] 93.33%

spotpython tuning: 0.00020393508736265477 [##########] 100.00% Done...

Experiment saved to 000_res.pkl<spotpython.spot.spot.Spot at 0x1601bbad0>D.5 Print the Results

spot_tuner.print_results()min y: 0.00020393508736265477

x0: 0.014161858193549292

x1: 0.0018376234294478113[['x0', np.float64(0.014161858193549292)],

['x1', np.float64(0.0018376234294478113)]]D.6 Show the Progress

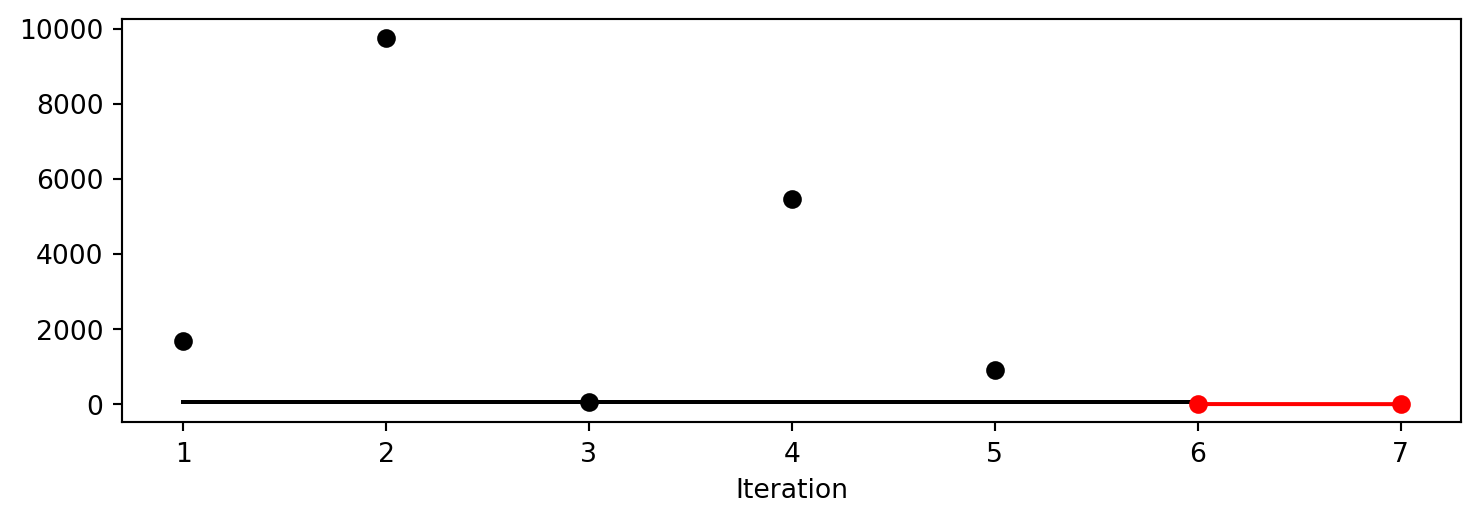

spot_tuner.plot_progress()

D.7 Visualize the Surrogate

- The plot method of the

krigingsurrogate is used. - Note: the plot uses the interval defined by the ranges of the natural variables.

spot_tuner.surrogate.plot()

D.8 Run With a Specific Start Design

To pass a specific start design, use the X_start argument of the run method.

spot_x0 = Spot(fun=fun,

fun_control=fun_control_init(

lower = np.array([-10]),

upper = np.array([100]),

fun_evals = 7,

fun_repeats = 1,

max_time = inf,

noise = False,

tolerance_x = np.sqrt(np.spacing(1)),

var_type=["num"],

infill_criterion = "y",

n_points = 1,

seed=123,

log_level = 50),

design_control=design_control_init(

init_size=5,

repeats=1),

surrogate_control=surrogate_control_init(

method="interpolation",

min_theta=-4,

max_theta=3,

n_theta=1,

model_optimizer=differential_evolution,

model_fun_evals=10000))

spot_x0.run(X_start=np.array([0.5, -0.5]))

spot_x0.plot_progress()spotpython tuning: 51.152288363788145 [#########-] 85.71%

spotpython tuning: 21.640274267756638 [##########] 100.00% Done...

Experiment saved to 000_res.pkl

D.9 Init: Build Initial Design

from spotpython.design.spacefilling import SpaceFilling

from spotpython.surrogate.kriging import Kriging

from spotpython.fun.objectivefunctions import Analytical

from spotpython.utils.init import fun_control_init

gen = SpaceFilling(2)

rng = np.random.RandomState(1)

lower = np.array([-5,-0])

upper = np.array([10,15])

fun = Analytical().fun_branin

fun_control = fun_control_init(sigma=0)

X = gen.scipy_lhd(10, lower=lower, upper = upper)

print(X)

y = fun(X, fun_control=fun_control)

print(y)[[ 8.97647221 13.41926847]

[ 0.66946019 1.22344228]

[ 5.23614115 13.78185824]

[ 5.6149825 11.5851384 ]

[-1.72963184 1.66516096]

[-4.26945568 7.1325531 ]

[ 1.26363761 10.17935555]

[ 2.88779942 8.05508969]

[-3.39111089 4.15213772]

[ 7.30131231 5.22275244]]

[128.95676449 31.73474356 172.89678121 126.71295908 64.34349975

70.16178611 48.71407916 31.77322887 76.91788181 30.69410529]D.10 Replicability

Seed

gen = SpaceFilling(2, seed=123)

X0 = gen.scipy_lhd(3)

gen = SpaceFilling(2, seed=345)

X1 = gen.scipy_lhd(3)

X2 = gen.scipy_lhd(3)

gen = SpaceFilling(2, seed=123)

X3 = gen.scipy_lhd(3)

X0, X1, X2, X3(array([[0.77254938, 0.31539299],

[0.59321338, 0.93854273],

[0.27469803, 0.3959685 ]]),

array([[0.78373509, 0.86811887],

[0.06692621, 0.6058029 ],

[0.41374778, 0.00525456]]),

array([[0.121357 , 0.69043832],

[0.41906219, 0.32838498],

[0.86742658, 0.52910374]]),

array([[0.77254938, 0.31539299],

[0.59321338, 0.93854273],

[0.27469803, 0.3959685 ]]))D.11 Surrogates

D.11.1 A Simple Predictor

The code below shows how to use a simple model for prediction. Assume that only two (very costly) measurements are available:

- f(0) = 0.5

- f(2) = 2.5

We are interested in the value at \(x_0 = 1\), i.e., \(f(x_0 = 1)\), but cannot run an additional, third experiment.

from sklearn import linear_model

X = np.array([[0], [2]])

y = np.array([0.5, 2.5])

S_lm = linear_model.LinearRegression()

S_lm = S_lm.fit(X, y)

X0 = np.array([[1]])

y0 = S_lm.predict(X0)

print(y0)[1.5]Central Idea: Evaluation of the surrogate model S_lm is much cheaper (or / and much faster) than running the real-world experiment \(f\).

D.12 Tensorboard Setup

D.12.1 Tensorboard Configuration

The TENSORBOARD_CLEAN argument can be set to True in the fun_control dictionary to archive the TensorBoard folder if it already exists. This is useful if you want to start a hyperparameter tuning process from scratch. If you want to continue a hyperparameter tuning process, set TENSORBOARD_CLEAN to False. Then the TensorBoard folder will not be archived and the old and new TensorBoard files will shown in the TensorBoard dashboard.

D.12.2 Starting TensorBoard

TensorBoard can be started as a background process with the following command, where ./runs is the default directory for the TensorBoard log files:

tensorboard --logdir="./runs"The TensorBoard path can be printed with the following command (after a fun_control object has been created):

from spotpython.utils.init import get_tensorboard_path

get_tensorboard_path(fun_control)D.13 Demo/Test: Objective Function Fails

SPOT expects np.nan values from failed objective function values. These are handled. Note: SPOT’s counter considers only successful executions of the objective function.

import numpy as np

from spotpython.fun.objectivefunctions import Analytical

from spotpython.spot import Spot

import numpy as np

from math import inf

# number of initial points:

ni = 20

# number of points

n = 30

fun = Analytical().fun_random_error

fun_control=fun_control_init(

lower = np.array([-1]),

upper= np.array([1]),

fun_evals = n,

show_progress=False)

design_control=design_control_init(init_size=ni)

spot_1 = Spot(fun=fun,

fun_control=fun_control,

design_control=design_control)

# assert value error from the run method

try:

spot_1.run()

except ValueError as e:

print(e)Experiment saved to 000_res.pklD.14 Handling Results: Printing, Saving, and Loading

The results can be printed with the following command:

spot_tuner.print_results(print_screen=False)The tuned hyperparameters can be obtained as a dictionary with the following command:

from spotpython.hyperparameters.values import get_tuned_hyperparameters

get_tuned_hyperparameters(spot_tuner, fun_control)The results can be saved and reloaded with the following commands:

from spotpython.utils.file import save_pickle, load_pickle

from spotpython.utils.init import get_experiment_name

experiment_name = get_experiment_name("024")

SAVE_AND_LOAD = False

if SAVE_AND_LOAD == True:

save_pickle(spot_tuner, experiment_name)

spot_tuner = load_pickle(experiment_name)D.15 spotpython as a Hyperparameter Tuner

D.15.1 Modifying Hyperparameter Levels

spotpython distinguishes between different types of hyperparameters. The following types are supported:

int(integer)float(floating point number)boolean(boolean)factor(categorical)

D.15.1.1 Integer Hyperparameters

Integer hyperparameters can be modified with the set_int_hyperparameter_values() [SOURCE] function. The following code snippet shows how to modify the n_estimators hyperparameter of a random forest model:

from spotriver.hyperdict.river_hyper_dict import RiverHyperDict

from spotpython.utils.init import fun_control_init

from spotpython.hyperparameters.values import set_int_hyperparameter_values

from spotpython.utils.eda import print_exp_table

fun_control = fun_control_init(

core_model_name="forest.AMFRegressor",

hyperdict=RiverHyperDict,

)

print("Before modification:")

print_exp_table(fun_control)

set_int_hyperparameter_values(fun_control, "n_estimators", 2, 5)

print("After modification:")

print_exp_table(fun_control)Before modification:

| name | type | default | lower | upper | transform |

|-----------------|--------|-----------|---------|---------|-----------------------|

| n_estimators | int | 3 | 2 | 5 | transform_power_2_int |

| step | float | 1 | 0.1 | 10 | None |

| use_aggregation | factor | 1 | 0 | 1 | None |

Setting hyperparameter n_estimators to value [2, 5].

Variable type is int.

Core type is None.

Calling modify_hyper_parameter_bounds().

After modification:

| name | type | default | lower | upper | transform |

|-----------------|--------|-----------|---------|---------|-----------------------|

| n_estimators | int | 3 | 2 | 5 | transform_power_2_int |

| step | float | 1 | 0.1 | 10 | None |

| use_aggregation | factor | 1 | 0 | 1 | None |D.15.1.2 Float Hyperparameters

Float hyperparameters can be modified with the set_float_hyperparameter_values() [SOURCE] function. The following code snippet shows how to modify the step hyperparameter of a hyperparameter of a Mondrian Regression Tree model:

from spotriver.hyperdict.river_hyper_dict import RiverHyperDict

from spotpython.utils.init import fun_control_init

from spotpython.hyperparameters.values import set_float_hyperparameter_values

from spotpython.utils.eda import print_exp_table

fun_control = fun_control_init(

core_model_name="forest.AMFRegressor",

hyperdict=RiverHyperDict,

)

print("Before modification:")

print_exp_table(fun_control)

set_float_hyperparameter_values(fun_control, "step", 0.2, 5)

print("After modification:")

print_exp_table(fun_control)Before modification:

| name | type | default | lower | upper | transform |

|-----------------|--------|-----------|---------|---------|-----------------------|

| n_estimators | int | 3 | 2 | 5 | transform_power_2_int |

| step | float | 1 | 0.1 | 10 | None |

| use_aggregation | factor | 1 | 0 | 1 | None |

Setting hyperparameter step to value [0.2, 5].

Variable type is float.

Core type is None.

Calling modify_hyper_parameter_bounds().

After modification:

| name | type | default | lower | upper | transform |

|-----------------|--------|-----------|---------|---------|-----------------------|

| n_estimators | int | 3 | 2 | 5 | transform_power_2_int |

| step | float | 1 | 0.2 | 5 | None |

| use_aggregation | factor | 1 | 0 | 1 | None |D.15.1.3 Boolean Hyperparameters

Boolean hyperparameters can be modified with the set_boolean_hyperparameter_values() [SOURCE] function. The following code snippet shows how to modify the use_aggregation hyperparameter of a Mondrian Regression Tree model:

from spotriver.hyperdict.river_hyper_dict import RiverHyperDict

from spotpython.utils.init import fun_control_init

from spotpython.hyperparameters.values import set_boolean_hyperparameter_values

from spotpython.utils.eda import print_exp_table

fun_control = fun_control_init(

core_model_name="forest.AMFRegressor",

hyperdict=RiverHyperDict,

)

print("Before modification:")

print_exp_table(fun_control)

set_boolean_hyperparameter_values(fun_control, "use_aggregation", 0, 0)

print("After modification:")

print_exp_table(fun_control)Before modification:

| name | type | default | lower | upper | transform |

|-----------------|--------|-----------|---------|---------|-----------------------|

| n_estimators | int | 3 | 2 | 5 | transform_power_2_int |

| step | float | 1 | 0.1 | 10 | None |

| use_aggregation | factor | 1 | 0 | 1 | None |

Setting hyperparameter use_aggregation to value [0, 0].

Variable type is factor.

Core type is bool.

Calling modify_boolean_hyper_parameter_levels().

After modification:

| name | type | default | lower | upper | transform |

|-----------------|--------|-----------|---------|---------|-----------------------|

| n_estimators | int | 3 | 2 | 5 | transform_power_2_int |

| step | float | 1 | 0.1 | 10 | None |

| use_aggregation | factor | 1 | 0 | 0 | None |D.15.1.4 Factor Hyperparameters

Factor hyperparameters can be modified with the set_factor_hyperparameter_values() [SOURCE] function. The following code snippet shows how to modify the leaf_model hyperparameter of a Hoeffding Tree Regressor model:

from spotriver.hyperdict.river_hyper_dict import RiverHyperDict

from spotpython.utils.init import fun_control_init

from spotpython.hyperparameters.values import set_factor_hyperparameter_values

from spotpython.utils.eda import print_exp_table

fun_control = fun_control_init(

core_model_name="tree.HoeffdingTreeRegressor",

hyperdict=RiverHyperDict,

)

print("Before modification:")

print_exp_table(fun_control)

set_factor_hyperparameter_values(fun_control, "leaf_model", ['LinearRegression',

'Perceptron'])

print("After modification:")Before modification:

| name | type | default | lower | upper | transform |

|------------------------|--------|------------------|---------|----------|------------------------|

| grace_period | int | 200 | 10 | 1000 | None |

| max_depth | int | 20 | 2 | 20 | transform_power_2_int |

| delta | float | 1e-07 | 1e-08 | 1e-06 | None |

| tau | float | 0.05 | 0.01 | 0.1 | None |

| leaf_prediction | factor | mean | 0 | 2 | None |

| leaf_model | factor | LinearRegression | 0 | 2 | None |

| model_selector_decay | float | 0.95 | 0.9 | 0.99 | None |

| splitter | factor | EBSTSplitter | 0 | 2 | None |

| min_samples_split | int | 5 | 2 | 10 | None |

| binary_split | factor | 0 | 0 | 1 | None |

| max_size | float | 500.0 | 100 | 1000 | None |

| memory_estimate_period | int | 6 | 3 | 8 | transform_power_10_int |

| stop_mem_management | factor | 0 | 0 | 1 | None |

| remove_poor_attrs | factor | 0 | 0 | 1 | None |

| merit_preprune | factor | 1 | 0 | 1 | None |

After modification: