17 HPT: sklearn SVC on Moons Data

This chapter is a tutorial for the Hyperparameter Tuning (HPT) of a sklearn SVC model on the Moons dataset.

17.1 Step 1: Setup

Before we consider the detailed experimental setup, we select the parameters that affect the run time, the initial design size, and the naming of the experiment:

MAX_TIME = 1

INIT_SIZE = 10

PREFIX = "10"

- MAX_TIME is set to one minute for demonstration purposes. For real experiments, this should be increased to at least 1 hour.

- INIT_SIZE, the size of the initial design, is set to 10 for demonstration purposes. For real experiments, it should be increased.

- PREFIX is used as a prefix for the experiment name and for the TensorBoard log directory.

17.2 Step 2: Initialization of the Empty fun_control Dictionary

spotPython supports the visualization of the hyperparameter tuning process with TensorBoard. The following example shows how to use TensorBoard with spotPython. The fun_control dictionary is the central data structure that is used to control the optimization process. It is initialized as follows:

import numpy as np

from math import inf

from spotPython.utils.init import fun_control_init

from spotPython.hyperparameters.values import set_control_key_value

from spotPython.utils.eda import gen_design_table

fun_control = fun_control_init(

    PREFIX=PREFIX,

    TENSORBOARD_CLEAN=True,

    max_time=MAX_TIME,

    fun_evals=inf,

    tolerance_x=np.sqrt(np.spacing(1)))

Moving TENSORBOARD_PATH: runs/ to TENSORBOARD_PATH_OLD: runs_OLD/runs_2024_04_22_00_28_38

Created spot_tensorboard_path: runs/spot_logs/10_maans14_2024-04-22_00-28-38 for SummaryWriter()

- Since the spot_tensorboard_path argument is not None (by default, a path under runs/spot_logs/ is created, see the output above), spotPython will log the optimization process in the TensorBoard folder.

- The TENSORBOARD_CLEAN argument is set to True to archive the TensorBoard folder if it already exists. This is useful if you want to start a hyperparameter tuning process from scratch. If you want to continue a hyperparameter tuning process, set TENSORBOARD_CLEAN to False. Then the TensorBoard folder will not be archived, and the old and new TensorBoard files will be shown in the TensorBoard dashboard.
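The following sketch shows what "archiving" the TensorBoard folder amounts to at the file-system level. It moves `runs/` to `runs_OLD/runs_<timestamp>`, following the naming in the log message above. The helper `archive_log_dir` is hypothetical and only illustrates the behavior. It is not spotPython's implementation.

```python
import shutil
import tempfile
from datetime import datetime
from pathlib import Path
from typing import Optional


def archive_log_dir(base: Path, log_dir: str = "runs") -> Optional[Path]:
    """Hypothetical helper: move base/log_dir to base/<log_dir>_OLD/<log_dir>_<timestamp>."""
    src = base / log_dir
    if not src.exists():
        return None  # nothing to archive, the run starts from scratch
    stamp = datetime.now().strftime("%Y_%m_%d_%H_%M_%S")
    dst = base / f"{log_dir}_OLD" / f"{log_dir}_{stamp}"
    dst.parent.mkdir(parents=True, exist_ok=True)  # create runs_OLD/ if needed
    shutil.move(str(src), str(dst))  # old event files are kept, only their location changes
    return dst


# Usage: simulate an old TensorBoard folder inside a temporary directory
with tempfile.TemporaryDirectory() as tmp:
    base = Path(tmp)
    (base / "runs" / "spot_logs").mkdir(parents=True)
    (base / "runs" / "spot_logs" / "events.txt").write_text("old run")
    archived = archive_log_dir(base)
    runs_still_there = (base / "runs").exists()
    archived_content = (archived / "spot_logs" / "events.txt").read_text()
    archived_parent = archived.parent.name
    second_call = archive_log_dir(base)  # runs/ is gone now, so nothing is moved
print(archived_parent, runs_still_there, archived_content, second_call)
```

With TENSORBOARD_CLEAN set to False, the equivalent of this move is skipped, so new event files are written next to the old ones.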

17.3 Step 3: sklearn Load Data (Classification)

We randomly generate a two-dimensional classification dataset with make_moons.

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.datasets import make_moons, make_circles, make_classification

n_features = 2

n_samples = 500

target_column = "y"

ds = make_moons(n_samples, noise=0.5, random_state=0)

X, y = ds

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.3, random_state=42

)

train = pd.DataFrame(np.hstack((X_train, y_train.reshape(-1, 1))))

test = pd.DataFrame(np.hstack((X_test, y_test.reshape(-1, 1))))

train.columns = [f"x{i}" for i in range(1, n_features+1)] + [target_column]

test.columns = [f"x{i}" for i in range(1, n_features+1)] + [target_column]

train.head()

| | x1 | x2 | y |
|---|---|---|---|
| 0 | 1.960101 | 0.383172 | 0.0 |
| 1 | 2.354420 | -0.536942 | 1.0 |
| 2 | 1.682186 | -0.332108 | 0.0 |
| 3 | 1.856507 | 0.687220 | 1.0 |
| 4 | 1.925524 | 0.427413 | 1.0 |
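A quick, self-contained sanity check of the split can help before the data are handed to spotPython. It uses the same generator settings as above. With test_size=0.3, the 500 samples split into 350 training rows and 150 test rows. These match the n_samples value and the DataFrame sizes that appear in the fun_control output later.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split

# Same settings as above: 500 noisy moon points, 30 % held out for testing
X, y = make_moons(500, noise=0.5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

columns = ["x1", "x2", "y"]
train = pd.DataFrame(np.hstack((X_train, y_train.reshape(-1, 1))), columns=columns)
test = pd.DataFrame(np.hstack((X_test, y_test.reshape(-1, 1))), columns=columns)

# 500 * 0.3 = 150 test rows, the remaining 350 rows are used for training
print(train.shape, test.shape)  # (350, 3) (150, 3)
# np.hstack promotes the integer labels to float, hence 0.0 / 1.0 in the y column
print(train["y"].dtype)  # float64
```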

import matplotlib.pyplot as plt

from matplotlib.colors import ListedColormap

x_min, x_max = X[:, 0].min() - 0.5, X[:, 0].max() + 0.5

y_min, y_max = X[:, 1].min() - 0.5, X[:, 1].max() + 0.5

cm = plt.cm.RdBu

cm_bright = ListedColormap(["#FF0000", "#0000FF"])

ax = plt.subplot(1, 1, 1)

ax.set_title("Input data")

# Plot the training points

ax.scatter(X_train[:, 0], X_train[:, 1], c=y_train, cmap=cm_bright, edgecolors="k")

# Plot the testing points

ax.scatter(

X_test[:, 0], X_test[:, 1], c=y_test, cmap=cm_bright, alpha=0.6, edgecolors="k"

)

ax.set_xlim(x_min, x_max)

ax.set_ylim(y_min, y_max)

ax.set_xticks(())

ax.set_yticks(())

plt.tight_layout()

plt.show()

n_samples = len(train)

# add the dataset to the fun_control

fun_control.update({"data": None,  # dataset,

    "train": train,

    "test": test,

    "n_samples": n_samples,

    "target_column": target_column})

17.4 Step 4: Specification of the Preprocessing Model

Data preprocessing can be very simple, e.g., you can ignore it. In that case, you would choose the prep_model None:

prep_model = None

fun_control.update({"prep_model": prep_model})

A default approach for numerical data is the StandardScaler (mean 0, variance 1). This can be selected as follows:

from sklearn.preprocessing import StandardScaler

prep_model = StandardScaler()

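fun_control.update({"prep_model": prep_model})

More complex preprocessing steps are also possible. For example, the following pipeline one-hot encodes the (here empty) list of categorical columns and standardizes all remaining columns: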

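from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import OneHotEncoder, StandardScaler

categorical_columns = []

one_hot_encoder = OneHotEncoder(handle_unknown="ignore", sparse_output=False)

# Not added to fun_control here: this tutorial continues with the StandardScaler from above

prep_model = ColumnTransformer(

    transformers=[

        ("categorical", one_hot_encoder, categorical_columns),

    ],

    remainder=StandardScaler(),

)

17.5 Step 5: Select Model (algorithm) and core_model_hyper_dict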

The algorithm (ML model) to be tuned is selected by passing its sklearn class. For example, the SVC support vector machine classifier is selected as follows:

from spotPython.hyperparameters.values import add_core_model_to_fun_control

from spotPython.hyperdict.sklearn_hyper_dict import SklearnHyperDict

from sklearn.svm import SVC

add_core_model_to_fun_control(core_model=SVC,

    fun_control=fun_control,

    hyper_dict=SklearnHyperDict,

    filename=None)

Now fun_control has the information from the JSON file. The corresponding entries for the core_model class are shown below.

fun_control['core_model_hyper_dict']

{'C': {'type': 'float',

'default': 1.0,

'transform': 'None',

'lower': 0.1,

'upper': 10.0},

'kernel': {'levels': ['linear', 'poly', 'rbf', 'sigmoid'],

'type': 'factor',

'default': 'rbf',

'transform': 'None',

'core_model_parameter_type': 'str',

'lower': 0,

'upper': 3},

'degree': {'type': 'int',

'default': 3,

'transform': 'None',

'lower': 3,

'upper': 3},

'gamma': {'levels': ['scale', 'auto'],

'type': 'factor',

'default': 'scale',

'transform': 'None',

'core_model_parameter_type': 'str',

'lower': 0,

'upper': 1},

'coef0': {'type': 'float',

'default': 0.0,

'transform': 'None',

'lower': 0.0,

'upper': 0.0},

'shrinking': {'levels': [0, 1],

'type': 'factor',

'default': 0,

'transform': 'None',

'core_model_parameter_type': 'bool',

'lower': 0,

'upper': 1},

'probability': {'levels': [0, 1],

'type': 'factor',

'default': 0,

'transform': 'None',

'core_model_parameter_type': 'bool',

'lower': 0,

'upper': 1},

'tol': {'type': 'float',

'default': 0.001,

'transform': 'None',

'lower': 0.0001,

'upper': 0.01},

'cache_size': {'type': 'float',

'default': 200,

'transform': 'None',

'lower': 100,

'upper': 400},

'break_ties': {'levels': [0, 1],

'type': 'factor',

'default': 0,

'transform': 'None',

'core_model_parameter_type': 'bool',

'lower': 0,

'upper': 1}}

sklearn Model Selection

The following sklearn models are supported by default:

- RidgeCV

- RandomForestClassifier

- SVC

- LogisticRegression

- KNeighborsClassifier

- GradientBoostingClassifier

- GradientBoostingRegressor

- ElasticNet

They can be imported as follows:

from sklearn.linear_model import RidgeCV

from sklearn.ensemble import RandomForestClassifier

from sklearn.svm import SVC

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.ensemble import GradientBoostingRegressor

from sklearn.linear_model import ElasticNet

17.6 Step 6: Modify hyper_dict Hyperparameters for the Selected Algorithm aka core_model

spotPython provides functions for modifying the hyperparameters, their bounds and factors as well as for activating and de-activating hyperparameters without re-compilation of the Python source code. These functions were described in ?sec-modification-of-hyperparameters-14.
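To make the effect of these modifications concrete, here is a sketch on plain dictionaries modeled on the SVC entries shown above. The helpers `set_bounds`, `set_levels`, and `decode_factor` are hypothetical and are not spotPython's functions. The sketch also assumes that a factor's lower/upper bounds are the indices of its first and last level, as the printed entries suggest (e.g., four kernel levels with lower 0 and upper 3).

```python
import copy

# Simplified copies of two entries from fun_control["core_model_hyper_dict"]
hyper_dict = {
    "tol": {"type": "float", "default": 0.001, "transform": "None",
            "lower": 0.0001, "upper": 0.01},
    "kernel": {"levels": ["linear", "poly", "rbf", "sigmoid"], "type": "factor",
               "default": "rbf", "transform": "None",
               "core_model_parameter_type": "str", "lower": 0, "upper": 3},
}


def set_bounds(hd, name, bounds):
    """Hypothetical: overwrite lower/upper of a numeric entry (returns a modified copy)."""
    new = copy.deepcopy(hd)
    new[name]["lower"], new[name]["upper"] = bounds
    return new


def set_levels(hd, name, levels):
    """Hypothetical: restrict a factor to `levels`; its bounds become level indices."""
    new = copy.deepcopy(hd)
    new[name]["levels"] = list(levels)
    new[name]["lower"] = 0
    new[name]["upper"] = len(levels) - 1
    return new


def decode_factor(hd, name, value):
    """Map a numeric design value back to a factor level by rounding to an index."""
    return hd[name]["levels"][int(round(value))]


hd = set_bounds(hyper_dict, "tol", [1e-5, 1e-3])
hd = set_levels(hd, "kernel", ["poly", "rbf"])
print(hd["tol"])                         # lower 1e-05, upper 0.001
print(hd["kernel"])                      # levels ['poly', 'rbf'], lower 0, upper 1
print(decode_factor(hd, "kernel", 1.0))  # rbf
```

The resulting entries have the same shape as the tol and kernel outputs printed in the following subsections.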

17.6.1 Modify hyperparameter of type numeric and integer (boolean)

Numeric and boolean values can be modified using the modify_hyper_parameter_bounds function.

sklearn Model Hyperparameters

The hyperparameters of the sklearn SVC model are described in the sklearn documentation.

- For example, to change the tol hyperparameter of the SVC model to the interval [1e-5, 1e-3], the following code can be used:

from spotPython.hyperparameters.values import modify_hyper_parameter_bounds

modify_hyper_parameter_bounds(fun_control, "tol", bounds=[1e-5, 1e-3])

# Collapsing the bounds of probability to [0, 0] fixes it at 0 (False), so it is not tuned

modify_hyper_parameter_bounds(fun_control, "probability", bounds=[0, 0])

fun_control["core_model_hyper_dict"]["tol"]

{'type': 'float',

'default': 0.001,

'transform': 'None',

'lower': 1e-05,

'upper': 0.001}

17.6.2 Modify hyperparameter of type factor

Factors can be modified with the modify_hyper_parameter_levels function. For example, to exclude the sigmoid kernel from the tuning, the kernel hyperparameter of the SVC model can be modified as follows:

from spotPython.hyperparameters.values import modify_hyper_parameter_levels

modify_hyper_parameter_levels(fun_control, "kernel", ["poly", "rbf"])

fun_control["core_model_hyper_dict"]["kernel"]

{'levels': ['poly', 'rbf'],

'type': 'factor',

'default': 'rbf',

'transform': 'None',

'core_model_parameter_type': 'str',

'lower': 0,

'upper': 1}

17.6.3 Optimizers

Optimizers are described in ?sec-optimizers-14.

17.7 Step 7: Selection of the Objective (Loss) Function

There are two metrics:

- metric_river is used for the river-based evaluation via eval_oml_iter_progressive.

- metric_sklearn is used for the sklearn-based evaluation.

from sklearn.metrics import mean_absolute_error, accuracy_score, roc_curve, roc_auc_score, log_loss, mean_squared_error

fun_control.update({

"metric_sklearn": log_loss,

"weights": 1.0,

})

metric_sklearn: Minimization and Maximization

- Because metric_sklearn is used for the sklearn-based evaluation, it is important to know whether the metric should be minimized or maximized.

- The weights parameter indicates whether the metric should be minimized or maximized.

- If weights is set to -1.0, the metric is maximized, e.g., weights = -1.0 for roc_auc_score.

- If weights is set to 1.0, the metric is minimized, e.g., weights = 1.0 for mean_absolute_error or log_loss.
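One way to read the sign convention: the tuner always minimizes, so a metric that should be maximized is multiplied by -1.0. The sketch below assumes the minimized quantity is `weights * metric(y_true, y_pred)`. The helper `weighted_loss` and the scores of the two models are hypothetical and serve only as an illustration.

```python
from sklearn.metrics import mean_absolute_error, roc_auc_score


def weighted_loss(metric, y_true, y_pred, weights):
    """Assumed convention: the tuner minimizes weights * metric(y_true, y_pred)."""
    return weights * metric(y_true, y_pred)


y_true = [0, 0, 1, 1]
scores_a = [0.1, 0.4, 0.35, 0.8]  # hypothetical scores of model A (one mis-ranked pair)
scores_b = [0.1, 0.2, 0.7, 0.8]   # hypothetical scores of model B (perfect ranking)

# roc_auc_score: higher is better, so weights = -1.0 turns it into a loss
loss_a = weighted_loss(roc_auc_score, y_true, scores_a, weights=-1.0)  # -0.75
loss_b = weighted_loss(roc_auc_score, y_true, scores_b, weights=-1.0)  # -1.0
best = "A" if loss_a < loss_b else "B"  # minimizing the weighted loss picks B

# mean_absolute_error: lower is better, so weights = 1.0 leaves it unchanged
mae = weighted_loss(mean_absolute_error, [1.0, 2.0], [1.5, 2.5], weights=1.0)  # 0.5
print(loss_a, loss_b, best, mae)
```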

17.7.1 Predict Classes or Class Probabilities

If the key "predict_proba" is set to True, the class probabilities are predicted. False is the default, i.e., the classes are predicted.
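The difference between the two settings can be seen directly in sklearn. The sketch below is independent of spotPython and reuses the Moons settings from Step 3. It shows two things. First, SVC only offers predict_proba when it is created with probability=True, and that flag is fixed at 0 in this tutorial. Second, log_loss computed on hard class labels is much larger than on probabilities, because every misclassified point is treated as a confidently wrong prediction. That the objective passes the predicted labels to log_loss unchanged when predict_proba is False is our assumption.

```python
from sklearn.datasets import make_moons
from sklearn.metrics import log_loss
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X, y = make_moons(500, noise=0.5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# probability=True is required for SVC.predict_proba
svc = SVC(probability=True, random_state=0).fit(X_train, y_train)

labels = svc.predict(X_test)        # shape (150,): hard class labels 0/1
probas = svc.predict_proba(X_test)  # shape (150, 2): one probability column per class

# Hard labels act as probabilities of exactly 0 or 1; log_loss clips them,
# so each misclassified point contributes a very large penalty
loss_labels = log_loss(y_test, labels)
loss_probas = log_loss(y_test, probas)
print(labels.shape, probas.shape, loss_labels > loss_probas)

# With probability=False, predict_proba is not available on SVC
has_proba = hasattr(SVC(probability=False).fit(X_train, y_train), "predict_proba")
print(has_proba)  # False
```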

fun_control.update({

"predict_proba": False,

})

17.8 Step 8: Calling the SPOT Function

17.8.1 The Objective Function

The objective function is selected next. It implements an interface from sklearn’s training, validation, and testing methods to spotPython.

from spotPython.fun.hypersklearn import HyperSklearn

fun = HyperSklearn().fun_sklearn

The following code snippet shows how to get the default hyperparameters as an array, so that they can be passed to the Spot function.

from spotPython.hyperparameters.values import get_default_hyperparameters_as_array

X_start = get_default_hyperparameters_as_array(fun_control)

17.8.2 Run the Spot Optimizer

The class Spot is the hyperparameter tuning workhorse. It is initialized with the following parameters:

- fun: the objective function

- fun_control: the dictionary with the control parameters for the objective function

- design: the experimental design

- design_control: the dictionary with the control parameters for the experimental design

- surrogate: the surrogate model

- surrogate_control: the dictionary with the control parameters for the surrogate model

- optimizer: the optimizer

- optimizer_control: the dictionary with the control parameters for the optimizer

The total run time may exceed the specified max_time, because the initial design (here: init_size = INIT_SIZE as specified above) is always evaluated, even if this takes longer than max_time.
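The reason the budget can be exceeded is that the time limit is only checked after the initial design has been evaluated in full. Here is a minimal sketch of that control flow under simplifying assumptions. A uniform random candidate stands in for the surrogate-based proposal. The time budget is given in seconds, whereas spotPython's max_time is in minutes. `toy_objective` and `budgeted_search` are hypothetical names, and this is not the Spot implementation.

```python
import time

import numpy as np


def toy_objective(X: np.ndarray) -> np.ndarray:
    """Hypothetical objective: one loss value per row (= per configuration)."""
    time.sleep(0.01)  # pretend each call is an expensive model fit
    return np.sum((X - 0.3) ** 2, axis=1)


def budgeted_search(fun, lower, upper, init_size, max_time_s, seed=123):
    """Sketch: the time budget is only checked AFTER the initial design."""
    rng = np.random.default_rng(seed)
    start = time.perf_counter()
    # 1) the initial design is always evaluated completely, regardless of the budget
    X = rng.uniform(lower, upper, size=(init_size, len(lower)))
    y = fun(X)
    # 2) further points are proposed only while the budget is not exhausted
    while time.perf_counter() - start < max_time_s:
        x_new = rng.uniform(lower, upper, size=(1, len(lower)))  # stand-in for the surrogate
        X = np.vstack([X, x_new])
        y = np.concatenate([y, fun(x_new)])
    return X, y


X_hist, y_hist = budgeted_search(toy_objective, lower=[0.0, 0.0], upper=[1.0, 1.0],
                                 init_size=10, max_time_s=0.0)
print(len(y_hist))  # 10: with a zero budget, only the initial design is evaluated
```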

from spotPython.utils.init import design_control_init, surrogate_control_init

design_control = design_control_init()

set_control_key_value(control_dict=design_control,

key="init_size",

value=INIT_SIZE,

replace=True)

surrogate_control = surrogate_control_init(noise=True,

n_theta=2)

from spotPython.spot import spot

spot_tuner = spot.Spot(fun=fun,

fun_control=fun_control,

design_control=design_control,

surrogate_control=surrogate_control)

spot_tuner.run(X_start=X_start)

spotPython tuning: 5.734217584632275 [----------] 1.74%

spotPython tuning: 5.734217584632275 [----------] 4.15%

spotPython tuning: 5.734217584632275 [#---------] 7.23%

spotPython tuning: 5.734217584632275 [#---------] 9.32%

spotPython tuning: 5.734217584632275 [#---------] 11.97%

spotPython tuning: 5.734217584632275 [#---------] 14.49%

spotPython tuning: 5.734217584632275 [##--------] 16.75%

spotPython tuning: 5.734217584632275 [##--------] 21.14%

spotPython tuning: 5.734217584632275 [###-------] 26.12%

spotPython tuning: 5.734217584632275 [###-------] 31.59%

spotPython tuning: 5.734217584632275 [####------] 37.41%

spotPython tuning: 5.734217584632275 [####------] 44.71%

spotPython tuning: 5.734217584632275 [#####-----] 51.15%

spotPython tuning: 5.734217584632275 [######----] 55.42%

spotPython tuning: 5.734217584632275 [######----] 59.07%

spotPython tuning: 5.734217584632275 [#######---] 68.45%

spotPython tuning: 5.734217584632275 [########--] 80.32%

spotPython tuning: 5.734217584632275 [#########-] 92.46%

spotPython tuning: 5.734217584632275 [##########] 100.00% Done...

{'CHECKPOINT_PATH': 'runs/saved_models/',

'DATASET_PATH': 'data/',

'PREFIX': '10',

'RESULTS_PATH': 'results/',

'TENSORBOARD_PATH': 'runs/',

'_L_in': None,

'_L_out': None,

'_torchmetric': None,

'accelerator': 'auto',

'converters': None,

'core_model': <class 'sklearn.svm._classes.SVC'>,

'core_model_hyper_dict': {'C': {'default': 1.0,

'lower': 0.1,

'transform': 'None',

'type': 'float',

'upper': 10.0},

'break_ties': {'core_model_parameter_type': 'bool',

'default': 0,

'levels': [0, 1],

'lower': 0,

'transform': 'None',

'type': 'factor',

'upper': 1},

'cache_size': {'default': 200,

'lower': 100,

'transform': 'None',

'type': 'float',

'upper': 400},

'coef0': {'default': 0.0,

'lower': 0.0,

'transform': 'None',

'type': 'float',

'upper': 0.0},

'degree': {'default': 3,

'lower': 3,

'transform': 'None',

'type': 'int',

'upper': 3},

'gamma': {'core_model_parameter_type': 'str',

'default': 'scale',

'levels': ['scale', 'auto'],

'lower': 0,

'transform': 'None',

'type': 'factor',

'upper': 1},

'kernel': {'core_model_parameter_type': 'str',

'default': 'rbf',

'levels': ['poly', 'rbf'],

'lower': 0,

'transform': 'None',

'type': 'factor',

'upper': 1},

'probability': {'core_model_parameter_type': 'bool',

'default': 0,

'levels': [0, 1],

'lower': 0,

'transform': 'None',

'type': 'factor',

'upper': 0},

'shrinking': {'core_model_parameter_type': 'bool',

'default': 0,

'levels': [0, 1],

'lower': 0,

'transform': 'None',

'type': 'factor',

'upper': 1},

'tol': {'default': 0.001,

'lower': 1e-05,

'transform': 'None',

'type': 'float',

'upper': 0.001}},

'core_model_hyper_dict_default': {'C': {'default': 1.0,

'lower': 0.1,

'transform': 'None',

'type': 'float',

'upper': 10.0},

'break_ties': {'core_model_parameter_type': 'bool',

'default': 0,

'levels': [0, 1],

'lower': 0,

'transform': 'None',

'type': 'factor',

'upper': 1},

'cache_size': {'default': 200,

'lower': 100,

'transform': 'None',

'type': 'float',

'upper': 400},

'coef0': {'default': 0.0,

'lower': 0.0,

'transform': 'None',

'type': 'float',

'upper': 0.0},

'degree': {'default': 3,

'lower': 3,

'transform': 'None',

'type': 'int',

'upper': 3},

'gamma': {'core_model_parameter_type': 'str',

'default': 'scale',

'levels': ['scale', 'auto'],

'lower': 0,

'transform': 'None',

'type': 'factor',

'upper': 1},

'kernel': {'core_model_parameter_type': 'str',

'default': 'rbf',

'levels': ['linear',

'poly',

'rbf',

'sigmoid'],

'lower': 0,

'transform': 'None',

'type': 'factor',

'upper': 3},

'probability': {'core_model_parameter_type': 'bool',

'default': 0,

'levels': [0, 1],

'lower': 0,

'transform': 'None',

'type': 'factor',

'upper': 1},

'shrinking': {'core_model_parameter_type': 'bool',

'default': 0,

'levels': [0, 1],

'lower': 0,

'transform': 'None',

'type': 'factor',

'upper': 1},

'tol': {'default': 0.001,

'lower': 0.0001,

'transform': 'None',

'type': 'float',

'upper': 0.01}},

'core_model_name': None,

'counter': 29,

'data': None,

'data_dir': './data',

'data_module': None,

'data_set': None,

'data_set_name': None,

'db_dict_name': None,

'design': None,

'device': None,

'devices': 1,

'enable_progress_bar': False,

'eval': None,

'fun_evals': inf,

'fun_repeats': 1,

'horizon': None,

'infill_criterion': 'y',

'k_folds': 3,

'log_graph': False,

'log_level': 50,

'loss_function': None,

'lower': array([1.e-01, 0.e+00, 3.e+00, 0.e+00, 0.e+00, 0.e+00, 0.e+00, 1.e-04,

1.e+02, 0.e+00]),

'max_surrogate_points': 30,

'max_time': 1,

'metric_params': {},

'metric_river': None,

'metric_sklearn': <function log_loss at 0x3a9bcf2e0>,

'metric_sklearn_name': None,

'metric_torch': None,

'model_dict': {},

'n_points': 1,

'n_samples': 350,

'n_total': None,

'noise': False,

'num_workers': 0,

'ocba_delta': 0,

'oml_grace_period': None,

'optimizer': None,

'path': None,

'predict_proba': False,

'prep_model': StandardScaler(),

'prep_model_name': None,

'progress_file': None,

'save_model': False,

'scenario': None,

'seed': 123,

'show_batch_interval': 1000000,

'show_models': False,

'show_progress': True,

'shuffle': None,

'sigma': 0.0,

'spot_tensorboard_path': 'runs/spot_logs/10_maans14_2024-04-22_00-28-38',

'spot_writer': <torch.utils.tensorboard.writer.SummaryWriter object at 0x3a8ab30d0>,

'target_column': 'y',

'target_type': None,

'task': None,

'test': x1 x2 y

0 0.811141 -0.243422 1.0

1 1.889618 -0.091949 1.0

2 -1.322321 1.406255 0.0

3 0.980227 0.657971 0.0

4 -0.011067 -0.194099 0.0

.. ... ... ...

145 1.331125 -0.598679 1.0

146 0.553564 0.898622 0.0

147 -0.531459 0.007683 0.0

148 1.142523 0.501602 0.0

149 2.126038 1.390414 0.0

[150 rows x 3 columns],

'test_seed': 1234,

'test_size': 0.4,

'tolerance_x': 1.4901161193847656e-08,

'train': x1 x2 y

0 1.960101 0.383172 0.0

1 2.354420 -0.536942 1.0

2 1.682186 -0.332108 0.0

3 1.856507 0.687220 1.0

4 1.925524 0.427413 1.0

.. ... ... ...

345 1.014021 1.486690 0.0

346 1.040602 -0.693202 1.0

347 1.420964 -0.798333 1.0

348 1.955329 -0.074111 1.0

349 0.144646 0.384148 0.0

[350 rows x 3 columns],

'upper': array([1.e+01, 3.e+00, 3.e+00, 1.e+00, 0.e+00, 1.e+00, 1.e+00, 1.e-02,

4.e+02, 1.e+00]),

'var_name': ['C',

'kernel',

'degree',

'gamma',

'coef0',

'shrinking',

'probability',

'tol',

'cache_size',

'break_ties'],

'var_type': ['float',

'factor',

'int',

'factor',

'float',

'factor',

'factor',

'float',

'float',

'factor'],

'verbosity': 0,

'weight_coeff': 0.0,

'weights': 1.0,

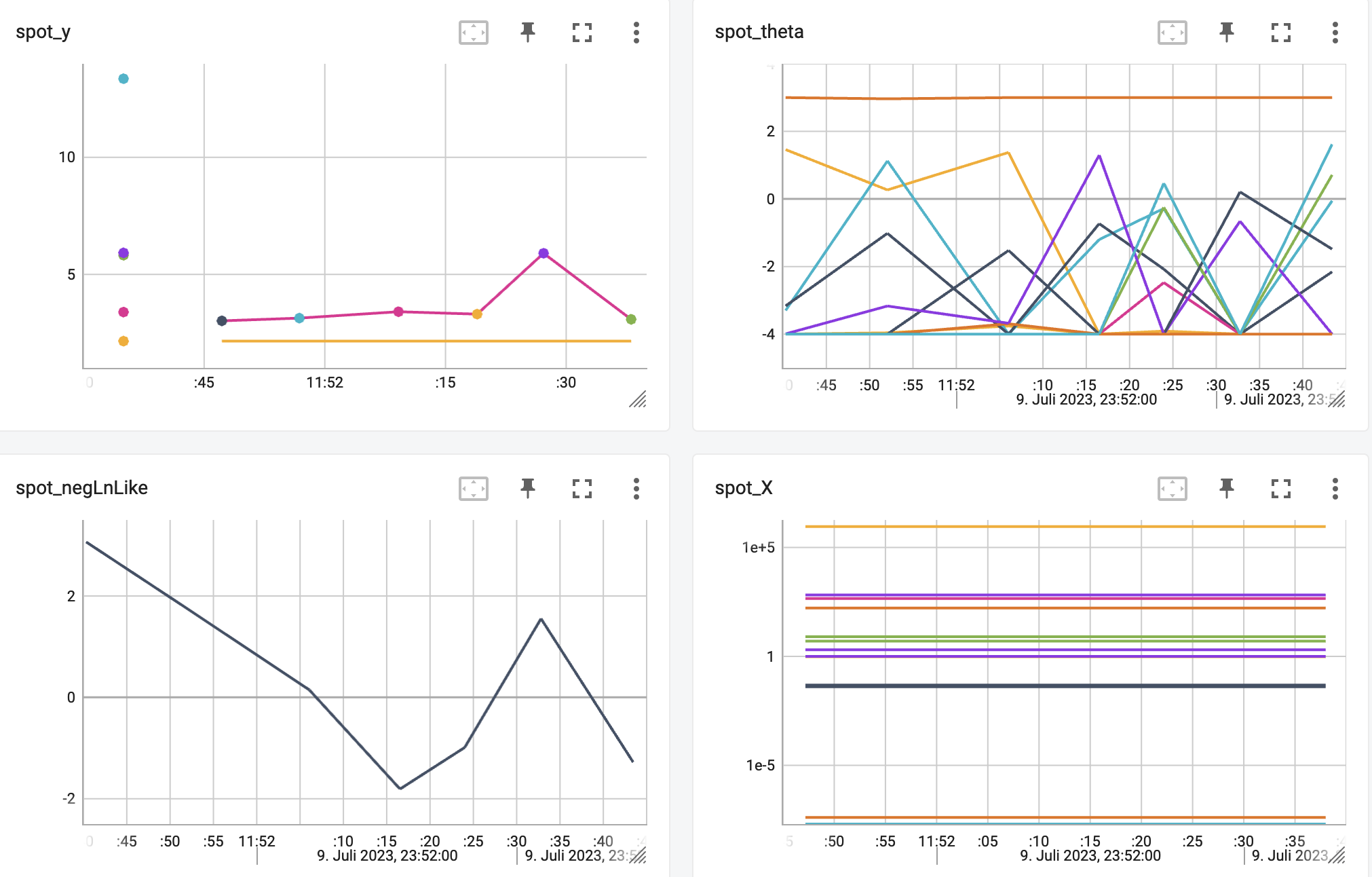

'weights_entry': None}

<spotPython.spot.spot.Spot at 0x3ab0d77d0>

17.8.3 TensorBoard

Now we can start TensorBoard in the background with the following command, where ./runs is the default directory for the TensorBoard log files:

tensorboard --logdir="./runs"

The TensorBoard path can be printed with the following command:

from spotPython.utils.init import get_tensorboard_path

get_tensorboard_path(fun_control)

'runs/'

We can access the TensorBoard web server with the following URL:

http://localhost:6006/

The TensorBoard plot illustrates how spotPython can be used as a microscope for the internal mechanisms of the surrogate-based optimization process. Here, one important parameter, the correlation parameter \(\theta\) of the Kriging surrogate, is plotted against the number of optimization steps.
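To see what \(\theta\) controls, consider the standard Kriging correlation between two points, \(R(x, x') = \exp(-\sum_j \theta_j |x_j - x'_j|^p)\). A larger \(\theta_j\) makes the correlation decay faster along dimension \(j\), which means the model treats that dimension as more influential. The sketch below implements this textbook form with p = 2. spotPython's Kriging class may parametrize \(\theta\) differently (for example, on a log scale), so the code is only an illustration.

```python
import numpy as np


def kriging_corr(x, x_prime, theta, p=2.0):
    """Correlation exp(-sum_j theta_j * |x_j - x'_j|**p) between two points."""
    x, x_prime, theta = map(np.asarray, (x, x_prime, theta))
    return float(np.exp(-np.sum(theta * np.abs(x - x_prime) ** p)))


x, x_prime = [0.0, 0.0], [0.5, 0.0]  # the points differ only in the first dimension
r_small = kriging_corr(x, x_prime, theta=[1.0, 1.0])   # exp(-0.25), about 0.78
r_large = kriging_corr(x, x_prime, theta=[10.0, 1.0])  # exp(-2.5), about 0.08
r_same = kriging_corr(x, x, theta=[10.0, 1.0])         # identical points: 1.0
print(round(r_small, 2), round(r_large, 2), r_same)
```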

17.9 Step 9: Results

After the hyperparameter tuning run is finished, the results can be saved and reloaded with the following commands:

from spotPython.utils.file import save_pickle, load_pickle

from spotPython.utils.init import get_experiment_name

experiment_name = get_experiment_name(PREFIX)

SAVE_AND_LOAD = False

if SAVE_AND_LOAD:

    save_pickle(spot_tuner, experiment_name)

    spot_tuner = load_pickle(experiment_name)

After the hyperparameter tuning run is finished, the progress of the hyperparameter tuning can be visualized. The black points represent the performance values (score or metric) of the hyperparameter configurations from the initial design, whereas the red points represent the hyperparameter configurations found by the surrogate-model-based optimization.

spot_tuner.plot_progress(log_y=True, filename="./figures/" + experiment_name+"_progress.pdf")

Results can also be printed in tabular form.

print(gen_design_table(fun_control=fun_control, spot=spot_tuner))

| name | type | default | lower | upper | tuned | transform | importance | stars |
|-------------|--------|-----------|---------|---------|----------------------|-------------|--------------|---------|
| C | float | 1.0 | 0.1 | 10.0 | 2.394471655384338 | None | 0.20 | . |
| kernel | factor | rbf | 0.0 | 1.0 | rbf | None | 100.00 | *** |
| degree | int | 3 | 3.0 | 3.0 | 3.0 | None | 0.00 | |
| gamma | factor | scale | 0.0 | 1.0 | scale | None | 0.00 | |
| coef0 | float | 0.0 | 0.0 | 0.0 | 0.0 | None | 0.00 | |
| shrinking | factor | 0 | 0.0 | 1.0 | 0 | None | 0.45 | . |
| probability | factor | 0 | 0.0 | 0.0 | 0 | None | 0.00 | |
| tol | float | 0.001 | 1e-05 | 0.001 | 0.000982585315792582 | None | 0.02 | |
| cache_size | float | 200.0 | 100.0 | 400.0 | 375.6371648003268 | None | 0.00 | |
| break_ties | factor | 0 | 0.0 | 1.0 | 0 | None | 0.00 | |

A histogram can be used to visualize the most important hyperparameters.

spot_tuner.plot_importance(threshold=0.0025, filename="./figures/" + experiment_name+"_importance.pdf")

17.10 Get Default Hyperparameters

The default hyperparameters, which will be used for a comparison with the tuned hyperparameters, can be obtained with the following commands:

from spotPython.hyperparameters.values import get_one_core_model_from_X

from spotPython.hyperparameters.values import get_default_hyperparameters_as_array

X_start = get_default_hyperparameters_as_array(fun_control)

model_default = get_one_core_model_from_X(X_start, fun_control, default=True)

model_default

SVC(break_ties=0, cache_size=200.0, probability=0, shrinking=0)
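As an independent cross-check outside spotPython, the default SVC (whose repr is shown above) and the tuned values from the design table in Step 9 can be plugged into a plain sklearn pipeline. The pipeline uses the StandardScaler chosen in Step 4 and is scored by hold-out accuracy. This is a sketch under our own, simpler evaluation protocol, so it need not reproduce spotPython's log-loss ranking. Boolean flags are passed as False instead of 0.

```python
from sklearn.datasets import make_moons
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_moons(500, noise=0.5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Default configuration, matching the repr above
default_svc = SVC(break_ties=False, cache_size=200.0, probability=False, shrinking=False)
# Tuned values copied from the design table of this run
tuned_svc = SVC(C=2.394471655384338, kernel="rbf", gamma="scale",
                tol=0.000982585315792582, cache_size=375.6371648003268,
                shrinking=False, break_ties=False, probability=False)

scores = {}
for name, svc in [("default", default_svc), ("tuned", tuned_svc)]:
    # StandardScaler mirrors the prep_model chosen in Step 4
    model = make_pipeline(StandardScaler(), svc).fit(X_train, y_train)
    scores[name] = accuracy_score(y_test, model.predict(X_test))
print(scores)
```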

17.11 Get SPOT Results

In a similar way, we can obtain the hyperparameters found by spotPython.

from spotPython.hyperparameters.values import get_one_core_model_from_X

X = spot_tuner.to_all_dim(spot_tuner.min_X.reshape(1,-1))

model_spot = get_one_core_model_from_X(X, fun_control)

17.11.1 Plot: Compare Predictions

from spotPython.plot.validation import plot_roc

plot_roc(model_list=[model_default, model_spot], fun_control= fun_control, model_names=["Default", "Spot"])

from spotPython.plot.validation import plot_confusion_matrix

plot_confusion_matrix(model=model_default, fun_control=fun_control, title = "Default")

plot_confusion_matrix(model=model_spot, fun_control=fun_control, title="SPOT")

min(spot_tuner.y), max(spot_tuner.y)

(5.734217584632275, 7.782152436286657)

17.11.2 Detailed Hyperparameter Plots

spot_tuner.plot_important_hyperparameter_contour(filename=None)

C: 0.19891447199625806

kernel: 100.0

gamma: 0.00259286725080472

shrinking: 0.4457896110181442

tol: 0.01887455725112635

cache_size: 0.00259286725080472

break_ties: 0.00259286725080472

impo: [['C', 0.19891447199625806], ['kernel', 100.0], ['gamma', 0.00259286725080472], ['shrinking', 0.4457896110181442], ['tol', 0.01887455725112635], ['cache_size', 0.00259286725080472], ['break_ties', 0.00259286725080472]]

indices: [1, 3, 0, 4, 2, 5, 6]

indices after max_imp selection: [1, 3, 0, 4, 2, 5, 6]

17.11.3 Parallel Coordinates Plot

spot_tuner.parallel_plot()

17.11.4 Plot all Combinations of Hyperparameters

- Warning: this may take a while.

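PLOT_ALL = False

if PLOT_ALL:

    n = spot_tuner.k

    # common range of objective values for all contour plots

    min_z = min(spot_tuner.y)

    max_z = max(spot_tuner.y)

    for i in range(n-1):

        for j in range(i+1, n):

            spot_tuner.plot_contour(i=i, j=j, min_z=min_z, max_z=max_z)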
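The warning about run time follows from the nested loop. It visits every unordered pair (i, j) with i < j exactly once, so it produces n(n-1)/2 contour plots. For example, n = 10 yields 45 plots. Whether spot_tuner.k counts all ten hyperparameters in var_name or only the non-fixed ones is not shown here. The sketch below, with the hypothetical helper `contour_pairs`, checks that the loop order matches itertools.combinations.

```python
from itertools import combinations


def contour_pairs(n):
    """All (i, j) index pairs visited by the nested loop above, with i < j."""
    return [(i, j) for i in range(n - 1) for j in range(i + 1, n)]


pairs = contour_pairs(10)
print(len(pairs))  # 45 = 10 * 9 / 2
# Same pairs, in the same order, as itertools.combinations
print(pairs == list(combinations(range(10), 2)))  # True
```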