5 Benchmarking SpotOptim with Sklearn Kriging (Matern Kernel) on 6D Rosenbrock and 10D Michalewicz Functions

import warnings

warnings.filterwarnings("ignore")

import json

import numpy as np

from spotoptim import SpotOptim

from spotoptim.function import rosenbrock

These test functions were used during the Dagstuhl Seminar 25451 "Bayesian Optimisation" (Nov 02 – Nov 07, 2025).

This notebook demonstrates the use of SpotOptim with sklearn’s Gaussian Process Regressor as a surrogate model.
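The Matern kernel named in the chapter title is used with smoothness nu = 2.5 in the surrogate definitions further down. For that value the kernel has the closed form k(r) = σ² (1 + √5 r/ℓ + 5r²/(3ℓ²)) exp(−√5 r/ℓ), where r is the Euclidean distance between two points and ℓ is the length scale. The following NumPy sketch evaluates it next to the RBF kernel for comparison. The function names `matern52` and `rbf` and their default parameters are illustrative assumptions and are not part of sklearn or spotoptim.

```python
import numpy as np


def matern52(x1, x2, length_scale=1.0, variance=1.0):
    """Matern kernel with nu = 2.5 (hypothetical helper, not sklearn's API)."""
    r = np.linalg.norm(np.asarray(x1, dtype=float) - np.asarray(x2, dtype=float))
    s = np.sqrt(5.0) * r / length_scale
    # s**2 / 3 equals 5 r^2 / (3 l^2) in the closed form above
    return variance * (1.0 + s + s**2 / 3.0) * np.exp(-s)


def rbf(x1, x2, length_scale=1.0, variance=1.0):
    """Squared-exponential (RBF) kernel, shown only for comparison."""
    r = np.linalg.norm(np.asarray(x1, dtype=float) - np.asarray(x2, dtype=float))
    return variance * np.exp(-0.5 * (r / length_scale) ** 2)


a = np.zeros(6)
for d in (0.0, 0.5, 1.0, 2.0):
    b = a.copy()
    b[0] = d  # shift one coordinate so that the distance between a and b equals d
    print(f"r = {d:.1f}  matern52 = {matern52(a, b):.4f}  rbf = {rbf(a, b):.4f}")
```

Both kernels equal the variance at r = 0 and decay with distance. The Matern 5/2 kernel corresponds to sample paths that are twice differentiable, whereas the RBF kernel assumes infinitely smooth functions.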

5.1 SpotOptim with Sklearn Kriging in 6 Dimensions: Rosenbrock Function

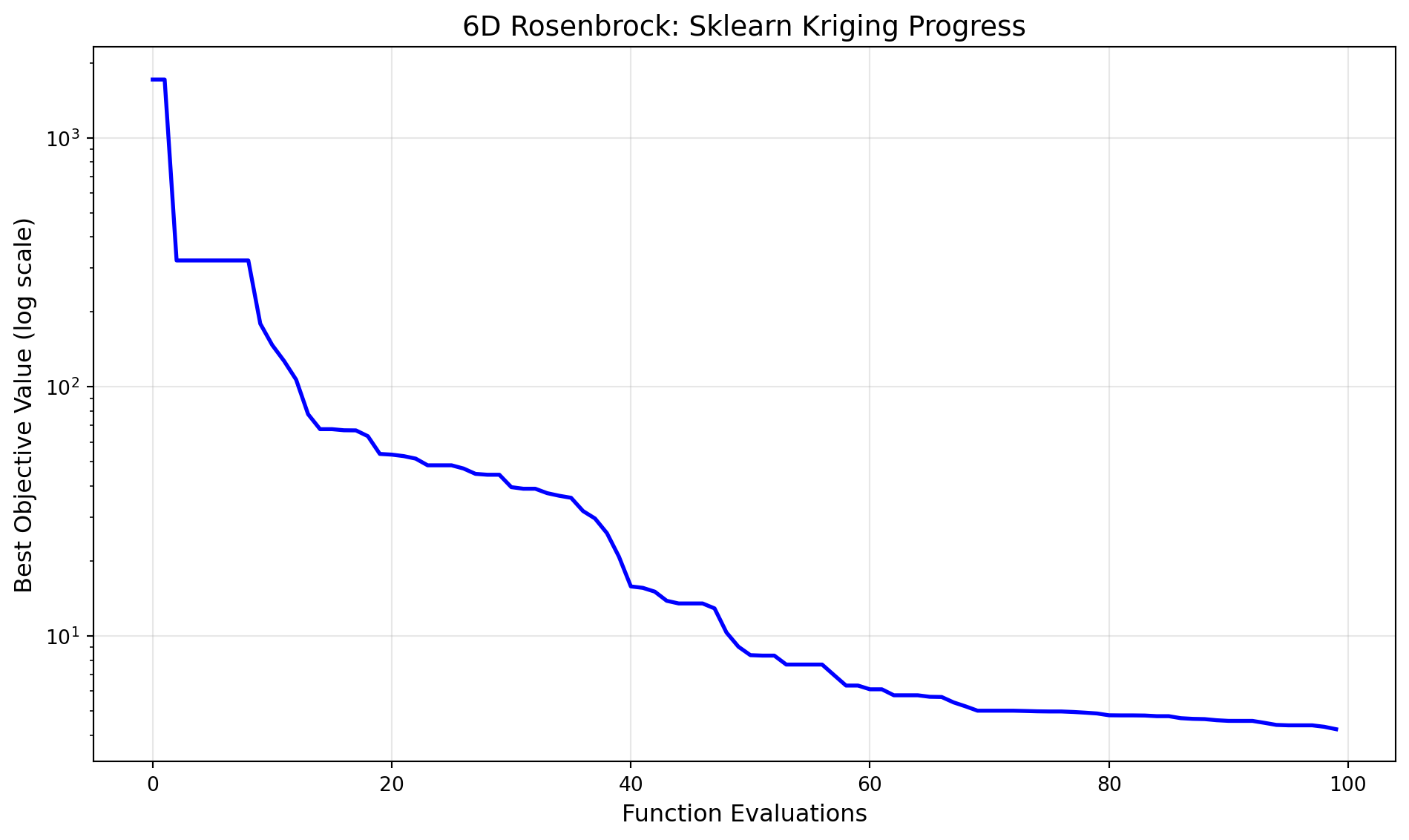

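This section demonstrates how to use the SpotOptim class with sklearn’s Gaussian Process Regressor (using a Matern kernel) as a surrogate on the 6-dimensional Rosenbrock function. The budget is 100 function evaluations in total; as the output below shows, this count includes the 6 points of the initial design, which leaves 94 surrogate-guided iterations.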
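For reference, the d-dimensional Rosenbrock function is commonly defined as f(x) = Σ_{i=1}^{d−1} [100 (x_{i+1} − x_i²)² + (1 − x_i)²], with global minimum f = 0 at x = (1, ..., 1). The sketch below implements that textbook definition in NumPy. The name `rosenbrock_nd` is a hypothetical stand-in, and it is an assumption that `spotoptim.function.rosenbrock` uses the same formula and input layout.

```python
import numpy as np


def rosenbrock_nd(X):
    """Textbook Rosenbrock function (hypothetical helper).

    Accepts a single point of shape (n_dim,) or a batch of shape
    (n_samples, n_dim) and returns an array of shape (n_samples,).
    """
    X = np.atleast_2d(np.asarray(X, dtype=float))
    # Sum over consecutive coordinate pairs (x_i, x_{i+1})
    return np.sum(
        100.0 * (X[:, 1:] - X[:, :-1] ** 2) ** 2 + (1.0 - X[:, :-1]) ** 2,
        axis=1,
    )


print(rosenbrock_nd(np.ones(6)))   # [0.]
print(rosenbrock_nd(np.zeros(6)))  # [5.]

# Best point printed by the single run in Section 5.1.3
x_reported = np.array(
    [0.28207773, 0.07110936, 0.01142581, -0.0095834, 0.03223602, 0.0095451]
)
print(rosenbrock_nd(x_reported))
```

Evaluating the sketch at the best point printed in Section 5.1.3 reproduces the logged value 4.4426 to the printed precision, which is consistent with the assumption that the library function follows the textbook definition.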

5.1.1 Define the 6D Rosenbrock Function

dim = 6

lower = np.full(dim, -2.0)

upper = np.full(dim, 2.0)

bounds = list(zip(lower, upper))

fun = rosenbrock

max_iter = 100

5.1.2 Set up SpotOptim Parameters

n_initial = dim

seed = 321

5.1.3 Sklearn Gaussian Process Regressor as Surrogate

from sklearn.gaussian_process import GaussianProcessRegressor

from sklearn.gaussian_process.kernels import Matern, ConstantKernel

# Use a Matern kernel instead of the standard RBF kernel

kernel = ConstantKernel(1.0, (1e-2, 1e12)) * Matern(
    length_scale=1.0,
    length_scale_bounds=(1e-4, 1e2),
    nu=2.5
)

surrogate = GaussianProcessRegressor(kernel=kernel, n_restarts_optimizer=100)

# Create SpotOptim instance with sklearn surrogate

opt_rosen = SpotOptim(
    fun=fun,
    bounds=bounds,
    n_initial=n_initial,
    max_iter=max_iter,
    surrogate=surrogate,
    seed=seed,
    verbose=1
)

# Run optimization

result_rosen = opt_rosen.optimize()

TensorBoard logging disabled

Initial best: f(x) = 321.834153

Iter 1 | Best: 321.834153 | Curr: 3523.030600 | Rate: 0.00 | Evals: 7.0%

Iter 2 | Best: 321.834153 | Curr: 1535.228299 | Rate: 0.00 | Evals: 8.0%

Iter 3 | Best: 321.834153 | Curr: 326.969698 | Rate: 0.00 | Evals: 9.0%

Iter 4 | Best: 179.356120 | Rate: 0.25 | Evals: 10.0%

Iter 5 | Best: 147.216512 | Rate: 0.40 | Evals: 11.0%

Iter 6 | Best: 126.879058 | Rate: 0.50 | Evals: 12.0%

Iter 7 | Best: 106.910445 | Rate: 0.57 | Evals: 13.0%

Iter 8 | Best: 77.690090 | Rate: 0.62 | Evals: 14.0%

Iter 9 | Best: 67.650765 | Rate: 0.67 | Evals: 15.0%

Iter 10 | Best: 67.650765 | Curr: 70.996531 | Rate: 0.60 | Evals: 16.0%

Iter 11 | Best: 66.959383 | Rate: 0.64 | Evals: 17.0%

Iter 12 | Best: 66.886607 | Rate: 0.67 | Evals: 18.0%

Iter 13 | Best: 63.396091 | Rate: 0.69 | Evals: 19.0%

Iter 14 | Best: 53.830939 | Rate: 0.71 | Evals: 20.0%

Iter 15 | Best: 53.480172 | Rate: 0.73 | Evals: 21.0%

Iter 16 | Best: 52.741893 | Rate: 0.75 | Evals: 22.0%

Iter 17 | Best: 51.637049 | Rate: 0.76 | Evals: 23.0%

Iter 18 | Best: 48.385433 | Rate: 0.78 | Evals: 24.0%

Iter 19 | Best: 48.385433 | Curr: 48.448189 | Rate: 0.74 | Evals: 25.0%

Iter 20 | Best: 48.057586 | Rate: 0.75 | Evals: 26.0%

Iter 21 | Best: 48.057586 | Curr: 48.216762 | Rate: 0.71 | Evals: 27.0%

Iter 22 | Best: 47.104651 | Rate: 0.73 | Evals: 28.0%

Iter 23 | Best: 45.850796 | Rate: 0.74 | Evals: 29.0%

Iter 24 | Best: 45.062114 | Rate: 0.75 | Evals: 30.0%

Iter 25 | Best: 43.538121 | Rate: 0.76 | Evals: 31.0%

Iter 26 | Best: 43.468547 | Rate: 0.77 | Evals: 32.0%

Iter 27 | Best: 43.468547 | Curr: 43.881459 | Rate: 0.74 | Evals: 33.0%

Iter 28 | Best: 39.675382 | Rate: 0.75 | Evals: 34.0%

Iter 29 | Best: 38.393633 | Rate: 0.76 | Evals: 35.0%

Iter 30 | Best: 35.523588 | Rate: 0.77 | Evals: 36.0%

Iter 31 | Best: 35.484382 | Rate: 0.77 | Evals: 37.0%

Iter 32 | Best: 35.484382 | Curr: 35.825494 | Rate: 0.75 | Evals: 38.0%

Iter 33 | Best: 34.396823 | Rate: 0.76 | Evals: 39.0%

Iter 34 | Best: 34.023846 | Rate: 0.76 | Evals: 40.0%

Iter 35 | Best: 31.148824 | Rate: 0.77 | Evals: 41.0%

Iter 36 | Best: 27.483742 | Rate: 0.78 | Evals: 42.0%

Iter 37 | Best: 27.483742 | Curr: 30.312244 | Rate: 0.76 | Evals: 43.0%

Iter 38 | Best: 25.628007 | Rate: 0.76 | Evals: 44.0%

Iter 39 | Best: 22.970574 | Rate: 0.77 | Evals: 45.0%

Iter 40 | Best: 22.970574 | Curr: 23.633260 | Rate: 0.75 | Evals: 46.0%

Iter 41 | Best: 22.074924 | Rate: 0.76 | Evals: 47.0%

Iter 42 | Best: 21.621256 | Rate: 0.76 | Evals: 48.0%

Iter 43 | Best: 21.621256 | Curr: 22.178193 | Rate: 0.74 | Evals: 49.0%

Iter 44 | Best: 21.621256 | Curr: 21.696325 | Rate: 0.73 | Evals: 50.0%

Iter 45 | Best: 19.219823 | Rate: 0.73 | Evals: 51.0%

Iter 46 | Best: 14.774081 | Rate: 0.74 | Evals: 52.0%

Iter 47 | Best: 14.774081 | Curr: 14.935796 | Rate: 0.72 | Evals: 53.0%

Iter 48 | Best: 14.735057 | Rate: 0.73 | Evals: 54.0%

Iter 49 | Best: 14.735057 | Curr: 14.887048 | Rate: 0.71 | Evals: 55.0%

Iter 50 | Best: 14.570983 | Rate: 0.72 | Evals: 56.0%

Iter 51 | Best: 14.299982 | Rate: 0.73 | Evals: 57.0%

Iter 52 | Best: 11.894840 | Rate: 0.73 | Evals: 58.0%

Iter 53 | Best: 7.989747 | Rate: 0.74 | Evals: 59.0%

Iter 54 | Best: 6.748924 | Rate: 0.74 | Evals: 60.0%

Iter 55 | Best: 6.593840 | Rate: 0.75 | Evals: 61.0%

Iter 56 | Best: 5.990110 | Rate: 0.75 | Evals: 62.0%

Iter 57 | Best: 5.713477 | Rate: 0.75 | Evals: 63.0%

Iter 58 | Best: 5.471658 | Rate: 0.76 | Evals: 64.0%

Iter 59 | Best: 5.466964 | Rate: 0.76 | Evals: 65.0%

Iter 60 | Best: 5.466964 | Curr: 5.496778 | Rate: 0.75 | Evals: 66.0%

Iter 61 | Best: 5.436363 | Rate: 0.75 | Evals: 67.0%

Iter 62 | Best: 5.380911 | Rate: 0.76 | Evals: 68.0%

Iter 63 | Best: 5.319051 | Rate: 0.76 | Evals: 69.0%

Iter 64 | Best: 5.261751 | Rate: 0.77 | Evals: 70.0%

Iter 65 | Best: 5.238434 | Rate: 0.77 | Evals: 71.0%

Iter 66 | Best: 5.122744 | Rate: 0.77 | Evals: 72.0%

Iter 67 | Best: 5.122744 | Curr: 5.166099 | Rate: 0.76 | Evals: 73.0%

Iter 68 | Best: 5.107638 | Rate: 0.76 | Evals: 74.0%

Iter 69 | Best: 5.098379 | Rate: 0.77 | Evals: 75.0%

Iter 70 | Best: 5.098379 | Curr: 5.118825 | Rate: 0.76 | Evals: 76.0%

Iter 71 | Best: 5.083977 | Rate: 0.76 | Evals: 77.0%

Iter 72 | Best: 5.083977 | Curr: 5.099803 | Rate: 0.75 | Evals: 78.0%

Iter 73 | Best: 5.067823 | Rate: 0.75 | Evals: 79.0%

Iter 74 | Best: 5.055556 | Rate: 0.76 | Evals: 80.0%

Iter 75 | Best: 5.055556 | Curr: 5.061091 | Rate: 0.75 | Evals: 81.0%

Iter 76 | Best: 5.052843 | Rate: 0.75 | Evals: 82.0%

Iter 77 | Best: 5.031295 | Rate: 0.75 | Evals: 83.0%

Iter 78 | Best: 5.016746 | Rate: 0.76 | Evals: 84.0%

Iter 79 | Best: 4.994297 | Rate: 0.76 | Evals: 85.0%

Iter 80 | Best: 4.906668 | Rate: 0.76 | Evals: 86.0%

Iter 81 | Best: 4.906668 | Curr: 4.928198 | Rate: 0.75 | Evals: 87.0%

Iter 82 | Best: 4.880380 | Rate: 0.76 | Evals: 88.0%

Iter 83 | Best: 4.743828 | Rate: 0.76 | Evals: 89.0%

Iter 84 | Best: 4.701228 | Rate: 0.76 | Evals: 90.0%

Iter 85 | Best: 4.701228 | Curr: 4.736901 | Rate: 0.75 | Evals: 91.0%

Iter 86 | Best: 4.701228 | Curr: 4.727649 | Rate: 0.74 | Evals: 92.0%

Iter 87 | Best: 4.689752 | Rate: 0.75 | Evals: 93.0%

Iter 88 | Best: 4.689752 | Curr: 4.701422 | Rate: 0.74 | Evals: 94.0%

Iter 89 | Best: 4.685291 | Rate: 0.74 | Evals: 95.0%

Iter 90 | Best: 4.685291 | Curr: 4.771983 | Rate: 0.73 | Evals: 96.0%

Iter 91 | Best: 4.532723 | Rate: 0.74 | Evals: 97.0%

Iter 92 | Best: 4.501827 | Rate: 0.74 | Evals: 98.0%

Iter 93 | Best: 4.459025 | Rate: 0.74 | Evals: 99.0%

Iter 94 | Best: 4.442562 | Rate: 0.74 | Evals: 100.0%

print(f"[6D] Sklearn Kriging: min y = {result_rosen.fun:.4f} at x = {result_rosen.x}")

print(f"Number of function evaluations: {result_rosen.nfev}")

print(f"Number of iterations: {result_rosen.nit}")

[6D] Sklearn Kriging: min y = 4.4426 at x = [ 0.28207773 0.07110936 0.01142581 -0.0095834 0.03223602 0.0095451 ]

Number of function evaluations: 100

Number of iterations: 94

5.1.4 Visualize Optimization Progress

import matplotlib.pyplot as plt

# Plot the optimization progress

plt.figure(figsize=(10, 6))

plt.semilogy(np.minimum.accumulate(opt_rosen.y_), 'b-', linewidth=2)

plt.xlabel('Function Evaluations', fontsize=12)

plt.ylabel('Best Objective Value (log scale)', fontsize=12)

plt.title('6D Rosenbrock: Sklearn Kriging Progress', fontsize=14)

plt.grid(True, alpha=0.3)

plt.tight_layout()

plt.show()
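The plot above relies on `np.minimum.accumulate` to turn the raw sequence of objective values into a best-so-far trace. A minimal sketch with made-up values (not taken from the run) shows what that call returns:

```python
import numpy as np

# Hypothetical objective values in evaluation order
y = np.array([5.0, 3.0, 4.0, 1.0, 2.0])

# Running minimum: entry k is the best value among the first k + 1 evaluations
best_so_far = np.minimum.accumulate(y)
print(best_so_far)  # [5. 3. 3. 1. 1.]

# Index of the evaluation at which the overall best value was found
first_hit = int(np.argmin(y))
print(first_hit)  # 3
```

A logarithmic axis needs positive values, so `plt.semilogy` suits the non-negative Rosenbrock trace, while the Michalewicz plot in Section 5.2.4 uses a linear axis via `plt.plot` because its objective values are negative.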

5.1.5 Evaluation of Multiple Repeats

To perform 30 repeats and collect statistics:

# Perform 30 independent runs

n_repeats = 30

results = []

print(f"Running {n_repeats} independent optimizations...")

for i in range(n_repeats):
    kernel_i = ConstantKernel(1.0, (1e-2, 1e12)) * Matern(
        length_scale=1.0,
        length_scale_bounds=(1e-4, 1e2),
        nu=2.5
    )
    surrogate_i = GaussianProcessRegressor(kernel=kernel_i, n_restarts_optimizer=100)
    opt_i = SpotOptim(
        fun=fun,
        bounds=bounds,
        n_initial=n_initial,
        max_iter=max_iter,
        surrogate=surrogate_i,
        seed=seed + i,  # Different seed for each run
        verbose=0
    )
    result_i = opt_i.optimize()
    results.append(result_i.fun)
    if (i + 1) % 10 == 0:
        print(f" Completed {i + 1}/{n_repeats} runs")

# Compute statistics

mean_result = np.mean(results)

std_result = np.std(results)

min_result = np.min(results)

max_result = np.max(results)

print(f"\nResults over {n_repeats} runs:")

print(f" Mean of best values: {mean_result:.6f}")

print(f" Std of best values: {std_result:.6f}")

print(f" Min of best values: {min_result:.6f}")

print(f" Max of best values: {max_result:.6f}")

5.2 SpotOptim with Sklearn Kriging in 10 Dimensions: Michalewicz Function

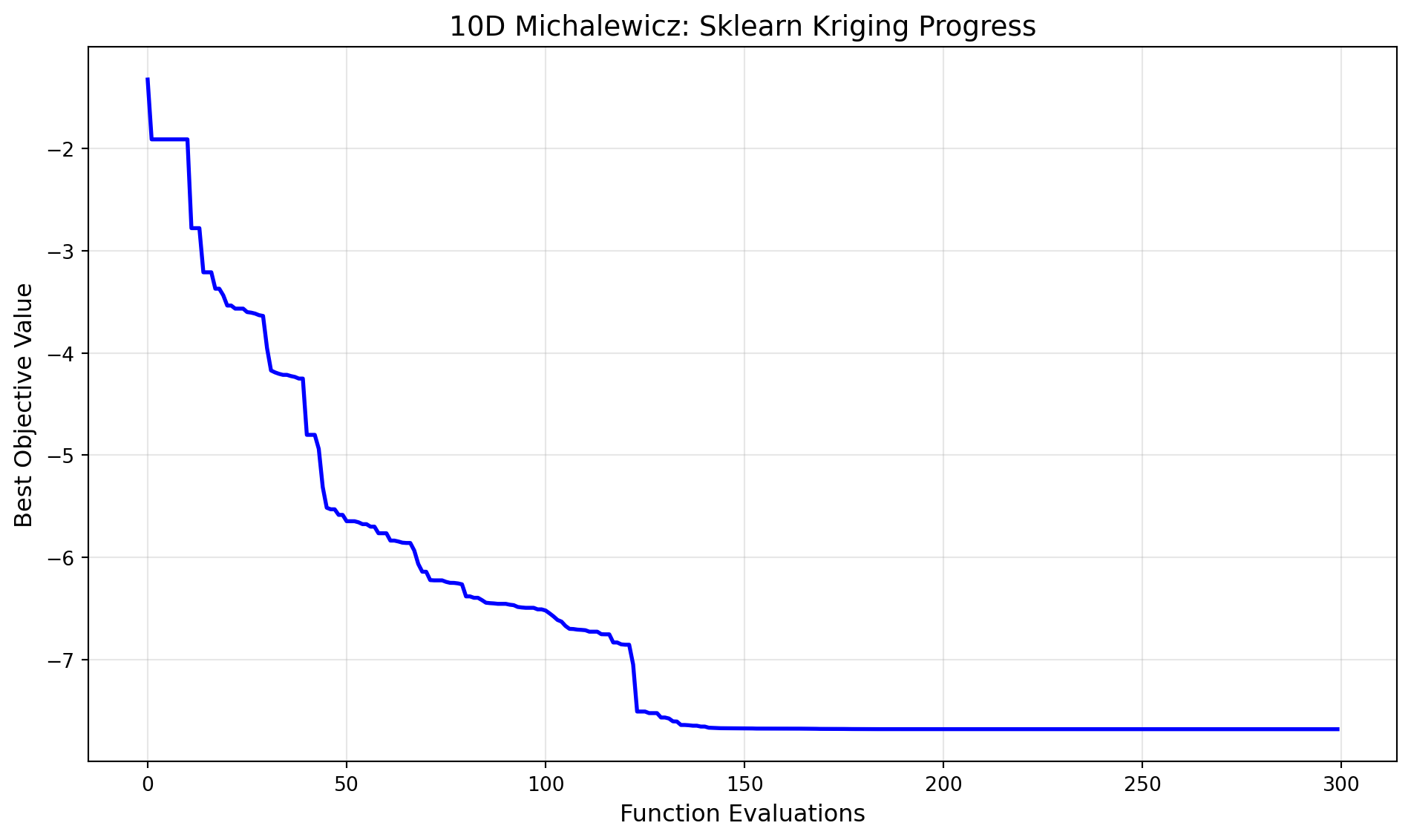

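This section demonstrates how to use the SpotOptim class with sklearn’s Gaussian Process Regressor (using a Matern kernel) as a surrogate on the 10-dimensional Michalewicz function. The budget is 300 function evaluations in total; as the output below shows, this count includes the 10 points of the initial design, which leaves 290 surrogate-guided iterations.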
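For reference, the Michalewicz function is commonly defined on [0, π]^d as f(x) = −Σ_{i=1}^{d} sin(x_i) · sin^{2m}(i · x_i² / π), usually with steepness m = 10. The sketch below implements that textbook form in NumPy. The name `michalewicz_nd` is a hypothetical stand-in, and it is an assumption that `spotoptim.function.michalewicz` uses the same formula, the same m, and the same input layout.

```python
import numpy as np


def michalewicz_nd(X, m=10):
    """Textbook Michalewicz function (hypothetical helper).

    Accepts a single point of shape (n_dim,) or a batch of shape
    (n_samples, n_dim) and returns an array of shape (n_samples,).
    """
    X = np.atleast_2d(np.asarray(X, dtype=float))
    i = np.arange(1, X.shape[1] + 1)  # 1-based coordinate index
    return -np.sum(np.sin(X) * np.sin(i * X**2 / np.pi) ** (2 * m), axis=1)


# Best point printed by the single run in Section 5.2.3
x_reported = np.array(
    [2.23602788, 2.71699133, 2.22531978, 2.48054435, 2.62573334,
     1.85333282, 2.21989757, 1.36205952, 1.28722362, 1.21556365]
)
print(michalewicz_nd(x_reported))
```

Evaluating the sketch at the best point printed in Section 5.2.3 gives a value that agrees with the logged -6.7455 to about the printed precision, which is consistent with the m = 10 assumption. The many narrow valleys created by the high power 2m are what make this function hard for a surrogate to model.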

5.2.1 Define the 10D Michalewicz Function

from spotoptim.function import michalewicz

dim = 10

lower = np.full(dim, 0.0)

upper = np.full(dim, np.pi)

bounds = list(zip(lower, upper))

fun = michalewicz

max_iter = 300

5.2.2 Set up SpotOptim Parameters

n_initial = dim

seed = 321

5.2.3 Sklearn Gaussian Process Regressor as Surrogate

from sklearn.gaussian_process import GaussianProcessRegressor

from sklearn.gaussian_process.kernels import Matern, ConstantKernel

# Use a Matern kernel instead of the standard RBF kernel

kernel = ConstantKernel(1.0, (1e-2, 1e12)) * Matern(
    length_scale=1.0,
    length_scale_bounds=(1e-4, 1e2),
    nu=2.5
)

surrogate = GaussianProcessRegressor(kernel=kernel, n_restarts_optimizer=100)

# Create SpotOptim instance with sklearn surrogate

opt_micha = SpotOptim(
    fun=fun,
    bounds=bounds,
    n_initial=n_initial,
    max_iter=max_iter,
    surrogate=surrogate,
    seed=seed,
    verbose=1
)

# Run optimization

result_micha = opt_micha.optimize()

TensorBoard logging disabled

Initial best: f(x) = -1.909129

Iter 1 | Best: -1.909129 | Curr: -0.467202 | Rate: 0.00 | Evals: 3.7%

Iter 2 | Best: -2.778262 | Rate: 0.50 | Evals: 4.0%

Iter 3 | Best: -2.778262 | Curr: -1.592337 | Rate: 0.33 | Evals: 4.3%

Iter 4 | Best: -2.778262 | Curr: -1.662544 | Rate: 0.25 | Evals: 4.7%

Iter 5 | Best: -3.211991 | Rate: 0.40 | Evals: 5.0%

Iter 6 | Best: -3.211991 | Curr: -2.798917 | Rate: 0.33 | Evals: 5.3%

Iter 7 | Best: -3.211991 | Curr: -2.988576 | Rate: 0.29 | Evals: 5.7%

Iter 8 | Best: -3.350611 | Rate: 0.38 | Evals: 6.0%

Iter 9 | Best: -3.350611 | Curr: -3.217390 | Rate: 0.33 | Evals: 6.3%

Optimizer candidate 1/3 was duplicate/invalid.

Iter 10 | Best: -3.350611 | Curr: -1.168629 | Rate: 0.30 | Evals: 6.7%

Iter 11 | Best: -3.420176 | Rate: 0.36 | Evals: 7.0%

Iter 12 | Best: -3.477838 | Rate: 0.42 | Evals: 7.3%

Optimizer candidate 1/3 was duplicate/invalid.

Iter 13 | Best: -3.477838 | Curr: -1.449586 | Rate: 0.38 | Evals: 7.7%

Iter 14 | Best: -3.477838 | Curr: -1.352781 | Rate: 0.36 | Evals: 8.0%

Iter 15 | Best: -3.477838 | Curr: -3.471892 | Rate: 0.33 | Evals: 8.3%

Iter 16 | Best: -3.541510 | Rate: 0.38 | Evals: 8.7%

Iter 17 | Best: -3.541510 | Curr: -1.622355 | Rate: 0.35 | Evals: 9.0%

Iter 18 | Best: -3.541510 | Curr: -3.502476 | Rate: 0.33 | Evals: 9.3%

Iter 19 | Best: -3.541510 | Curr: -3.497143 | Rate: 0.32 | Evals: 9.7%

Iter 20 | Best: -3.541510 | Curr: -1.760444 | Rate: 0.30 | Evals: 10.0%

Iter 21 | Best: -3.541510 | Curr: -1.832617 | Rate: 0.29 | Evals: 10.3%

Iter 22 | Best: -3.623121 | Rate: 0.32 | Evals: 10.7%

Iter 23 | Best: -3.623121 | Curr: -0.786191 | Rate: 0.30 | Evals: 11.0%

Iter 24 | Best: -3.645627 | Rate: 0.33 | Evals: 11.3%

Optimizer candidate 1/3 was duplicate/invalid.

Iter 25 | Best: -3.645627 | Curr: -0.913552 | Rate: 0.32 | Evals: 11.7%

Iter 26 | Best: -3.879507 | Rate: 0.35 | Evals: 12.0%

Iter 27 | Best: -4.718084 | Rate: 0.37 | Evals: 12.3%

Iter 28 | Best: -4.718084 | Curr: -4.066472 | Rate: 0.36 | Evals: 12.7%

Iter 29 | Best: -4.756804 | Rate: 0.38 | Evals: 13.0%

Iter 30 | Best: -4.756804 | Curr: -4.751526 | Rate: 0.37 | Evals: 13.3%

Iter 31 | Best: -4.760582 | Rate: 0.39 | Evals: 13.7%

Iter 32 | Best: -4.760582 | Curr: -4.646373 | Rate: 0.38 | Evals: 14.0%

Iter 33 | Best: -4.898053 | Rate: 0.39 | Evals: 14.3%

Iter 34 | Best: -4.898053 | Curr: -1.857547 | Rate: 0.38 | Evals: 14.7%

Iter 35 | Best: -4.940037 | Rate: 0.40 | Evals: 15.0%

Iter 36 | Best: -4.953219 | Rate: 0.42 | Evals: 15.3%

Iter 37 | Best: -4.953219 | Curr: -1.925003 | Rate: 0.41 | Evals: 15.7%

Iter 38 | Best: -4.953219 | Curr: -4.892561 | Rate: 0.39 | Evals: 16.0%

Iter 39 | Best: -4.987714 | Rate: 0.41 | Evals: 16.3%

Iter 40 | Best: -4.987714 | Curr: -4.986228 | Rate: 0.40 | Evals: 16.7%

Iter 41 | Best: -4.992740 | Rate: 0.41 | Evals: 17.0%

Iter 42 | Best: -4.992740 | Curr: -1.966305 | Rate: 0.40 | Evals: 17.3%

Iter 43 | Best: -4.992740 | Curr: -1.989955 | Rate: 0.40 | Evals: 17.7%

Iter 44 | Best: -4.992740 | Curr: -4.988447 | Rate: 0.39 | Evals: 18.0%

Iter 45 | Best: -5.055589 | Rate: 0.40 | Evals: 18.3%

Iter 46 | Best: -5.163321 | Rate: 0.41 | Evals: 18.7%

Iter 47 | Best: -5.330200 | Rate: 0.43 | Evals: 19.0%

Iter 48 | Best: -5.330200 | Curr: -5.315522 | Rate: 0.42 | Evals: 19.3%

Iter 49 | Best: -5.423851 | Rate: 0.43 | Evals: 19.7%

Iter 50 | Best: -5.487307 | Rate: 0.44 | Evals: 20.0%

Iter 51 | Best: -5.514821 | Rate: 0.45 | Evals: 20.3%

Iter 52 | Best: -5.514821 | Curr: -5.513204 | Rate: 0.44 | Evals: 20.7%

Iter 53 | Best: -5.544603 | Rate: 0.45 | Evals: 21.0%

Iter 54 | Best: -5.544603 | Curr: -5.498218 | Rate: 0.44 | Evals: 21.3%

Iter 55 | Best: -5.544603 | Curr: -2.013015 | Rate: 0.44 | Evals: 21.7%

Iter 56 | Best: -5.544603 | Curr: -0.986604 | Rate: 0.43 | Evals: 22.0%

Iter 57 | Best: -5.710968 | Rate: 0.44 | Evals: 22.3%

Iter 58 | Best: -5.740085 | Rate: 0.45 | Evals: 22.7%

Iter 59 | Best: -5.740085 | Curr: -5.731862 | Rate: 0.44 | Evals: 23.0%

Iter 60 | Best: -5.740085 | Curr: -2.137932 | Rate: 0.43 | Evals: 23.3%

Iter 61 | Best: -5.749742 | Rate: 0.44 | Evals: 23.7%

Iter 62 | Best: -5.751414 | Rate: 0.45 | Evals: 24.0%

Iter 63 | Best: -5.751414 | Curr: -5.723542 | Rate: 0.44 | Evals: 24.3%

Iter 64 | Best: -5.751414 | Curr: -1.490073 | Rate: 0.44 | Evals: 24.7%

Iter 65 | Best: -5.751414 | Curr: -2.196075 | Rate: 0.43 | Evals: 25.0%

Iter 66 | Best: -5.977776 | Rate: 0.44 | Evals: 25.3%

Iter 67 | Best: -5.990616 | Rate: 0.45 | Evals: 25.7%

Iter 68 | Best: -5.990616 | Curr: -2.003978 | Rate: 0.44 | Evals: 26.0%

Iter 69 | Best: -5.991110 | Rate: 0.45 | Evals: 26.3%

Iter 70 | Best: -6.002744 | Rate: 0.46 | Evals: 26.7%

Iter 71 | Best: -6.048590 | Rate: 0.46 | Evals: 27.0%

Iter 72 | Best: -6.056870 | Rate: 0.47 | Evals: 27.3%

Iter 73 | Best: -6.057568 | Rate: 0.48 | Evals: 27.7%

Iter 74 | Best: -6.063140 | Rate: 0.49 | Evals: 28.0%

Iter 75 | Best: -6.063140 | Curr: -1.112713 | Rate: 0.48 | Evals: 28.3%

Iter 76 | Best: -6.063140 | Curr: -6.050027 | Rate: 0.47 | Evals: 28.7%

Iter 77 | Best: -6.164413 | Rate: 0.48 | Evals: 29.0%

Iter 78 | Best: -6.164413 | Curr: -2.218945 | Rate: 0.47 | Evals: 29.3%

Iter 79 | Best: -6.164413 | Curr: -6.164111 | Rate: 0.47 | Evals: 29.7%

Iter 80 | Best: -6.170468 | Rate: 0.47 | Evals: 30.0%

Iter 81 | Best: -6.170468 | Curr: -2.224685 | Rate: 0.47 | Evals: 30.3%

Iter 82 | Best: -6.170468 | Curr: -2.010742 | Rate: 0.46 | Evals: 30.7%

Iter 83 | Best: -6.170468 | Curr: -2.015806 | Rate: 0.46 | Evals: 31.0%

Iter 84 | Best: -6.183718 | Rate: 0.46 | Evals: 31.3%

Iter 85 | Best: -6.183718 | Curr: -6.182447 | Rate: 0.46 | Evals: 31.7%

Iter 86 | Best: -6.195052 | Rate: 0.47 | Evals: 32.0%

Iter 87 | Best: -6.195052 | Curr: -2.029081 | Rate: 0.46 | Evals: 32.3%

Iter 88 | Best: -6.195426 | Rate: 0.47 | Evals: 32.7%

Iter 89 | Best: -6.200572 | Rate: 0.47 | Evals: 33.0%

Iter 90 | Best: -6.200572 | Curr: -2.030962 | Rate: 0.47 | Evals: 33.3%

Iter 91 | Best: -6.200572 | Curr: -1.124819 | Rate: 0.46 | Evals: 33.7%

Iter 92 | Best: -6.239740 | Rate: 0.47 | Evals: 34.0%

Iter 93 | Best: -6.239740 | Curr: -6.237672 | Rate: 0.46 | Evals: 34.3%

Iter 94 | Best: -6.251388 | Rate: 0.47 | Evals: 34.7%

Iter 95 | Best: -6.251388 | Curr: -2.225876 | Rate: 0.46 | Evals: 35.0%

Iter 96 | Best: -6.251388 | Curr: -0.786240 | Rate: 0.46 | Evals: 35.3%

Iter 97 | Best: -6.251388 | Curr: -2.224921 | Rate: 0.45 | Evals: 35.7%

Iter 98 | Best: -6.251388 | Curr: -2.293240 | Rate: 0.45 | Evals: 36.0%

Iter 99 | Best: -6.251388 | Curr: -2.303387 | Rate: 0.44 | Evals: 36.3%

Iter 100 | Best: -6.254509 | Rate: 0.45 | Evals: 36.7%

Iter 101 | Best: -6.254509 | Curr: -2.304992 | Rate: 0.45 | Evals: 37.0%

Optimizer candidate 1/3 was duplicate/invalid.

Iter 102 | Best: -6.254509 | Curr: -1.758762 | Rate: 0.44 | Evals: 37.3%

Iter 103 | Best: -6.254509 | Curr: -2.362865 | Rate: 0.44 | Evals: 37.7%

Iter 104 | Best: -6.254509 | Curr: -2.348322 | Rate: 0.44 | Evals: 38.0%

Iter 105 | Best: -6.254509 | Curr: -2.027437 | Rate: 0.43 | Evals: 38.3%

Iter 106 | Best: -6.254509 | Curr: -2.391746 | Rate: 0.43 | Evals: 38.7%

Iter 107 | Best: -6.254509 | Curr: -6.253186 | Rate: 0.43 | Evals: 39.0%

Iter 108 | Best: -6.277164 | Rate: 0.43 | Evals: 39.3%

Iter 109 | Best: -6.277425 | Rate: 0.44 | Evals: 39.7%

Iter 110 | Best: -6.277425 | Curr: -6.276328 | Rate: 0.44 | Evals: 40.0%

Iter 111 | Best: -6.288893 | Rate: 0.44 | Evals: 40.3%

Iter 112 | Best: -6.289784 | Rate: 0.44 | Evals: 40.7%

Iter 113 | Best: -6.291421 | Rate: 0.45 | Evals: 41.0%

Iter 114 | Best: -6.293083 | Rate: 0.46 | Evals: 41.3%

Iter 115 | Best: -6.293083 | Curr: -6.292970 | Rate: 0.46 | Evals: 41.7%

Iter 116 | Best: -6.293643 | Rate: 0.46 | Evals: 42.0%

Iter 117 | Best: -6.293996 | Rate: 0.47 | Evals: 42.3%

Iter 118 | Best: -6.293996 | Curr: -6.293948 | Rate: 0.47 | Evals: 42.7%

Iter 119 | Best: -6.293996 | Curr: -1.490371 | Rate: 0.47 | Evals: 43.0%

Iter 120 | Best: -6.301918 | Rate: 0.48 | Evals: 43.3%

Iter 121 | Best: -6.301918 | Curr: -0.775860 | Rate: 0.48 | Evals: 43.7%

Iter 122 | Best: -6.302789 | Rate: 0.48 | Evals: 44.0%

Iter 123 | Best: -6.302789 | Curr: -6.302711 | Rate: 0.48 | Evals: 44.3%

Iter 124 | Best: -6.304016 | Rate: 0.48 | Evals: 44.7%

Iter 125 | Best: -6.308309 | Rate: 0.49 | Evals: 45.0%

Iter 126 | Best: -6.308369 | Rate: 0.49 | Evals: 45.3%

Iter 127 | Best: -6.308369 | Curr: -2.408169 | Rate: 0.48 | Evals: 45.7%

Iter 128 | Best: -6.308537 | Rate: 0.49 | Evals: 46.0%

Iter 129 | Best: -6.308537 | Curr: -2.433295 | Rate: 0.48 | Evals: 46.3%

Iter 130 | Best: -6.309349 | Rate: 0.49 | Evals: 46.7%

Iter 131 | Best: -6.309715 | Rate: 0.49 | Evals: 47.0%

Iter 132 | Best: -6.312788 | Rate: 0.50 | Evals: 47.3%

Iter 133 | Best: -6.319245 | Rate: 0.50 | Evals: 47.7%

Iter 134 | Best: -6.329490 | Rate: 0.51 | Evals: 48.0%

Iter 135 | Best: -6.329490 | Curr: -6.328972 | Rate: 0.50 | Evals: 48.3%

Iter 136 | Best: -6.329490 | Curr: -1.132906 | Rate: 0.49 | Evals: 48.7%

Iter 137 | Best: -6.329490 | Curr: -2.032633 | Rate: 0.49 | Evals: 49.0%

Iter 138 | Best: -6.332480 | Rate: 0.50 | Evals: 49.3%

Iter 139 | Best: -6.332480 | Curr: -2.452340 | Rate: 0.49 | Evals: 49.7%

Iter 140 | Best: -6.343582 | Rate: 0.50 | Evals: 50.0%

Iter 141 | Best: -6.346320 | Rate: 0.50 | Evals: 50.3%

Iter 142 | Best: -6.346320 | Curr: -6.346154 | Rate: 0.50 | Evals: 50.7%

Iter 143 | Best: -6.347432 | Rate: 0.51 | Evals: 51.0%

Iter 144 | Best: -6.347432 | Curr: -2.477130 | Rate: 0.51 | Evals: 51.3%

Iter 145 | Best: -6.347432 | Curr: -2.080753 | Rate: 0.50 | Evals: 51.7%

Iter 146 | Best: -6.349563 | Rate: 0.50 | Evals: 52.0%

Iter 147 | Best: -6.350449 | Rate: 0.50 | Evals: 52.3%

Iter 148 | Best: -6.351700 | Rate: 0.51 | Evals: 52.7%

Iter 149 | Best: -6.352046 | Rate: 0.51 | Evals: 53.0%

Iter 150 | Best: -6.352046 | Curr: -2.512516 | Rate: 0.50 | Evals: 53.3%

Iter 151 | Best: -6.352232 | Rate: 0.50 | Evals: 53.7%

Iter 152 | Best: -6.352232 | Curr: -2.525852 | Rate: 0.50 | Evals: 54.0%

Iter 153 | Best: -6.352318 | Rate: 0.50 | Evals: 54.3%

Iter 154 | Best: -6.352318 | Curr: -2.526499 | Rate: 0.50 | Evals: 54.7%

Iter 155 | Best: -6.352318 | Curr: -2.527085 | Rate: 0.50 | Evals: 55.0%

Iter 156 | Best: -6.352318 | Curr: -6.352275 | Rate: 0.50 | Evals: 55.3%

Iter 157 | Best: -6.352318 | Curr: -0.793356 | Rate: 0.49 | Evals: 55.7%

Iter 158 | Best: -6.352633 | Rate: 0.49 | Evals: 56.0%

Iter 159 | Best: -6.352633 | Curr: -6.352542 | Rate: 0.49 | Evals: 56.3%

Iter 160 | Best: -6.352633 | Curr: -2.525323 | Rate: 0.49 | Evals: 56.7%

Iter 161 | Best: -6.352940 | Rate: 0.49 | Evals: 57.0%

Iter 162 | Best: -6.352940 | Curr: -2.556321 | Rate: 0.48 | Evals: 57.3%

Iter 163 | Best: -6.361329 | Rate: 0.49 | Evals: 57.7%

Iter 164 | Best: -6.375884 | Rate: 0.50 | Evals: 58.0%

Iter 165 | Best: -6.378251 | Rate: 0.51 | Evals: 58.3%

Iter 166 | Best: -6.380502 | Rate: 0.51 | Evals: 58.7%

Iter 167 | Best: -6.380502 | Curr: -6.380121 | Rate: 0.50 | Evals: 59.0%

Iter 168 | Best: -6.384125 | Rate: 0.51 | Evals: 59.3%

Iter 169 | Best: -6.384125 | Curr: -2.598851 | Rate: 0.50 | Evals: 59.7%

Iter 170 | Best: -6.384125 | Curr: -2.596444 | Rate: 0.49 | Evals: 60.0%

Iter 171 | Best: -6.384125 | Curr: -0.865635 | Rate: 0.48 | Evals: 60.3%

Iter 172 | Best: -6.384125 | Curr: -2.592670 | Rate: 0.47 | Evals: 60.7%

Iter 173 | Best: -6.385428 | Rate: 0.47 | Evals: 61.0%

Iter 174 | Best: -6.385464 | Rate: 0.47 | Evals: 61.3%

Iter 175 | Best: -6.385669 | Rate: 0.48 | Evals: 61.7%

Iter 176 | Best: -6.385710 | Rate: 0.49 | Evals: 62.0%

Iter 177 | Best: -6.385845 | Rate: 0.49 | Evals: 62.3%

Iter 178 | Best: -6.385845 | Curr: -6.385072 | Rate: 0.49 | Evals: 62.7%

Iter 179 | Best: -6.385845 | Curr: -6.383327 | Rate: 0.49 | Evals: 63.0%

Iter 180 | Best: -6.385956 | Rate: 0.49 | Evals: 63.3%

Iter 181 | Best: -6.387618 | Rate: 0.50 | Evals: 63.7%

Iter 182 | Best: -6.388747 | Rate: 0.51 | Evals: 64.0%

Iter 183 | Best: -6.388747 | Curr: -6.387081 | Rate: 0.51 | Evals: 64.3%

Iter 184 | Best: -6.388747 | Curr: -2.086196 | Rate: 0.50 | Evals: 64.7%

Iter 185 | Best: -6.390911 | Rate: 0.51 | Evals: 65.0%

Iter 186 | Best: -6.392838 | Rate: 0.51 | Evals: 65.3%

Iter 187 | Best: -6.393812 | Rate: 0.52 | Evals: 65.7%

Iter 188 | Best: -6.393812 | Curr: -6.391312 | Rate: 0.51 | Evals: 66.0%

Iter 189 | Best: -6.394898 | Rate: 0.51 | Evals: 66.3%

Iter 190 | Best: -6.395340 | Rate: 0.52 | Evals: 66.7%

Iter 191 | Best: -6.396468 | Rate: 0.53 | Evals: 67.0%

Iter 192 | Best: -6.396468 | Curr: -1.098505 | Rate: 0.52 | Evals: 67.3%

Iter 193 | Best: -6.396468 | Curr: -2.604949 | Rate: 0.52 | Evals: 67.7%

Iter 194 | Best: -6.396468 | Curr: -6.396463 | Rate: 0.51 | Evals: 68.0%

Iter 195 | Best: -6.396468 | Curr: -2.606466 | Rate: 0.51 | Evals: 68.3%

Iter 196 | Best: -6.396468 | Curr: -2.622660 | Rate: 0.51 | Evals: 68.7%

Iter 197 | Best: -6.396985 | Rate: 0.52 | Evals: 69.0%

Iter 198 | Best: -6.397630 | Rate: 0.53 | Evals: 69.3%

Iter 199 | Best: -6.397630 | Curr: -2.621368 | Rate: 0.53 | Evals: 69.7%

Iter 200 | Best: -6.397903 | Rate: 0.53 | Evals: 70.0%

Iter 201 | Best: -6.397903 | Curr: -2.628173 | Rate: 0.53 | Evals: 70.3%

Iter 202 | Best: -6.397903 | Curr: -2.170577 | Rate: 0.53 | Evals: 70.7%

Iter 203 | Best: -6.397922 | Rate: 0.54 | Evals: 71.0%

Iter 204 | Best: -6.398445 | Rate: 0.55 | Evals: 71.3%

Iter 205 | Best: -6.398445 | Curr: -2.638541 | Rate: 0.55 | Evals: 71.7%

Iter 206 | Best: -6.398719 | Rate: 0.56 | Evals: 72.0%

Iter 207 | Best: -6.398719 | Curr: -1.711353 | Rate: 0.56 | Evals: 72.3%

Iter 208 | Best: -6.398719 | Curr: -2.657427 | Rate: 0.55 | Evals: 72.7%

Iter 209 | Best: -6.398719 | Curr: -6.398457 | Rate: 0.54 | Evals: 73.0%

Iter 210 | Best: -6.404110 | Rate: 0.55 | Evals: 73.3%

Iter 211 | Best: -6.404110 | Curr: -2.166475 | Rate: 0.54 | Evals: 73.7%

Iter 212 | Best: -6.404110 | Curr: -2.679683 | Rate: 0.53 | Evals: 74.0%

Iter 213 | Best: -6.413157 | Rate: 0.53 | Evals: 74.3%

Iter 214 | Best: -6.413157 | Curr: -2.674059 | Rate: 0.52 | Evals: 74.7%

Iter 215 | Best: -6.424805 | Rate: 0.53 | Evals: 75.0%

Iter 216 | Best: -6.424805 | Curr: -6.424191 | Rate: 0.52 | Evals: 75.3%

Iter 217 | Best: -6.441167 | Rate: 0.52 | Evals: 75.7%

Iter 218 | Best: -6.441167 | Curr: -2.720631 | Rate: 0.52 | Evals: 76.0%

Iter 219 | Best: -6.446234 | Rate: 0.53 | Evals: 76.3%

Iter 220 | Best: -6.446234 | Curr: -2.773480 | Rate: 0.52 | Evals: 76.7%

Iter 221 | Best: -6.446234 | Curr: -2.776241 | Rate: 0.52 | Evals: 77.0%

Iter 222 | Best: -6.457461 | Rate: 0.52 | Evals: 77.3%

Iter 223 | Best: -6.466672 | Rate: 0.53 | Evals: 77.7%

Iter 224 | Best: -6.466672 | Curr: -6.458645 | Rate: 0.52 | Evals: 78.0%

Iter 225 | Best: -6.466672 | Curr: -2.231609 | Rate: 0.51 | Evals: 78.3%

Iter 226 | Best: -6.466672 | Curr: -2.773635 | Rate: 0.50 | Evals: 78.7%

Iter 227 | Best: -6.466672 | Curr: -2.802841 | Rate: 0.50 | Evals: 79.0%

Iter 228 | Best: -6.466672 | Curr: -2.495180 | Rate: 0.49 | Evals: 79.3%

Iter 229 | Best: -6.493461 | Rate: 0.50 | Evals: 79.7%

Iter 230 | Best: -6.493461 | Curr: -6.483192 | Rate: 0.49 | Evals: 80.0%

Iter 231 | Best: -6.536600 | Rate: 0.49 | Evals: 80.3%

Iter 232 | Best: -6.536600 | Curr: -1.217899 | Rate: 0.48 | Evals: 80.7%

Iter 233 | Best: -6.536600 | Curr: -6.526670 | Rate: 0.47 | Evals: 81.0%

Iter 234 | Best: -6.549792 | Rate: 0.47 | Evals: 81.3%

Iter 235 | Best: -6.549792 | Curr: -2.565535 | Rate: 0.47 | Evals: 81.7%

Iter 236 | Best: -6.551282 | Rate: 0.48 | Evals: 82.0%

Iter 237 | Best: -6.551282 | Curr: -2.612639 | Rate: 0.48 | Evals: 82.3%

Iter 238 | Best: -6.551282 | Curr: -6.550871 | Rate: 0.47 | Evals: 82.7%

Iter 239 | Best: -6.551282 | Curr: -2.764723 | Rate: 0.47 | Evals: 83.0%

Iter 240 | Best: -6.551282 | Curr: -2.834517 | Rate: 0.46 | Evals: 83.3%

Iter 241 | Best: -6.556688 | Rate: 0.46 | Evals: 83.7%

Iter 242 | Best: -6.556688 | Curr: -6.433503 | Rate: 0.46 | Evals: 84.0%

Iter 243 | Best: -6.556688 | Curr: -6.554173 | Rate: 0.45 | Evals: 84.3%

Iter 244 | Best: -6.556688 | Curr: -6.556254 | Rate: 0.45 | Evals: 84.7%

Iter 245 | Best: -6.609256 | Rate: 0.46 | Evals: 85.0%

Iter 246 | Best: -6.609256 | Curr: -6.602122 | Rate: 0.45 | Evals: 85.3%

Iter 247 | Best: -6.629082 | Rate: 0.45 | Evals: 85.7%

Iter 248 | Best: -6.629082 | Curr: -6.625742 | Rate: 0.44 | Evals: 86.0%

Iter 249 | Best: -6.629082 | Curr: -2.769016 | Rate: 0.43 | Evals: 86.3%

Iter 250 | Best: -6.629082 | Curr: -1.160445 | Rate: 0.43 | Evals: 86.7%

Iter 251 | Best: -6.629082 | Curr: -6.593592 | Rate: 0.42 | Evals: 87.0%

Iter 252 | Best: -6.644865 | Rate: 0.43 | Evals: 87.3%

Iter 253 | Best: -6.644865 | Curr: -2.848471 | Rate: 0.42 | Evals: 87.7%

Iter 254 | Best: -6.644865 | Curr: -2.883951 | Rate: 0.42 | Evals: 88.0%

Iter 255 | Best: -6.656519 | Rate: 0.43 | Evals: 88.3%

Iter 256 | Best: -6.656519 | Curr: -2.883172 | Rate: 0.43 | Evals: 88.7%

Iter 257 | Best: -6.656519 | Curr: -6.650707 | Rate: 0.43 | Evals: 89.0%

Iter 258 | Best: -6.656519 | Curr: -2.940141 | Rate: 0.42 | Evals: 89.3%

Iter 259 | Best: -6.656519 | Curr: -2.957787 | Rate: 0.42 | Evals: 89.7%

Iter 260 | Best: -6.656519 | Curr: -2.957092 | Rate: 0.42 | Evals: 90.0%

Iter 261 | Best: -6.681915 | Rate: 0.42 | Evals: 90.3%

Iter 262 | Best: -6.681915 | Curr: -2.769716 | Rate: 0.42 | Evals: 90.7%

Iter 263 | Best: -6.681915 | Curr: -2.769670 | Rate: 0.41 | Evals: 91.0%

Iter 264 | Best: -6.681915 | Curr: -2.974068 | Rate: 0.40 | Evals: 91.3%

Iter 265 | Best: -6.681915 | Curr: -1.776090 | Rate: 0.39 | Evals: 91.7%

Iter 266 | Best: -6.686838 | Rate: 0.39 | Evals: 92.0%

Iter 267 | Best: -6.686838 | Curr: -2.848183 | Rate: 0.39 | Evals: 92.3%

Iter 268 | Best: -6.688091 | Rate: 0.39 | Evals: 92.7%

Iter 269 | Best: -6.690468 | Rate: 0.40 | Evals: 93.0%

Iter 270 | Best: -6.692634 | Rate: 0.41 | Evals: 93.3%

Iter 271 | Best: -6.696097 | Rate: 0.42 | Evals: 93.7%

Iter 272 | Best: -6.696097 | Curr: -2.948536 | Rate: 0.42 | Evals: 94.0%

Iter 273 | Best: -6.702532 | Rate: 0.42 | Evals: 94.3%

Iter 274 | Best: -6.702532 | Curr: -2.886005 | Rate: 0.41 | Evals: 94.7%

Iter 275 | Best: -6.702532 | Curr: -2.869002 | Rate: 0.40 | Evals: 95.0%

Iter 276 | Best: -6.702532 | Curr: -3.228620 | Rate: 0.39 | Evals: 95.3%

Iter 277 | Best: -6.712743 | Rate: 0.39 | Evals: 95.7%

Iter 278 | Best: -6.712743 | Curr: -6.707572 | Rate: 0.39 | Evals: 96.0%

Iter 279 | Best: -6.727136 | Rate: 0.40 | Evals: 96.3%

Iter 280 | Best: -6.727136 | Curr: -2.896340 | Rate: 0.39 | Evals: 96.7%

Iter 281 | Best: -6.734786 | Rate: 0.39 | Evals: 97.0%

Iter 282 | Best: -6.735457 | Rate: 0.39 | Evals: 97.3%

Iter 283 | Best: -6.735457 | Curr: -3.366003 | Rate: 0.39 | Evals: 97.7%

Iter 284 | Best: -6.735457 | Curr: -6.703459 | Rate: 0.39 | Evals: 98.0%

Iter 285 | Best: -6.735457 | Curr: -6.733725 | Rate: 0.38 | Evals: 98.3%

Iter 286 | Best: -6.743722 | Rate: 0.38 | Evals: 98.7%

Iter 287 | Best: -6.743722 | Curr: -2.895962 | Rate: 0.37 | Evals: 99.0%

Iter 288 | Best: -6.744144 | Rate: 0.38 | Evals: 99.3%

Iter 289 | Best: -6.745467 | Rate: 0.38 | Evals: 99.7%

Iter 290 | Best: -6.745467 | Curr: -3.489173 | Rate: 0.37 | Evals: 100.0%

print(f"[10D] Sklearn Kriging: min y = {result_micha.fun:.4f} at x = {result_micha.x}")

print(f"Number of function evaluations: {result_micha.nfev}")

print(f"Number of iterations: {result_micha.nit}")

[10D] Sklearn Kriging: min y = -6.7455 at x = [2.23602788 2.71699133 2.22531978 2.48054435 2.62573334 1.85333282 2.21989757 1.36205952 1.28722362 1.21556365]

Number of function evaluations: 300

Number of iterations: 290

5.2.4 Visualize Optimization Progress

import matplotlib.pyplot as plt

# Plot the optimization progress

plt.figure(figsize=(10, 6))

plt.plot(np.minimum.accumulate(opt_micha.y_), 'b-', linewidth=2)

plt.xlabel('Function Evaluations', fontsize=12)

plt.ylabel('Best Objective Value', fontsize=12)

plt.title('10D Michalewicz: Sklearn Kriging Progress', fontsize=14)

plt.grid(True, alpha=0.3)

plt.tight_layout()

plt.show()

5.2.5 Evaluation of Multiple Repeats

To perform 30 repeats and collect statistics:

# Perform 30 independent runs

n_repeats = 30

results = []

print(f"Running {n_repeats} independent optimizations...")

for i in range(n_repeats):
    kernel_i = ConstantKernel(1.0, (1e-2, 1e12)) * Matern(
        length_scale=1.0,
        length_scale_bounds=(1e-4, 1e2),
        nu=2.5
    )
    surrogate_i = GaussianProcessRegressor(kernel=kernel_i, n_restarts_optimizer=100)
    opt_i = SpotOptim(
        fun=fun,
        bounds=bounds,
        n_initial=n_initial,
        max_iter=max_iter,
        surrogate=surrogate_i,
        seed=seed + i,  # Different seed for each run
        verbose=0
    )
    result_i = opt_i.optimize()
    results.append(result_i.fun)
    if (i + 1) % 10 == 0:
        print(f" Completed {i + 1}/{n_repeats} runs")

# Compute statistics

mean_result = np.mean(results)

std_result = np.std(results)

min_result = np.min(results)

max_result = np.max(results)

print(f"\nResults over {n_repeats} runs:")

print(f" Mean of best values: {mean_result:.6f}")

print(f" Std of best values: {std_result:.6f}")

print(f" Min of best values: {min_result:.6f}")

print(f" Max of best values: {max_result:.6f}")

5.3 Comparison: SpotOptim vs SpotPython

The SpotOptim package provides a scipy-compatible interface for Bayesian optimization with the following key features:

- Scipy-compatible API: Returns OptimizeResult objects that work seamlessly with scipy’s optimization ecosystem

- Custom Surrogates: Supports any sklearn-compatible surrogate model (as demonstrated with GaussianProcessRegressor)

- Flexible Interface: Simplified parameter specification with bounds, n_initial, and max_iter

- Analytical Test Functions: Built-in test functions (rosenbrock, ackley, michalewicz) for benchmarking

The main differences from spotpython are:

- SpotOptim: Uses bounds, n_initial, max_iter parameters with scipy-style interface

- SpotPython: Uses fun_control, design_control, surrogate_control with more complex configuration

Both packages support custom surrogates and provide powerful Bayesian optimization capabilities.

5.4 Summary

This notebook demonstrated how to:

- Use SpotOptim with sklearn’s Gaussian Process Regressor (Matern kernel) as a surrogate

- Optimize 6D Rosenbrock function with 100 evaluations

- Optimize 10D Michalewicz function with 300 evaluations

- Visualize optimization progress

- Perform multiple independent runs for statistical analysis

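In the single runs shown here, SpotOptim with the sklearn surrogate reduced the best 6D Rosenbrock value from 321.83 (initial design) to 4.44 within 100 evaluations, and the best 10D Michalewicz value from -1.91 to -6.75 within 300 evaluations.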
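Single-run outcomes depend on the seed, which is why Sections 5.1.5 and 5.2.5 repeat each experiment 30 times. Those sections use `np.std` with its default `ddof=0`, which gives the population standard deviation. With only 30 runs, the sample standard deviation (`ddof=1`) and the median can be useful additions. The helper below is a hypothetical sketch of such a summary, and the input values are placeholders rather than results from this notebook.

```python
import numpy as np


def summarize_runs(best_values):
    """Summary statistics for per-run best objective values (hypothetical helper)."""
    v = np.asarray(best_values, dtype=float)
    return {
        "mean": float(v.mean()),
        "std_population": float(v.std(ddof=0)),  # what np.std returns by default
        "std_sample": float(v.std(ddof=1)),      # divides by n - 1 instead of n
        "median": float(np.median(v)),
        "min": float(v.min()),
        "max": float(v.max()),
    }


# Placeholder values only; these are not results from the notebook
demo = summarize_runs([1.0, 2.0, 3.0, 4.0])
for name, value in demo.items():
    print(f"{name:>15s}: {value:.6f}")
```

Passing the `results` list from either repeat loop to `summarize_runs` would give the same mean, minimum, and maximum as the original cells, plus the two extra statistics.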

5.5 Jupyter Notebook

- The Jupyter-Notebook of this chapter is available on GitHub in the Sequential Parameter Optimization Cookbook Repository